Today Baidu’s Silicon Valley AI Lab (SVAIL) released Warp-CTC, open source software for the machine learning community. Warp-CTC is an implementation of the CTC algorithm for CPUs and NVIDIA GPUs. With Warp-CTC, researchers can call the C library directly from their projects or use Warp-CTC with Torch through the supplied bindings.

According to SVAIL, Warp-CTC is 10 to 400 times faster than existing CTC implementations, which makes end-to-end deep learning easier and faster so researchers can make progress more rapidly.

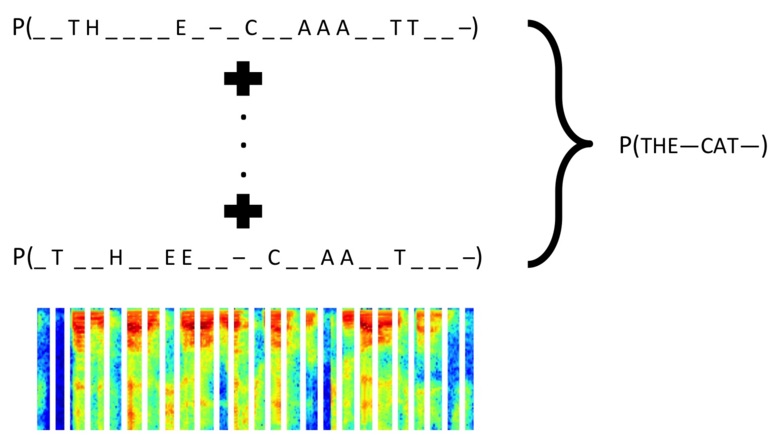

Connectionist Temporal Classification (CTC) is a loss function for supervised learning on sequence data that does not require an alignment between the input data and the labels. For example, CTC can be used to train end-to-end speech recognition systems, which is how Baidu’s Silicon Valley AI Lab has been using it.
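To make "no alignment needed" concrete: CTC scores a label sequence by summing over every frame-level path that collapses to it, where collapsing merges repeated symbols and then drops a special blank symbol. The minimal Python sketch below shows that collapse map and the standard forward (alpha) recursion, which computes the sum in time proportional to frames × labels instead of enumerating every path. It assumes index 0 is the blank and that the inputs are already per-frame log-probabilities. The function names are hypothetical, and this is a plain illustration of the algorithm, not Warp-CTC's API or its CPU/GPU implementation.

```python
import math
from itertools import product

BLANK = 0  # assumption: symbol index 0 is the CTC blank


def collapse(path, blank=BLANK):
    """CTC many-to-one map: merge consecutive repeats, then drop blanks."""
    out, prev = [], None
    for s in path:
        if s != prev and s != blank:
            out.append(s)
        prev = s
    return out


def logsumexp(*xs):
    """Numerically stable log(sum(exp(x) for x in xs))."""
    m = max(xs)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(x - m) for x in xs))


def ctc_loss(log_probs, labels, blank=BLANK):
    """Negative log-likelihood of `labels` under CTC.

    log_probs: T rows, each a list of per-symbol log-probabilities.
    labels: target sequence without blanks.
    """
    # Extended sequence with blanks between and around labels: b, l1, b, l2, ..., b
    ext = [blank]
    for lab in labels:
        ext += [lab, blank]
    S = len(ext)

    # alpha[s]: log-probability of all path prefixes ending at ext[s]
    alpha = [-math.inf] * S
    alpha[0] = log_probs[0][ext[0]]
    if S > 1:
        alpha[1] = log_probs[0][ext[1]]

    for t in range(1, len(log_probs)):
        new = [-math.inf] * S
        for s in range(S):
            terms = [alpha[s]]  # stay on the same symbol
            if s >= 1:
                terms.append(alpha[s - 1])  # advance by one position
            # Skipping a blank is allowed only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                terms.append(alpha[s - 2])
            new[s] = logsumexp(*terms) + log_probs[t][ext[s]]
        alpha = new

    # Valid paths end on the last label or the trailing blank.
    total = alpha[0] if S == 1 else logsumexp(alpha[S - 1], alpha[S - 2])
    return -total


def brute_force_prob(probs, labels):
    """Reference check: sum path probabilities by enumerating every path."""
    T, K = len(probs), len(probs[0])
    total = 0.0
    for path in product(range(K), repeat=T):
        if collapse(path) == labels:
            p = 1.0
            for t, k in enumerate(path):
                p *= probs[t][k]
            total += p
    return total
```

On small inputs, `math.exp(-ctc_loss(log_probs, labels))` should match `brute_force_prob(probs, labels)`. Working in log space avoids underflow on long sequences, which is why the recursion uses `logsumexp` rather than multiplying raw probabilities.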

SVAIL engineers developed Warp-CTC to improve the scalability of models trained with CTC while building their Deep Speech end-to-end speech recognition system. “We found that currently available implementations of CTC generally required significantly more memory and/or were tens to hundreds of times slower.”

Why did SVAIL open-source Warp-CTC? “We want to make end-to-end deep learning easier and faster so researchers can make more rapid progress. A lot of open source software for deep learning exists, but previous code for training end-to-end networks for sequences (like our Deep Speech engine) has been too slow. We want to start contributing to the machine learning community by sharing an important piece of code that we created.”

Sign up for our insideHPC Newsletter