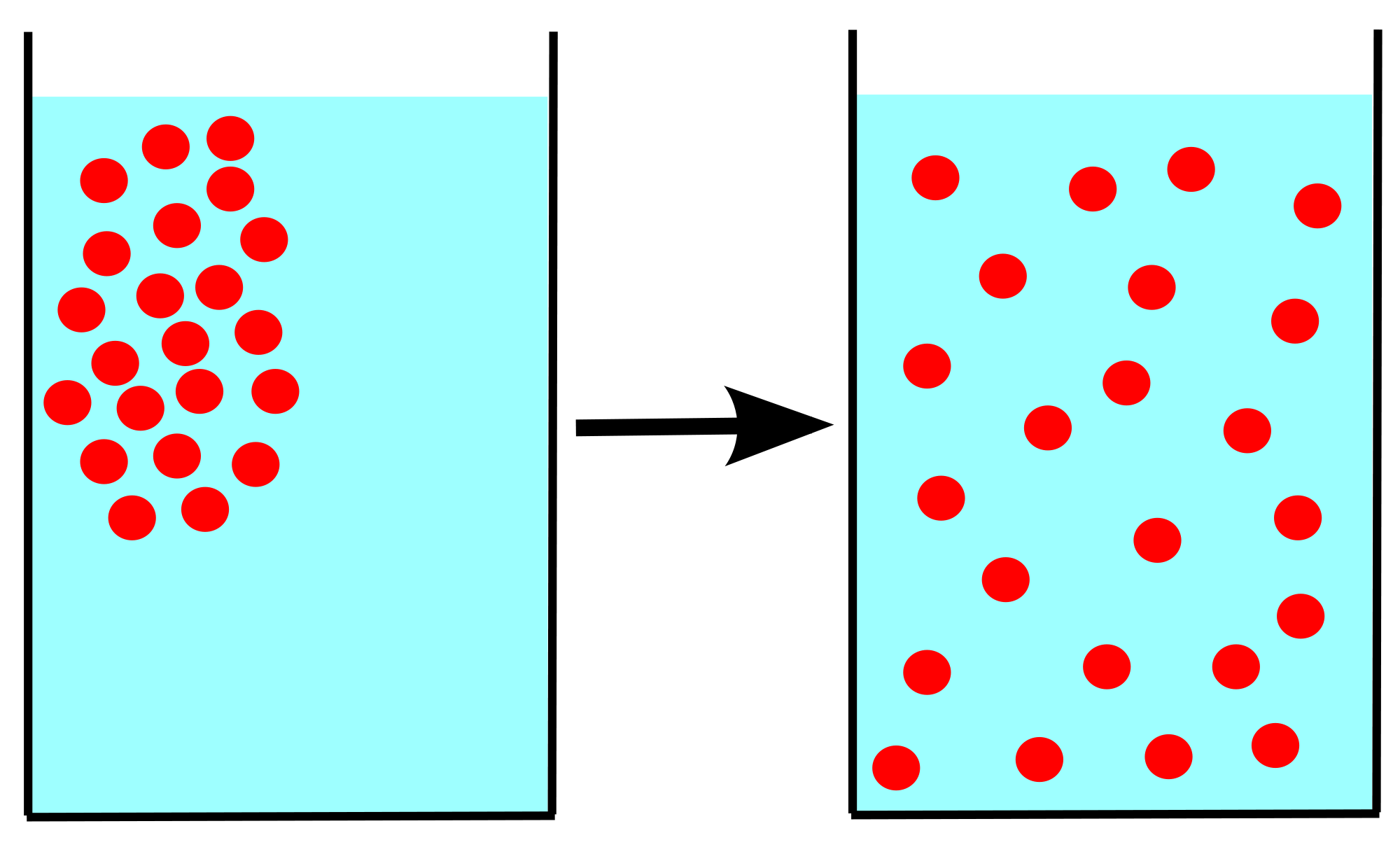

When using the Intel Xeon Phi coprocessor it is important to understand the algorithm well, in order to determine the best methods to gain maximum performance. An examples of a good, fairly simple application is a diffusion calculation. Particles can move through a volume over time.

When using the Intel Xeon Phi coprocessor it is important to understand the algorithm well, in order to determine the best methods to gain maximum performance. An examples of a good, fairly simple application is a diffusion calculation. Particles can move through a volume over time.

In a 3D example, the iterations are performed over time, then over the X, Y, and Z the particle movement is calculated. After each iteration, the new state becomes the old state and the next time iteration is performed. Boundary conditions become important, as the correct computations cannot be allowed to overflow the edges of the cube. A calculation of this type can compiled to run on the Intel Xeon Phi coprocessor through the use of compiler directives, with the –mmic flag used.

The next step is to look at using OpenMP directives to create multiple threads to distribute the work over many threads and cores. A key OpenMP directive, #pragma omp for collapse, will collapse the inner two loops into one. The developer can then set the number of threads and cores to use and return the application to determine the performance. In one test case, three threads per physical core shows the best performance, by quite a lot compared to just using one or two threads per core.

Vectorization can then be investigated, as another method to gain performance. In some cases, a compiler can determine which code to vectorize, but in other instances the developer must make these decisions. In the test case of calculating the diffusion of particles, the inner loop (after the collapsing as described above) can be vectorized by inserting #pragma simd before the inner most loop. By having the developer involved in this, knowledge of the dependencies is critical for gaining performance.

In a simple example like this, the speedups using the Intel Xeon Phi coprocessor as compared to the baseline implementation was about 300 X, when using both OpenMP scaling and vectorization.

In a simple example like this, the speedups using the Intel Xeon Phi coprocessor as compared to the baseline implementation was about 300 X, when using both OpenMP scaling and vectorization.

Source: Intel, USA

Transform Data into Opportunity Accelerate analysis: Intel® Data Analytics Acceleration Library.