Today IBM announced that it is the first major cloud provider to make the NVIDIA Tesla P100 GPU accelerator available globally on the cloud. By combining NVIDIA’s acceleration technology with IBM’s Cloud platform, businesses can more quickly and efficiently run compute-heavy workloads, such as artificial intelligence, deep learning and high-performance data analytics.

“As the AI era takes hold, demand continues to surge for our GPU-accelerated computing platform in the cloud,” said Ian Buck, general manager, Accelerated Computing, NVIDIA. “These new IBM Cloud offerings will provide users with near-instant access to the most powerful GPU technologies to date – enabling them to create applications to address complex problems that were once unsolvable.”

With more than 160 zettabytes of data expected to be generated by 2025, according to IDC, enterprises of all sizes are increasingly relying on cognitive and deep learning applications to make sense of these massive volumes and varieties of data. GPUs, or graphics processing units, work in conjunction with a server’s central processing unit (CPU) to accelerate application performance, helping these compute-heavy data workloads run more quickly and efficiently.

Since 2014, IBM has been working closely with NVIDIA to bring the latest GPU technology to the cloud. IBM was the first cloud provider to introduce the NVIDIA Tesla K80 GPU accelerator in 2015 and the Tesla M60 in 2016, and the introduction of the Tesla P100 builds on IBM’s leadership in bringing the latest NVIDIA GPU technology to the cloud for machine learning, AI and HPC workloads.

“Deep learning and AI was once reserved for research organizations and universities,” said Subbu Rama, founder and CEO, BitFusion. “By collaborating with IBM Cloud, BitFusion is changing that dynamic, enabling data scientists and developers to jumpstart innovation.”

As the first cloud provider to enable the use of the Tesla P100 GPU accelerator globally on the cloud, IBM is making it more accessible for businesses in industries including healthcare, financial services, energy and manufacturing to extract valuable insight from big data. For example, clients in the financial services industry can use GPUs on the IBM Cloud to run complex risk calculations in seconds; healthcare companies can more quickly analyze medical images or identify genetic variations; and energy companies can identify new ways to improve operations.

“The latest NVIDIA GPU technology delivered on the IBM Cloud is opening the door for enterprises of all sizes to use cognitive and AI to address complex big data challenges,” said John Considine, general manager, Cloud Infrastructure, IBM. “IBM’s global network of 50 cloud data centers, along with its advanced cognitive and GPU capabilities, is helping to accelerate the pace of client innovation.”

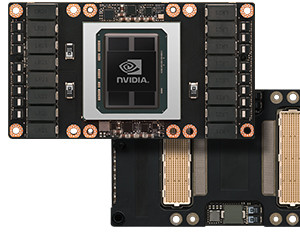

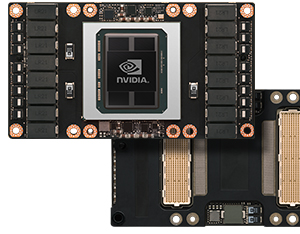

Clients will have the option to equip individual IBM Bluemix bare metal cloud servers with two NVIDIA Tesla P100 accelerator cards. The Tesla P100 provides 5.3 teraFLOPS of double-precision performance and 16 gigabytes of GPU memory in a single server to accelerate compute-intensive workloads. The addition of a Tesla P100 accelerator can deliver up to 65 percent more machine learning capabilities and 50 times the performance of its predecessor, the Tesla K80. The combination of IBM’s connectivity and bare metal servers with the Tesla P100 GPUs provides clients with higher throughput than traditional virtualized servers. This high level of performance allows clients to deploy fewer, more powerful cloud servers and deliver increasingly complex simulations and big data workloads more quickly.

The Tesla P100 joins NVIDIA’s portfolio of GPU offerings on the IBM Cloud, including the Tesla M60, Tesla K80 and Tesla K2 GPUs. IBM plans to make the Tesla P100 GPU accelerators available in May 2017.

This is great news for IBM’s cloud; it’s always nice to see increased adoption of accelerated on-demand computing.

However, I’d like to point out that the Nimbix global public cloud was actually first to market in October of 2016 with the P100 and NVLink. NVLink greatly accelerates machine learning workflows, especially in multi-GPU configurations, and we consider that to be table stakes now for most workflows based on market requirements:

https://www.nimbix.net/blog/2016/10/04/ibm-nvidia-powerful-gpu-cloud/

(also for commentary from HPCwire): https://www.hpcwire.com/2016/10/06/power8-nvlink-coming-nimbix-cloud/

As with all of our offerings, the Nimbix global public cloud is a baremetal service, which again is widely considered table stakes for both traditional HPC and new use cases like ML/DL.

Additionally, we recently deployed NVIDIA’s DGX-1 platform, also available on demand in our global public cloud. The DGX-1 is an eight-way configuration, with NVLink and EDR InfiniBand. Low-latency interconnects are also critical, as we have customers using multiple nodes for deep learning training with various frameworks.

Developers, data scientists, and researchers have choices of single, quad, and eight-way P100 on-demand machine types, all interconnected with the latest InfiniBand technology, and enabled with our PushToCompute for CI/CD of custom PaaS/SaaS workflows. Not only were we first to market, months ahead of the IBM Cloud, but we also have the fastest and most advanced offerings available today among any major provider. You can read more about major providers in this Forbes article mentioning Nimbix as well:

https://www.forbes.com/sites/moorinsights/2017/03/24/nvidia-scores-yet-another-gpu-cloud-for-ai-with-tencent/#3818270f1847

We would appreciate having the record corrected! It’s great when other providers add capabilities, because this helps the general cause, but let’s try not to revise history when it comes to which global providers release offerings first!

Thank you,

Leo Reiter

CTO, Nimbix, Inc.

Thanks, Leo. This was an IBM/NVIDIA press release, so I have forwarded your comments on to them. -Rich