In this video from the 2017 GPU Technology Conference, Rob Ober from Nvidia describes the new HGX-1 reference platform for GPU computing.

In this video from the 2017 GPU Technology Conference, Rob Ober from Nvidia describes the new HGX-1 reference platform for GPU computing.

“Powered by NVIDIA Tesla GPUs and NVIDIA NVLink high-speed interconnect technology; the HGX-1 comes as AI workloads—from autonomous driving and personalized healthcare to superhuman voice recognition—are taking off in the cloud.”

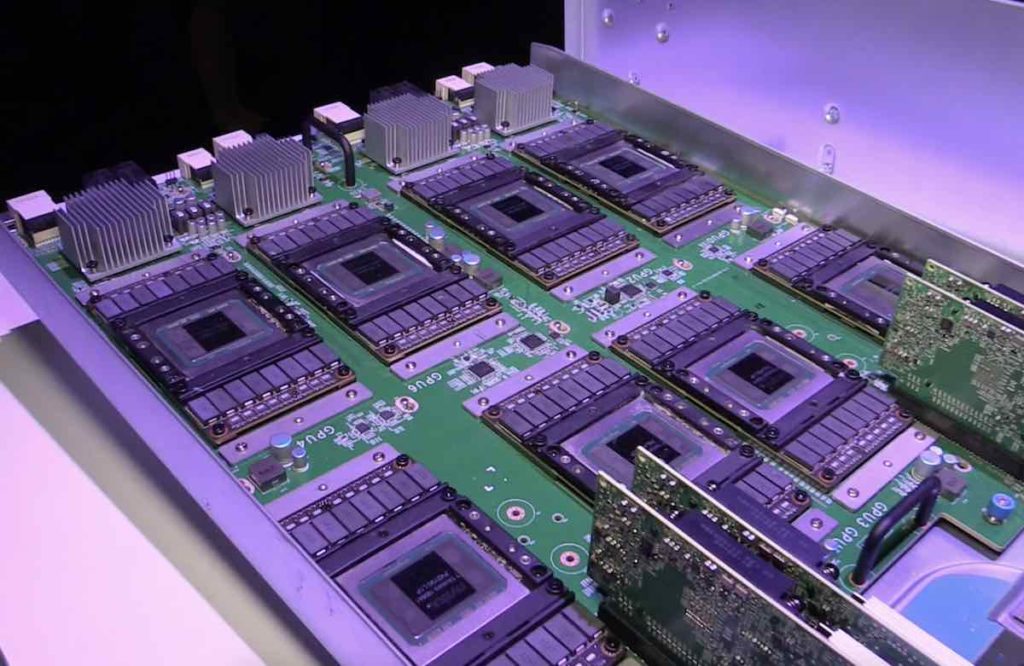

Purpose-built for cloud computing, the HGX-1 enclosure architecture provides revolutionary performance with unprecedented configurability and future-proofing. It taps into the power of eight NVIDIA Tesla GPUs interconnected with the NVLink hybrid cube, that was introduced with the NVIDIA DGX-1 for class-leading performance. Its innovative PCIe switching architecture enables a CPU to be dynamically connected to any number of GPUs. This allows cloud service providers that standardize with a single HGX-1 infrastructure to offer customers a range of CPU and GPU machine instances, while a standard NVLink fabric architecture allows the rich GPU software ecosystem to accelerate AI and other workloads.

With HGX-1, hyperscale data centers can provide optimal performance and flexibility for virtually any accelerated workload, including deep learning training, inference, advanced analytics, and high-performance computing. For deep learning, it provides up to 100X faster performance when compared with legacy CPU-based servers and is estimated to be one-fifth the cost for AI training and one-tenth the cost for AI inferencing.

With its modular design, HGX-1 is suited for deployment in existing data center racks across the globe, offering hyperscale data centers a quick, simple path to be ready for AI. HGX-1 is architected to be Tesla V100 ready and extract the full AI performance that Tesla V100 provides. With a simple drop-in upgrade, Tesla V100 GPUs will create an even more flexible and powerful cloud computing platform.”

With its modular design, HGX-1 is suited for deployment in existing data center racks across the globe, offering hyperscale data centers a quick, simple path to be ready for AI. HGX-1 is architected to be Tesla V100 ready and extract the full AI performance that Tesla V100 provides. With a simple drop-in upgrade, Tesla V100 GPUs will create an even more flexible and powerful cloud computing platform.”

The HGX-1 AI accelerator provides extreme performance scalability to meet the demanding requirements of fast growing machine learning workloads, and its unique design allows it to be easily adopted into existing data centers around the world,” said Kushagra Vaid, the GM of Azure Hardware Infrastructure at Microsoft.