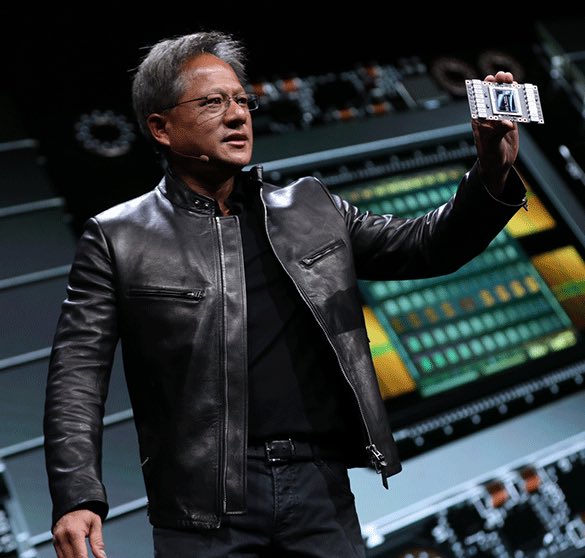

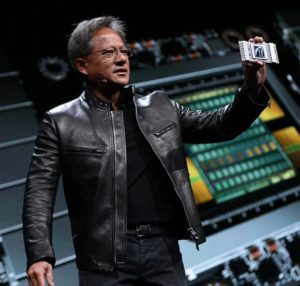

At this week's GPU Technology Conference, NVIDIA CEO Jensen Huang on Wednesday launched Volta, a new GPU architecture that delivers 5x the performance of its predecessor.

Over the course of two hours, Huang introduced a lineup of new Volta-based AI supercomputers, including a powerful new version of NVIDIA's DGX-1 deep learning appliance; announced the Isaac robot-training simulator; unveiled the NVIDIA GPU Cloud platform, which gives developers access to the latest optimized deep learning frameworks; and revealed a partnership with Toyota to help build a new generation of autonomous vehicles.

Built with 21 billion transistors, the newly announced Volta V100 delivers deep learning performance equal to 100 CPUs. Representing an investment by NVIDIA of more than $3 billion, the processor is built “at the limits of photolithography,” Huang told the crowd.

Volta will be supported by new releases of the deep learning frameworks Caffe2, Microsoft Cognitive Toolkit, MXNet, and TensorFlow, letting users quickly get the most out of Volta’s power.

“We’re on our second generation of GPUs in the cloud,” said Jason Zander, corporate vice president of Microsoft Azure. “We just announced P40s and P100s, but we really love Volta. My job is to ensure people use the Azure Cloud, and people want to use what’s available immediately, without waiting. We want data scientists and developers to focus on models and less on the plumbing.”

At insideHPC, we’ve spent the past week at GTC gathering interviews, podcasts, and much more, so please stay tuned!