When it comes to getting the most performance out of your HPC system, it’s the small things that count. In this Sponsored Post, David Lombard, Sr. Principal Engineer at Intel Corporation, explains why.

The main challenge in setting up and maintaining an HPC environment is ensuring that it meets its requirements for usability, performance, and scalability. Those requirements vary considerably with the circumstances, and one of the great catchphrases (and standard answers) in HPC is, ‘it depends’. The workflows, the applications, and the size of the user community drive the system architecture and the hardware choices, such as compute node configuration, fabric, and file system. System size matters in its own right, because very large-scale systems are extremely intolerant of performance degradation. The key problem at that scale is that small factors have a big effect: a disruption that is tolerable on a small system can leave a large system unusable, or badly hobbled in performance.
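To make the scale effect concrete, here is a minimal sketch using a simple probabilistic model that is our assumption, not something taken from a specific system: the application is bulk-synchronous (every step waits for the slowest node, as at a global barrier or allreduce), each node independently suffers a small fixed delay with probability `p` per step, and the step costs and probability below are hypothetical numbers chosen only for illustration.

```python
# Sketch (assumed model): bulk-synchronous steps that finish only when the
# slowest node finishes; each node is independently delayed with probability p.

def prob_step_delayed(p: float, nodes: int) -> float:
    """Probability that at least one of `nodes` nodes is delayed in a step."""
    return 1.0 - (1.0 - p) ** nodes


def expected_step_time(base: float, delay: float, p: float, nodes: int) -> float:
    """Expected step time: base cost, plus `delay` whenever any node is delayed.

    Delays on different nodes overlap rather than add, because the step only
    waits for the slowest node.
    """
    return base + delay * prob_step_delayed(p, nodes)


if __name__ == "__main__":
    # Hypothetical numbers: 1 ms compute step, 1 ms hiccup on 0.1% of steps per node.
    base_ms, delay_ms, p = 1.0, 1.0, 0.001
    for n in (1, 16, 256, 4096):
        slowdown = expected_step_time(base_ms, delay_ms, p, n) / base_ms
        print(f"{n:5d} nodes: {slowdown:.2f}x slowdown")
```

Under these assumed numbers, a hiccup that costs a single node about 0.1% costs a 4,096-node job nearly a 2x slowdown, because almost every step now has at least one delayed node holding up the rest.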

One of the great generalizations is that HPC is about extremes, and it happens to be true. Small disruptions remind us that we must pay close attention to the edges of performance to fully exploit the system, so we have to be meticulous and methodical about what we focus on and how we intend to get the performance we need. Reliability and scalability matter just as much, and the sheer size of an HPC system affects performance, reliability, and scalability alike, because size produces non-linear behaviors. This leads us down the route of specialized operating systems and applications, and many other details spanning a wide variety of disciplines across the hardware and software domains, all of which we have to bring together.

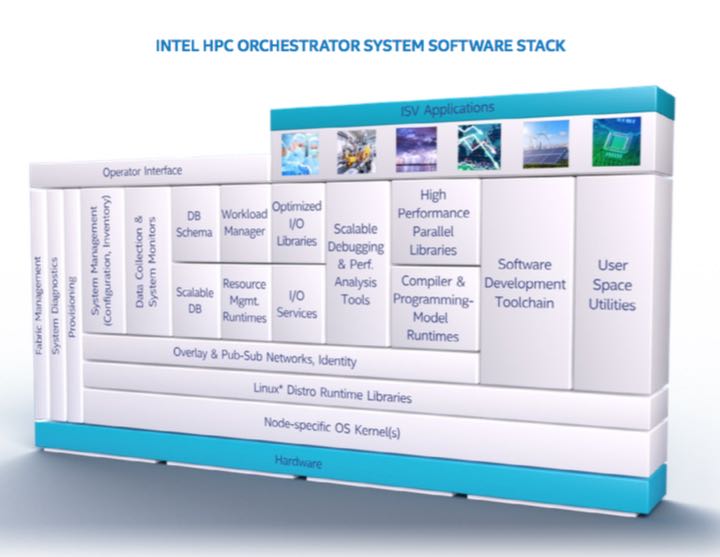

Middleware, or more precisely HPC middleware, is the set of software components that differentiates an HPC system from racks of individual servers. While Intel provides some middleware components, particularly the development toolchain (e.g., Intel® Parallel Studio XE, including Intel® MPI and the Intel® Math Kernel Library), the majority of components come from the HPC community itself and the broader Linux community. In fact, there is a dedicated Linux Foundation project, OpenHPC, focused on defining the required HPC components and guiding their development so they work together effectively.

OpenHPC includes components for scalable provisioning, enabling HPC systems to manage the software configuration on tens to thousands of compute nodes; system health monitoring, keeping track of the hardware and software health of the compute nodes; and workload management, enabling the effective and efficient use of the system’s compute resources via on-demand and batch workflows.

Absent OpenHPC and its commercial incarnation, Intel® HPC Orchestrator, system integrators and system administrators would need to build each middleware component from its original open source project. This is certainly achievable, but it requires broad expertise to select the appropriate open source components and to weigh the tradeoffs among them, both individually and in combination. Once selected, the software must be configured, built, integrated, and finally validated as a complete, at-scale software stack.

Besides eliminating much of this work for users, Intel® HPC Orchestrator extends the OpenHPC software with additional care and focus. It provides a fully integrated and validated software stack that takes components available throughout the community, from the operating system offered by Red Hat to Altair’s PBS Professional workload manager, and puts them into a single “package.” All the necessary software is there, so you can focus on using the system rather than building and maintaining it. Intel runs integration and performance benchmarks and compares the results against expectations for how a system with a particular hardware architecture, number of compute nodes, fabric, and file system configuration should perform.

In essence, Intel HPC Orchestrator takes optimal HPC design rules and considerations and embeds them in the software stack, so the processes we’ve used and the attention we’ve paid to very small details can be leveraged into a more robust, better-performing system. It encapsulates the important tradeoffs and attends to the small details that greatly affect how well the underlying hardware features are exploited for performance and scalability.

We entered the 1990s with shared memory parallel systems and exited with distributed memory systems. This fueled a revolution that lowered the price of entry for HPC, because we could take components from a variety of sources (Intel processors, Ethernet networks, Linux, middleware, etc.) and assemble a complete HPC system that provided all the hardware and software needed to run applications at a level of performance previously limited to a few fabulously expensive supercomputers. Intel has been involved since the beginning of this transition from very high-end, multi-million dollar HPC systems to much more affordable systems built with commercial off-the-shelf (COTS) processors, networks, and, more recently, non-volatile memory (NVM).

Intel HPC Orchestrator is another important enabler for the widespread adoption of HPC. Today, many system integrators who aren’t necessarily HPC experts can build and sell extremely powerful, easy-to-manage HPC systems to their customers. This is partly because Intel, through Intel HPC Orchestrator, defines how to build the system and provides guidance on the best way to configure it for performance, scalability, and reliability.

Between the integrator’s knowledge of the hardware and Intel HPC Orchestrator, customers can acquire a very credible and capable HPC system that two decades ago would have been available only to a small number of companies with enormous HPC budgets. That’s why we refer to these trends as the “democratization of HPC.” Our goal is to make HPC available to public and private organizations of virtually every size that can use it for discovery, to make better decisions, to build better products, and to remain competitive in the future.

More on Intel® HPC Orchestrator at intel.com/hpcorchestrator