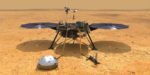

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

Eviden Caught up in Atos’ Financial, Acquisition Struggles

With a longstanding TOP500 supercomputing heritage, a leadership position in European supercomputing, a commitment to build Europe’s first exascale-class system and with major techno-geopolitical stakes on the line, the financial difficulties of Atos and, by extension, its Eviden HPC unit….

Exascale: E4S Software Deployments Boost Industry Acceptance of Accelerators

Last November at SC23, industry leaders reflected on successful deployments of the Exascale Computing Project’s Extreme-Scale Scientific Software Stack (E4S). They highlighted how E4S at Pratt & Whitney, ExxonMobil, TAE Technologies, and GE Aerospace….

Exascale’s New Software Frontier: Combustion-PELE

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The scientific challenge: Diesel and gas-turbine engines drive the world’s trains, planes, and ships, but the fossil fuels that power these engines produce much of the carbon emissions […]

DOE: E4S for Extreme-Scale Science Now Supports Nvidia Grace and Grace Hopper GPUs

E4S, the open-source Extreme-Scale Scientific Software Stack for HPC-AI scientific applications, now incorporates AI/ML libraries and expands GPU support to include the Nvidia Grace and Grace Hopper architectures. E4S is a community effort to provide open-source….

Exascale’s New Software Frontier: LatticeQCD for Particle Physics

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The science challenge: One of the most challenging goals for researchers in the fields of nuclear and particle physics is to better understand the interactions between quarks and gluons — […]

Exascale: Bringing Engineering and Scientific Acceleration to Industry

At SC23, held in Denver, Colorado, last November, members of ECP’s Industry and Agency Council, composed of U.S. business executives, government agencies, and independent software vendors, reflected on how ECP and the move to exascale are impacting current and planned use of HPC….

HPC News Bytes 20240219: AI Safety and Governance, Running CUDA Apps on ROCm, DOE’s SLATE, New Advanced Chips

Happy Presidents’ Day morning to everyone! Today’s HPC News Bytes races (6:22) around the HPC-AI landscape with comments on: developments in AI security and governance, running CUDA (NVIDIA) apps on ROCm (AMD), DOE’s Exascale Software Linear….

NVIDIA Reveals Eos Supercomputer: 4,600 H100 GPUs for 18 AI Exaflops

NVIDIA on Thursday released a video that offers the first public look at Eos (pictured here), a monster 18.4 exaflops FP8 AI supercomputer powered by 576 DGX H100 systems… NVIDIA said Eos would be ranked No. 9 on the TOP500 list of the world’s fastest supercomputers, according….