With demand growing for intelligent solutions such as autonomous driving, digital assistants, and recommender systems, enterprises of every type are asking for AI-powered applications for surveillance, retail, manufacturing, smart cities and homes, office automation, and more, with new uses emerging every day. Increasingly, AI applications are powered by smart inference-based inputs. This sponsored post from Intel explores how inference engines can be used to power AI apps, audio, and video, and highlights the capabilities of Intel’s Distribution of OpenVINO (Open Visual Inference and Neural Network Optimization) toolkit.

Are Memory Bottlenecks Limiting Your Application’s Performance?

Often, it’s not enough to parallelize and vectorize an application to get the best performance. You also need to take a deep dive into how the application is accessing memory to find and eliminate bottlenecks in the code that could ultimately be limiting performance. Intel Advisor, a component of both Intel Parallel Studio XE and Intel System Studio, can help you identify and diagnose memory performance issues, and suggest strategies to improve the efficiency of your code.

Five Reasons to Run Your HPC Applications in The Cloud

In this new infographic from Intel, you’ll see examples of successful, real-world HPC deployments on virtually unlimited, Intel® Xeon® technology-powered AWS compute instances that provide scalability and agility not attainable on-premises. Download the new report to discover how to innovate without limits, reduce costs, and get your results to market faster by moving your HPC workloads to AWS.

Unified Deep Learning Configurations and Emerging Applications

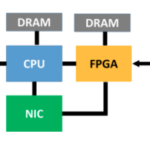

This is the final post in a five-part series from a report exploring the potential of machine learning and a variety of computational approaches, including CPU, GPU and FPGA technologies. This article explores unified deep learning configurations and emerging applications.

The AI Revolution: Unleashing Broad and Deep Innovation

For the AI revolution to move into the mainstream, cost and complexity must be reduced, so smaller organizations can afford to develop, train and deploy powerful deep learning applications. It’s a tough challenge. The following guest article from Intel explores how businesses can optimize AI applications and integrate them with their traditional workloads.

Considerations for Applications to Transition to a Cloud

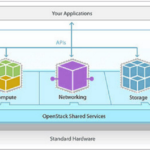

This is the third entry in an insideHPC series that explores the HPC transition to the cloud, and what your business needs to know about this evolution. This series, compiled in a complete Guide, covers cloud computing for HPC, considerations for applications to transition to the cloud, IaaS components, OpenStack fundamentals and more.

Why the OS is So Important when Running HPC Applications

This is the fourth entry in an insideHPC series that explores the HPC transition to the cloud, and what your business needs to know about this evolution. This series, compiled in a complete Guide, covers cloud computing for HPC, why the OS is important when running HPC applications, OpenStack fundamentals and more.

Research for New Technology Using Supercomputers

This paper presents our approach to research and development for four applications in which simulations on super-large-scale computing systems are expected to prove useful.