Igor Sfiligoi from SDSC gave this talk at the ECSS Symposium. “I have recently helped IceCube expand their resource pool by a few orders of magnitude, first to 380 PFLOP32s for a few hours and later to 170 PFLOP32s for a whole workday. In this session I will explain what was done and how, alongside an overview of why IceCube needs so much compute.”

Second GPU Cloudburst Experiment Paves the Way for Large-scale Cloud Computing

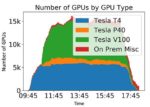

Researchers at SDSC and the Wisconsin IceCube Particle Astrophysics Center have successfully completed a second computational experiment using thousands of GPUs across Amazon Web Services, Microsoft Azure, and the Google Cloud Platform. “We drew several key conclusions from this second demonstration,” said SDSC’s Sfiligoi. “We showed that the cloudburst run can actually be sustained during an entire workday instead of just one or two hours, and have moreover measured the cost of using only the two most cost-effective cloud instances for each cloud provider.”

Is Ubiquitous Cloud Bursting on the Horizon for Universities?

In this special guest feature from Scientific Computing World, Mahesh Pancholi from OCF writes that a growing number of universities are taking advantage of public cloud infrastructures that are widely available from large companies like Amazon, Google and Microsoft. “Public cloud providers are surveying the market and partnering with companies, like OCF, for their pedigree in providing solutions to the UK Research Computing community, in order to help universities take advantage of their products by integrating them with the existing infrastructure such as HPC clusters.”

Velocity Compute: PeerCache for HPC Cloud Bursting

In this podcast, Eric Thune from Velocity Compute describes how the company’s PeerCache software optimizes data flow for HPC Cloud Bursting. “By using PeerCache to deliver hybrid cloud bursting, development teams can quickly extend their existing on-premise compute to burst into the cloud for elastic compute power. Your on-premise workflows will run identically in the cloud, without the need for retooling, and the workflow is then moved back to your on-premises servers until the next time you have a peak load.”

Universities step up to Cloud Bursting

In this special guest feature, Mahesh Pancholi from OCF writes that many universities are now engaging in cloud bursting and are regularly taking advantage of public cloud infrastructures that are widely available from large companies like Amazon, Google and Microsoft. “By bursting into the public cloud, the university can offer the latest and greatest technologies as part of its Research Computing Service for all its researchers.”

Adaptive Computing rolls out Moab HPC Suite 9.1.2

Today Adaptive Computing announced the release of Moab 9.1.2, an update which has undergone thousands of quality tests and includes scores of customer-requested enhancements. “Moab is a world leader in dynamically optimizing large-scale computing environments. It intelligently places and schedules workloads and adapts resources to optimize application performance, increase system utilization, and achieve organizational objectives. Moab’s unique intelligent and predictive capabilities evaluate the impact of future orchestration decisions across diverse workload domains (HPC, HTC, Big Data, Grid Computing, SOA, Data Centers, Cloud Brokerage, Workload Management, Enterprise Automation, Workflow Management, Server Consolidation, and Cloud Bursting); thereby optimizing cost reduction and speeding product delivery.”

Adaptive Computing Launches Moab/NODUS for HPC Cloud Bursting

Today Adaptive Computing announced Moab/NODUS Cloud Bursting, making HPC cloud strategies more accessible than ever before. “Considering that public cloud bursting is usually extremely challenging, the Moab/NODUS Cloud Bursting Solution is brilliant in its ability to integrate seamlessly with existing management infrastructure,” said Art Allen, President, Adaptive Computing Enterprises, Inc.

Bright Computing Steps up with Cloud Bursting to Azure at ISC 2017

In this video from ISC 2017, Bill Wagner from Bright Computing describes the company’s new capabilities for Cloud Bursting to Microsoft Azure. “Cloud bursting from an on-premises cluster to Microsoft Azure offers companies an efficient, cost-effective, secure and flexible way to add additional resources to their HPC infrastructure. Bright’s integration with Azure also gives our clients the ability to build an entire off-premises cluster for compute-intensive workloads in the Azure cloud platform.”

Local or Cloud HPC?

Cloud computing has become another tool for the HPC practitioner. For some organizations, the ability of cloud computing to shift costs from capital to operating expenses is very attractive. Because every cloud solution relies on the Internet to move data in and out, a basic analysis of where data originates and where results must end up is needed. Here’s an overview of when local or cloud HPC makes the most sense.
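To make the data-movement analysis concrete, here is a minimal sketch that compares waiting in a local queue against staging data to and from the cloud. It assumes compute time is the same in both places and that a link achieves a fixed fraction of its nominal bandwidth; the function names, the 0.7 efficiency default, and the parameters are hypothetical and not taken from any particular tool.

```python
def transfer_hours(data_gb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate hours to move data_gb gigabytes over a link_gbps link.

    efficiency is an ASSUMED fraction of nominal bandwidth actually achieved.
    """
    gigabits = data_gb * 8  # gigabytes -> gigabits
    seconds = gigabits / (link_gbps * efficiency)
    return seconds / 3600


def prefer_cloud(data_in_gb: float, data_out_gb: float, link_gbps: float,
                 local_wait_h: float, cloud_wait_h: float = 0.0) -> bool:
    """Return True if bursting finishes sooner than waiting in the local queue.

    Hypothetical rule of thumb: cloud start-up time plus staging input and
    output must beat the local queue wait. Compute time is assumed equal.
    """
    cloud_overhead = cloud_wait_h + transfer_hours(data_in_gb + data_out_gb, link_gbps)
    return cloud_overhead < local_wait_h


if __name__ == "__main__":
    # 110 GB over a 10 Gb/s link vs. a 4-hour local queue wait
    print(prefer_cloud(100, 10, 10, local_wait_h=4))
```

With 110 GB over a 10 Gb/s link the staging overhead is a couple of minutes, so bursting wins against a four-hour queue; with tens of terabytes over a 1 Gb/s link the same check flips, which is why data origins and destinations matter as much as compute capacity.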

Reducing the Time to Science with Efficient Clouds

In this special guest feature from Scientific Computing World, Dr Bruno Silva from The Francis Crick Institute in London writes that new cloud technologies will make the cloud even more important to scientific computing. “The emergence of public cloud and the ability to cloud-burst is actually the real game-changer. Because of its ‘infinite’ amount of resources (effectively always under-utilized), it allows for a clear decoupling of time-to-science from efficiency. One can be somewhat less efficient in a controlled fashion (higher cost, slightly more waste) to minimize time-to-science when required (in burst, so to speak) by effectively growing the computing estate available beyond the fixed footprint of local infrastructure – this is often referred to as the hybrid cloud model. You get both the benefit of efficient infrastructure use, and the ability to go beyond that when strictly required.”
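The hybrid cloud model described above, running efficiently on the fixed local footprint and growing beyond it only when time-to-science requires it, can be sketched as a simple planning rule for a batch of independent jobs. This is an illustrative assumption, not the Crick Institute's method: jobs are taken to be identical in runtime, every slot runs one job at a time, and `plan_burst` and its parameters are hypothetical names.

```python
import math


def plan_burst(jobs: int, hours_per_job: float, local_slots: int, deadline_h: float):
    """Split a batch of identical, independent jobs between local and cloud slots.

    Returns (cloud_slots, makespan_hours). Cloud slots are added only when the
    fixed local pool cannot finish the batch before deadline_h.
    """
    # Number of full job "waves" the local pool can complete before the deadline.
    local_waves = math.floor(deadline_h / hours_per_job)
    if jobs <= local_slots * local_waves:
        # Efficient path: the batch fits on local infrastructure alone.
        waves = math.ceil(jobs / local_slots)
        return 0, waves * hours_per_job
    if local_waves == 0:
        raise ValueError("deadline is shorter than a single job")
    # Burst path: rent just enough extra slots to finish within the deadline.
    total_slots = math.ceil(jobs / local_waves)
    waves = math.ceil(jobs / total_slots)
    return total_slots - local_slots, waves * hours_per_job


if __name__ == "__main__":
    print(plan_burst(100, 1.0, 10, deadline_h=20))  # fits locally, no burst
    print(plan_burst(100, 1.0, 10, deadline_h=5))   # needs extra cloud slots
```

The design choice mirrors the quote: the local pool is always used first, and extra (more expensive) cloud capacity is bought only in the amount needed to meet the deadline, trading a controlled loss of efficiency for shorter time-to-science.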