In this video from the 4th Annual MVAPICH User Group, DK Panda from Ohio State University presents: Overview of the MVAPICH Project and Future Roadmap. “This talk will provide an overview of the MVAPICH project (past, present and future). Future roadmap and features for upcoming releases of the MVAPICH2 software family (including MVAPICH2-X, MVAPICH2-GDR, MVAPICH2-Virt, MVAPICH2-EA and MVAPICH2-MIC) will be presented. Current status and future plans for OSU INAM, OEMT and OMB will also be presented.”

IBM Ramps Up Apache Spark at SC15

“What we’re previewing here today is a capability to have an overarching software, resource scheduler and workflow manager that takes all of these disparate sources and unifies them into a single view, making hundreds or thousands of computers look like one, and allowing you to run multiple instances of Spark. We have a very strong Spark multitenancy capability, so you can run multiple instances of Spark simultaneously, and you can run different versions of Spark, so you don’t obligate your organization to upgrade in lockstep.”

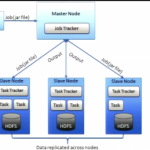

Using PBS to Schedule MapReduce Jobs Accessing OrangeFS

Using PBS Professional and a customized version of myHadoop has allowed researchers at Clemson University to submit their own Hadoop MapReduce jobs on the “Palmetto Cluster”. Now, researchers at Clemson can run their own dedicated Hadoop daemons in a PBS-scheduled environment as needed.