Today GCS in Germany announced that its new Hornet supercomputer at HLRS is ready for extreme-scale computing challenges.

To test its mettle, Hornet successfully completed six “XXL projects” from computationally demanding scientific fields such as planetary research, climatology, environmental chemistry, and aerospace. The six XXL projects each used between 54,000 and all 94,646 of Hornet’s available compute cores, with results that more than satisfied the HLRS HPC experts and the scientific users.

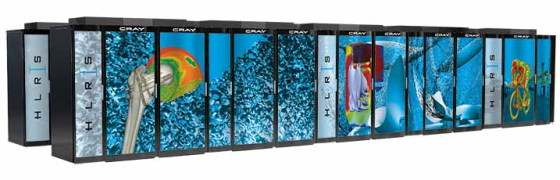

The new HLRS supercomputer Hornet, a Cray XC40 system that in its current configuration delivers a peak performance of 3.8 petaflops, was declared “up and running” in late 2014. During its early installation phase, before the machine was made available for general use, HLRS invited scientists and researchers from across Germany and from various fields to apply for large-scale simulation projects on Hornet. The goal was to demonstrate that all the hardware and software components required to run highly complex, extreme-scale compute jobs smoothly were ready for the most demanding challenges.

The six selected HLRS XXL projects were the following:

(1) Project: Convection Permitting Channel Simulation

Institute of Physics and Meteorology, Universität Hohenheim

- 84,000 compute cores

- 84 machine hours

- 330 TB of data + 120 TB for post-processing

Objective: To run a latitude-belt simulation around the Earth at a resolution of a few kilometers, over a period long enough to cover various extreme events in the Northern Hemisphere, and to study the model's performance.

Project Team and Scientific Contact:

Prof. Dr. Volker Wulfmeyer, Dr. Kirsten Warrach-Sagi, Dr. Thomas Schwitalla

e-mail: volker.wulfmeyer@uni-hohenheim.de

(2) Project: Direct Numerical Simulation of a Spatially-Developing Turbulent Boundary Layer Along a Flat Plate

Institute of Aerodynamics and Gas Dynamics (IAG), Universität Stuttgart

- 93,840 compute cores

- 70 machine hours

- 30 TB of data

Objective: To conduct a direct numerical simulation of the complete transition of a boundary-layer flow to fully developed turbulence along a flat plate, up to high Reynolds numbers.

Project Team and Scientific Contact:

Prof. Dr. Claus Dieter Munz, Ing. Muhammed Atak

e-mail: atak@iag.uni-stuttgart.de

(3) Project: Prediction of the Turbulent Flow Field Around a Ducted Axial Fan

Institute of Aerodynamics, RWTH Aachen University

- 92,000 compute cores

- 110 machine hours

- 80 TB of data

Objective: To better understand the development of vortical flow structures and the turbulence intensity in the tip-gap of a ducted axial fan.

Project Team and Scientific Contact:

Univ.-Prof. Dr.-Ing. Wolfgang Schröder, Dr.-Ing. Matthias Meinke, Onur Cetin

e-mail: office@aia.rwth-aachen.de

(4) Project: Large-Eddy Simulation of a Helicopter Engine Jet

Institute of Aerodynamics, RWTH Aachen University

- 94,646 compute cores

- 300 machine hours

- 120 TB of data

Objective: To analyze the impact of internal perturbations caused by geometric variations on the flow field and the acoustic field of a helicopter engine jet.

Project Team and Scientific Contact:

Univ.-Prof. Dr.-Ing. Wolfgang Schröder, Dr.-Ing. Matthias Meinke, Onur Cetin

e-mail: office@aia.rwth-aachen.de

(5) Project: Ion Transport by Convection and Diffusion

Institute of Simulation Techniques and Scientific Computing, Universität Siegen

- 94,080 compute cores

- 5 machine hours

- 1.1 TB of data

Objective: To better understand and optimize the electrodialysis desalination process.

Project Team and Scientific Contact:

Prof. Dr.-Ing. Sabine Roller, Harald Klimach, Kannan Masilamani

e-mail: harald.klimach@uni-siegen.de

(6) Project: Large Scale Numerical Simulation of Planetary Interiors

German Aerospace Center/Technische Universität Berlin

- 54,000 compute cores

- 3 machine hours

- 2 TB of data

Objective: To study how heat-driven convection within a planet affects its evolution: how the surface is influenced, how conditions for life are maintained, how plate tectonics work, and how quickly a planet cools.

Project Team and Scientific Contact:

Prof. Dr. Doris Breuer, Dr. Christian Hüttig, Dr. Ana-Catalina Plesa, Dr. Nicola Tosi

e-mail: ana.plesa@dlr.de