According to comments made at Nvidia’s GPU Technology Conference (GTC) last week, the Pascal, next generation GPU is now just a year away. Although that may come as little surprise to some, the GTC presented some new information on the features that will be introduced and on performance.

According to comments made at Nvidia’s GPU Technology Conference (GTC) last week, the Pascal, next generation GPU is now just a year away. Although that may come as little surprise to some, the GTC presented some new information on the features that will be introduced and on performance.

As reported here, the form of machine learning termed ‘Deep learning’ was a key focus at this year’s GTC. Nvidia claimed that its new Pascal architecture will accelerate deep learning applications ten times faster than the speed of its current-generation Maxwell processors.

The Pascal architecture includes three new design features; the first of which is mixed-precision computing. Mixed-precision computing enables Pascal GPUs to compute at 16-bit floating point accuracy at twice the rate of 32-bit floating point accuracy. Again, deep learning is an important application here because increased floating point performance particularly benefits classification and convolution which are two key activities in deep learning.

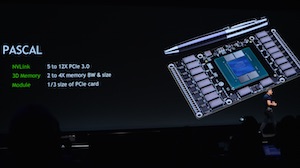

The new architecture will also include 3D memory, which will provide up to three times the bandwidth and nearly three times the frame buffer capacity of Maxwell GPUs. By reducing the memory bandwidth constraints, which limit the speed at which data can be delivered to the GPU; developers can build larger neural networks and accelerate the bandwidth-intensive portions of deep learning applications.

Pascal will have its memory chips stacked on top of each other, and placed adjacent to the GPU, much closer than previous architectures. This reduces the distance that bits need to travel from memory to GPU resulting in accelerated communication and better power efficiency.

This is in addition to up to 32 GB of memory — 2.7 times more than the newly launched Nvidia flagship, the GeForce GTX Titan X. One of the most anticipated features of the new Pascal architecture is NVLink – Nvidia’s high-speed interconnect, which links together two or more GPUs — which should increase the gains seen from the increase in available memory and bandwidth.

NVLink allows for double the number of GPUs in a system to work together in deep learning computations. In addition, CPUs and GPUs can connect in new ways to enable more flexibility and energy efficiency in server design compared to PCI-E.

The technology will allow data to move between GPUs and CPUs five to 12 times faster than they can with today’s current standard, PCI-Express. As deep learning, big data, and data-centric computing become ever more prevalent, increasing the memory bandwidth will dramatically improve performance of data intensive applications.

This story appears here as part of a cross-publishing agreement with Scientific Computing World.