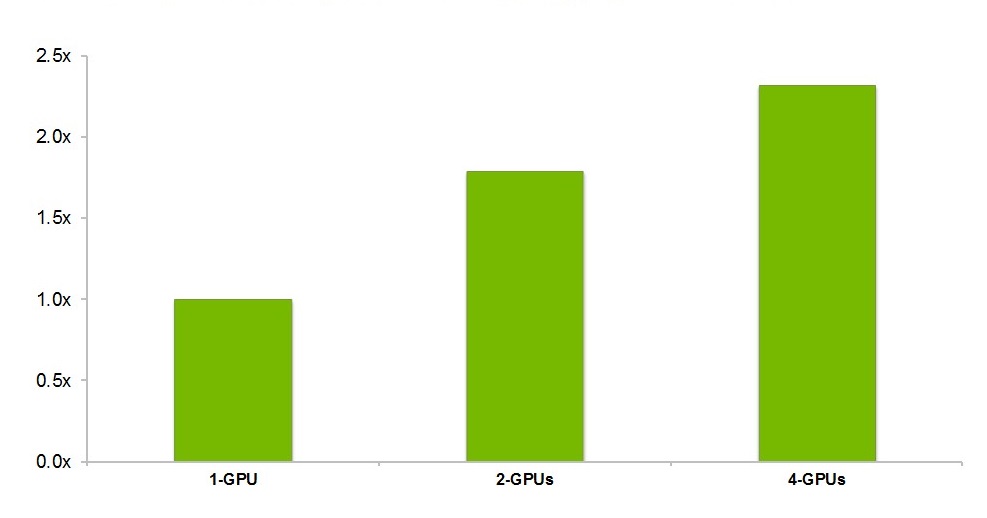

DIGITS 2 Preview performance vs. previous version on GeForce GTX TITAN X GPUs in an NVIDIA DIGITS DevBox system

Today Nvidia updated its GPU-accelerated deep learning software to speed up neural network training.

The new releases of DIGITS and cuDNN provide significant performance enhancements that help data scientists create more accurate neural networks through faster model training and more sophisticated model design.

For data scientists, DIGITS 2 now delivers automatic scaling of neural network training across multiple high-performance GPUs. This can double the speed of deep neural network training for image classification compared to a single GPU. The new automatic multi-GPU scaling capability in DIGITS 2 maximizes the available GPU resources by automatically distributing the deep learning training workload across all of the GPUs in the system. Using DIGITS 2, Nvidia engineers trained the well-known AlexNet neural network model more than two times faster on four Nvidia Maxwell architecture-based GPUs, compared to a single GPU. Early customers are reporting even better results.

“Training one of our deep nets for auto-tagging on a single Nvidia GeForce GTX TITAN X takes about sixteen days, but using the new automatic multi-GPU scaling on four TITAN X GPUs the training completes in just five days,” said Simon Osindero, A.I. architect at Yahoo’s Flickr. “This is a major advantage and allows us to see results faster, as well as letting us more extensively explore the space of models to achieve higher accuracy.”
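To put the Flickr figures in perspective, the short sketch below works out the implied speedup and the multi-GPU scaling efficiency. It uses only the numbers quoted above (roughly sixteen days on one GPU, five days on four); the function names are illustrative and are not part of DIGITS or cuDNN, and because the sixteen-day figure is approximate, the results are too.

```python
def speedup(single_gpu_days: float, multi_gpu_days: float) -> float:
    """Ratio of single-GPU training time to multi-GPU training time."""
    return single_gpu_days / multi_gpu_days


def scaling_efficiency(single_gpu_days: float, multi_gpu_days: float, num_gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    return speedup(single_gpu_days, multi_gpu_days) / num_gpus


# Figures from the Flickr quote: about 16 days on one TITAN X, 5 days on four.
flickr_speedup = speedup(16, 5)
flickr_efficiency = scaling_efficiency(16, 5, 4)

print(f"{flickr_speedup:.1f}x speedup, {flickr_efficiency:.0%} scaling efficiency")
# prints "3.2x speedup, 80% scaling efficiency"
```

By the same measure, the "more than two times faster" AlexNet result on four GPUs corresponds to a scaling efficiency of at least 50 percent.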

For deep learning researchers, cuDNN 3 features optimized data storage in GPU memory for the training of larger, more sophisticated neural networks. cuDNN 3 also provides higher performance than cuDNN 2, enabling researchers to train neural networks up to two times faster on a single GPU. The new cuDNN 3 library is expected to be integrated into forthcoming versions of the deep learning frameworks Caffe, Minerva, Theano and Torch, which are widely used to train deep neural networks.

“High-performance GPUs are the foundational technology powering deep learning research and product development at universities and major web-service companies,” said Ian Buck, vice president of Accelerated Computing at NVIDIA. “We’re working closely with data scientists, framework developers and the deep learning community to apply the most powerful GPU technologies and push the bounds of what’s possible.”

Availability

The DIGITS 2 Preview release is available today as a free download for Nvidia registered developers. To learn more or download, visit the DIGITS website.

The cuDNN 3 library is expected to be available in major deep learning frameworks in the coming months. To learn more, visit the cuDNN website.