Modern server architectures rely on memory locality for optimal performance. Data needs to be organized that allows the CPU’s to process the data, without having to wait. If the data is not structured in a cache friendly way, the CPU will have to wait. What if the application needs fast access to neighboring data, rather than elements in a row, or must transverse the data multiple times?

Modern server architectures rely on memory locality for optimal performance. Data needs to be organized that allows the CPU’s to process the data, without having to wait. If the data is not structured in a cache friendly way, the CPU will have to wait. What if the application needs fast access to neighboring data, rather than elements in a row, or must transverse the data multiple times?

To improve data locality of multi-dimensional data, using a Morton ordering, can help in many cases. When data is held in cache or in cache lines, performance will be better than if data is held in different cache lines. On the Intel Xeon Phi coprocessor, a single cache line is 64 bytes, enough to hold 16 single precision floating point values. But what happens when the data is not contiguous?

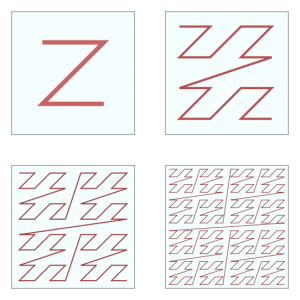

The Morton order is a mapping of multidimensional data to one dimension that preserves locality of the data. This is also known as Z-order. When using this ordering, for example in a 16 x 16 matrix, each of the 4 sub matrices can fit into a single cache line. For example, when transposing a simple matrix, if each of the sub-matrices are transposed themselves, then transposing the 4 x 4 matrices will lead to the full matrix transpose. When dealing with large matrices (1024 x 1024 and larger), speedups by using a Morton ordering compared to a naïve implementation can result in up to 11 times the performance on the Intel Xeon Phi coprocessor. When using multiple threads (which the Intel Xeon Phi is designed for), using the Morton ordering, performance is up to 2.44 times faster for a large matrix transpose. Even on an Intel Xeon CPU, by using up to 32 threads, comparing the naïve implementation to one using Morton ordering, the speedup was up to over 4X while running a 32768 x 32768 matrix transpose.

Another example of using data grouping is when running an application that uses a large matrix multiply function. Using a combination of new ordering and the Intel MIC compiler directives, performance on the Intel Xeon Phi coprocessor was up to 36 X faster, and on the Intel Xeon CPU was up to 29 X faster.

By using Morton ordering as an alternative to row-major or column-major data storage, significant speedups can be achieved on the Intel Xeon Phi coprocessor or Intel Xeon CPU when performing matrix multiplies or matrix transposes. Morton reordering was effective on either setup. By doing this reordering of the data, maximum performance is achieved due to cache locality. Taking advantage of cache lines will greatly speed up performance of application codes.

Source: Intel, USA.

Turn Big Research into Big Results

Accelerate data analysis with Intel® Parallel Studio XE.