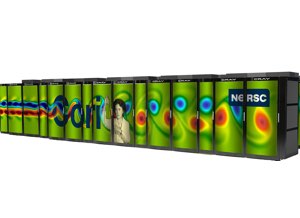

NERSC reports that it has selected a number of HPC research projects to participate in the center’s new Burst Buffer Early User Program. For the first time, users will be able to test and run their codes using the new Burst Buffer feature on the center’s newest supercomputer, Cori.

“Burst Buffers have the potential to transform science on supercomputers: removing I/O bottlenecks, enabling new workflows and bringing together data analysis and simulations,” said Wahid Bhimji, a data architect at NERSC who is co-leading the Cori Early User Programs. “However, this is a brand new technology, offering a new way of working and bringing challenges from software to scheduling. So it is really important and exciting to be able to shape the use of this technology in collaboration with these important science projects.”

Cori Phase 1, recently installed in the new Computational Research and Theory building at Berkeley Lab, is a Cray XC system based on the Intel Haswell multi-core processor. It includes a number of new features designed to support data-intensive science, including the Burst Buffer. Based on the Cray DataWarp I/O accelerator, the Burst Buffer is designed to enhance I/O performance and adds a layer of non-volatile storage that sits between the processors’ memory and the parallel file system.

NERSC’s Burst Buffer Early User Program is one of several programs NERSC has rolled out this year to help users get up and running on Cori as soon as possible and tune the system to meet science needs. Proposals were solicited beginning in early August; selection criteria included scientific merit, computational challenges and broad use of Burst Buffer data features, such as I/O improvements, checkpointing, workflow improvements, data staging and visualization. The selected projects reflect the range of the six program offices in the DOE Office of Science and the breadth of scientific computing the center supports.

“We’re very happy with the response to the Burst Buffer Early User Program call,” said Deborah Bard, a data architect at NERSC who is also co-leading the Cori Early User Programs. “In fact, we decided to support more applications than we’d originally anticipated.” Some applications already had Laboratory Directed Research and Development funding at Berkeley Lab and existing support from NERSC staff. Others will be given earlier access to the Burst Buffer to enable their projects, though without dedicated NERSC support.

NERSC Burst Buffer early use cases:

NERSC-supported: New Efforts

- Nyx/BoxLib cosmology simulations, Ann Almgren, Berkeley Lab (HEP)

- Phoenix: 3D atmosphere simulator for supernovae, Eddie Baron, University of Oklahoma (HEP)

- Chombo-Crunch + VisIt for carbon sequestration, David Trebotich, Berkeley Lab (BES)

- Sigma/UniFam/Sipros bioinformatics codes, Chongle Pan, Oak Ridge National Laboratory (BER)

- XGC1 for plasma simulation, Scott Klasky, Oak Ridge National Laboratory (FES)

- PSANA for LCLS, Amadeo Perazzo, SLAC (BES/BER)

NERSC-supported: Existing Engagements

- ALICE data analysis, Jeff Porter, Berkeley Lab (NP)

- Tractor: Cosmological data analysis (DESI), Peter Nugent, Berkeley Lab (HEP)

- VPIC-IO performance, Suren Byna, Berkeley Lab (HEP/ASCR)

- YODA: Geant4 sims for ATLAS detector, Vakhtang Tsulaia, Berkeley Lab (HEP)

- Advanced Light Source SPOT Suite, Craig Tull, Berkeley Lab (BES/BER)

- TomoPy for ALS image reconstruction, Craig Tull, Berkeley Lab (BES/BER)

- Kitware: VPIC/Catalyst/ParaView, Berk Geveci, Kitware (ASCR)

Early Access

- Image processing in cryo-microscopy/structural biology, Sam Li, UCSF (BER)

- htslib for bioinformatics, Joel Martin, Berkeley Lab (BER)

- Falcon genome assembler, William Andreopoulos, Berkeley Lab (BER)

- Ray/HipMer genome assembly, Rob Egan, Berkeley Lab (BER)

- HipMer, Steven Hofmeyr, Berkeley Lab (BER/ASCR)

- CESM Earth System model, John Dennis, UCAR (BER)

- ACME/UV-CDAT for climate, Dean N. Williams, Livermore Lab (BER)

- Global View Resilience with AMR, neutron transport, molecular dynamics, Andrew Chien, University of Chicago (ASCR/BES/BER)

- XRootD for Open Science Grid, Frank Wuerthwein, University of California, San Diego (HEP/ASCR)

- OpenSpeedShop/component-based tool framework, Jim Galarowicz, Krell Institute (ASCR)

- DL-POLY for materials science, Eva Zarkadoula, Oak Ridge National Laboratory (BES)

- CP2K for geoscience/physical chemistry, Chris Mundy, Pacific Northwest National Laboratory (BES)

- ATLAS simulation of ITk with Geant4, Swagato Banerjee, University of Louisville (HEP)

- ATLAS data analysis, Steve Farrell, Berkeley Lab (HEP)

- Spark, Costin Iancu, Berkeley Lab (ASCR)

Source: NERSC