In this special guest feature, Robert Roe from Scientific Computing World describes why Nvidia is in the driver’s seat for Deep Learning.

The Nvidia GPU technology Conference (GTC), held at the beginning of April in Silicon Valley, California, showcased the latest Nvidia technology – with a focus on VR and deep learning – including the introduction of a new GPU based on the Pascal architecture.

The Nvidia GPU technology Conference (GTC), held at the beginning of April in Silicon Valley, California, showcased the latest Nvidia technology – with a focus on VR and deep learning – including the introduction of a new GPU based on the Pascal architecture.

Nvidia CEO Jen-Hsun Huang’s theme for the opening keynote was based on “a new computing model.” Huang explained that Nvidia builds computing technologies for the most demanding computer users in the world and that the most demanding applications require GPU acceleration. “The computers you need aren’t run of the mill. You need supercharged computing, GPU accelerated computing” said Huang.

It did not take long to deliver this promise of supercharged computing, Huang announced a number of new technologies including the first Tesla GPU based on Nvidia’s new Pascal architecture, the Tesla P100, a development platform for autonomous cars, the DRIVE PX 2, and a deep-learning supercomputer in a box the DGX-1.

New technology for deep learning

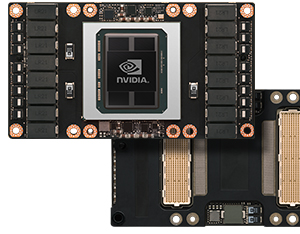

The new Tesla P100 GPU features Nvidia’s latest technologies including NV Link, HBM2 high bandwidth memory (HBM), improved programming model featuring Unified Memory and Compute Preemption, and transistors based on a 16nm FinFET manufacturing process.

The new card is the first major update to the Tesla range of GPUs since the Tesla K80, based on the Kepler architecture, was released in 2014.

With the release of every new GPU architecture, Nvidia introduces improvements to performance and power efficiency. Like previous Tesla GPUs, GP100 is composed of large numbers of graphics processing clusters (GPCs), streaming multiprocessors (SMs), and memory controllers.

GP100 achieves its increased throughput by providing six GPCs, up to 60 SMs, and eight 512-bit memory controllers (4096 bits total) per GPU. However the performance increases are not delivered through brute force alone, performance is increased not only by adding more SMs than previous GPUs but by making each SM more efficient. Each SM has 64 CUDA cores and four texture units, for a total of 3840 CUDA cores and 240 texture for each card.

A number of changes to the SM in the Maxwell architecture improved its efficiency compared to Kepler. Pascal builds on this and incorporates additional improvements that increase performance per watt even further over Maxwell. While TSMC’s 16nm Fin-FET manufacturing process plays an important role, many GPU architectural modifications were also implemented to further reduce power consumption while maintaining performance.

We’re in production now. We’ll ship it soon. First, it will show up in the cloud and then in OEMs by Q1 next year,” said Huang.

Over the last two years, the GTC keynotes, have focused on deep learning and neural networks, valuable technologies in their own right but also prerequisites for artificial intelligence. 2016 was no exception, with a lot of excitement around the new Pascal architecture but Nvidia also released two new cards based on the previous Maxwell architecture, the new Tesla M40 and M4 cards designed specifically for address deep learning applications.

One of the biggest things that ever happened in computing is AI. Five years ago, deep learning began, sparked by the availability of lots of data, the availability of the GPU, and the introduction of new algorithms” said Huang.

Using one general architecture, one general algorithm, we can tackle one problem after another. In the old traditional approach, programs were written by domain experts. Now you have a general deep learning algorithm and all you need lots of data and lots of computing power.’

The new M40 and the M4 GPUs, are being released to address this growing focus on deep learning and artificial intelligence applications. Nvidia is directly targeting these GPUs as ‘hyper-scale accelerators’ to target the growing machine learning market. More information on the use of Nvidia GPUs for machine learning applications can be found on Nvidia’s blog.

Tesla M40 is quite a beast. It’s a beast of a GPU accelerator for internet training. We have something smaller, an M4, for inferencing,” said Huang. Nvidia stated during the keynote that the Tesla M40 delivers the same model within hours versus days on CPU based compute systems.

Deep learning drives autonomous vehicles

While there are thousands of potential applications for deep learning, one of the best established today is the use of deep learning in autonomous driving systems – driverless cars. While these systems are not yet available to the public, Nvidia has been working with car manufacturers and software developers to design systems which can effectively simulate, not only driving, but also the complex decision making needed for autonomous vehicles to function in the real world, with real world complications and hazards.

While there are thousands of potential applications for deep learning, one of the best established today is the use of deep learning in autonomous driving systems – driverless cars. While these systems are not yet available to the public, Nvidia has been working with car manufacturers and software developers to design systems which can effectively simulate, not only driving, but also the complex decision making needed for autonomous vehicles to function in the real world, with real world complications and hazards.

The DRIVE PX 2 is Nvidia’s latest hardware platform for autonomous vehicles. With a much smaller form factor, the Nvidia system replaces an entire trunk full of computers that were required for previous iterations of the hardware platform.

DRIVE PX 2 provides a significant increase in processing power for deep learning, using two Tegra processors alongside two next-generation Pascal GPUs. Nvidia claims that the system will be capable of 24 trillion Deep Learning operations per second – specialised instructions that accelerate the math used in deep learning network inference. That’s more than 10 times the computational power than the previous-generation system.

Huang said: “We took all of this horsepower and shrunk it into DRIVE PX 2 with two Tegra processors and two Pascal next-gen processors. This four chip configuration will give you a supercomputing cluster that can connect 12 cameras, plus lidar, and fuse it all in real time.”

One of the key technologies for this project is the mapping platform, which Huang explains is created from the data taken from individual DRIVE PX 2 systems and assimilating that information into a single high-definition map. “You stream up point clouds, which are accumulated and compressed in the cloud to create one big high definition map. You’ll have DRIVE PX 2 in the car and DGX-1 in the cloud.”

The final piece of the deep learning puzzle for Nvidia is the DGX-1, the world’s first purpose-built system for deep learning. The DGX-1 is the first system built with Pascal powered Tesla P100 accelerators, interconnected with Nvidia NVLink, a high speed interconnect system developed for the Pascal architecture.

It’s engineered top to bottom deep learning, 170 teraflops in a box, 2 petaflops in a rack, with eight Tesla P100s” stated Huang. “It’s the densest computer node ever made.”

Huang compared the performance of the DGX-1 to a single Xeon processor. “A dual Xeon processor has three teraflops, this has 170 teraflops. With a dual Xeon it takes 150 hours to train Alexnet; with DGX -1 it takes two hours.”

Huang concluded: “Last year, I predicted a year from now, I’d expect a 10x speed up year over year. I’m delighted to say we have a 12x speedup year over year.”

This combination of new technology has a specific focus on driving the development of deep learning technology, having established new levels of performance in hardware development it is now time to see what the software developers can accomplish with this new hardware.

This story appears here as part of a cross-publishing agreement with Scientific Computing World.