In this TACC Podcast, host Jorge Salazar interviews John McCalpin, a Research Scientist in the High Performance Computing Group at the Texas Advanced Computing Center and Co-Director of the Advanced Computing Evaluation Laboratory at TACC.

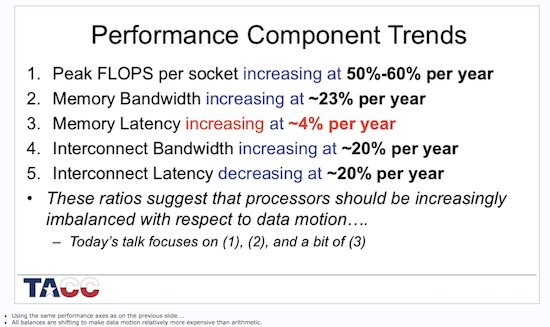

Twenty-five years ago, as an oceanographer at the University of Delaware, Dr. McCalpin developed the STREAM benchmark. It is still widely used as a simple synthetic benchmark program that measures sustainable memory bandwidth and the corresponding computation rate for simple vector kernels. As the father of the STREAM benchmark, Dr. McCalpin was invited to speak at SC16; his talk was entitled “Memory Bandwidth and System Balance in HPC Systems.”
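STREAM's reported bandwidth comes from a simple accounting rule: each kernel is credited with the bytes of the arrays it reads and writes, divided by the best measured time. The Python sketch below illustrates that rule for the four STREAM kernels (Copy, Scale, Add, Triad), assuming 8-byte double-precision elements, which is STREAM's default. The helper names (`bytes_moved`, `bandwidth_gbs`, `triad`) are hypothetical, and the pure-Python loop only shows what Triad computes; it is far too slow to measure real memory bandwidth, which is why STREAM itself is written in C and Fortran.

```python
# Sketch of STREAM-style byte accounting. Assumes 8-byte doubles (STREAM's
# default element type). Like STREAM, it counts only the arrays each kernel
# reads and writes and ignores any extra "write-allocate" traffic.

BYTES_PER_WORD = 8  # sizeof(double)

# Array elements moved per loop iteration for each STREAM kernel.
WORDS_PER_ITERATION = {
    "copy": 2,   # c[i] = a[i]
    "scale": 2,  # b[i] = q * c[i]
    "add": 3,    # c[i] = a[i] + b[i]
    "triad": 3,  # a[i] = b[i] + q * c[i]
}


def bytes_moved(kernel: str, n: int) -> int:
    """Bytes credited to one pass of `kernel` over arrays of length n (hypothetical helper)."""
    return WORDS_PER_ITERATION[kernel] * BYTES_PER_WORD * n


def bandwidth_gbs(kernel: str, n: int, seconds: float) -> float:
    """Bandwidth in GB/s (1 GB = 1e9 bytes) for one timed pass (hypothetical helper)."""
    return bytes_moved(kernel, n) / seconds / 1e9


def triad(a: list[float], b: list[float], c: list[float], q: float) -> None:
    """Reference Triad kernel, a[i] = b[i] + q * c[i], updating `a` in place."""
    for i in range(len(a)):
        a[i] = b[i] + q * c[i]


if __name__ == "__main__":
    # Hypothetical timing: a 20-million-element Triad pass that took 0.02 s.
    print(f"{bandwidth_gbs('triad', 20_000_000, 0.02):.1f} GB/s")
```

STREAM itself repeats each kernel several times, keeps the minimum time, and reports the result in MB/s; the sketch converts to GB/s only for readability.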

“The most important thing for an application-oriented scientist or developer to understand is whether or not their workloads are memory-bandwidth intensive. It turns out that this is not as obvious as one might think… Due to the complexity of the systems and the difficulty of interpreting hardware performance counters, these can be tricky issues to understand. If you are in an area that tends to use high bandwidth, you can get some advantage from system configurations. It’s fairly easy to show, for example, that if you’re running medium-to-high-bandwidth codes, you don’t want to buy the maximum number of cores or the maximum processor frequency, because those carry quite a premium and don’t deliver any more bandwidth than less expensive processors. You can sometimes get additional improvements through algorithmic changes that in some sense don’t look optimal because they involve more arithmetic. But if they don’t involve more memory traffic, the arithmetic may be very close to free, and you may be able to get an improved quality of solution.

“In the long run, if you need orders of magnitude more bandwidth than is currently available, there’s a set of technologies, sometimes referred to as processor in memory (I call it processor at memory), that involve cheaper processors distributed out adjacent to the memory chips. The processors are cheaper, simpler, and lower power. That could allow a significant reduction in the cost to build the systems, which allows you to build them a lot bigger and therefore deliver significantly higher memory bandwidth. That’s a very revolutionary change.”
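Dr. McCalpin’s remark that extra arithmetic can be “very close to free” when it adds no memory traffic can be made concrete with a bound-and-bottleneck estimate in the spirit of the roofline model. This is a swapped-in illustration, not his own method: a kernel is assumed to take at least as long as its arithmetic at peak rate and at least as long as its memory traffic at sustained bandwidth. The machine figures (1 TFLOP/s peak, 100 GB/s bandwidth) and the function names are hypothetical.

```python
# Bound-and-bottleneck (roofline-style) estimate. All machine numbers are
# hypothetical; real systems add caches, latency, and imperfect overlap,
# so this is only a lower bound on run time.


def estimated_time(flops: float, traffic_bytes: float,
                   peak_flops: float, bandwidth: float) -> float:
    """Lower-bound run time: the slower of compute time and memory time."""
    compute_time = flops / peak_flops
    memory_time = traffic_bytes / bandwidth
    return max(compute_time, memory_time)


def arithmetic_intensity(flops: float, traffic_bytes: float) -> float:
    """Floating-point operations per byte of memory traffic."""
    return flops / traffic_bytes


if __name__ == "__main__":
    n = 100_000_000      # array length (hypothetical)
    traffic = 24.0 * n   # Triad-like: two reads + one write of 8-byte doubles
    peak = 1.0e12        # hypothetical peak: 1 TFLOP/s
    bw = 1.0e11          # hypothetical sustained bandwidth: 100 GB/s

    base = estimated_time(2.0 * n, traffic, peak, bw)            # 2 flops/element
    richer = estimated_time(8.0 * n, traffic, peak, bw)          # 4x arithmetic, same traffic
    faster_cpu = estimated_time(2.0 * n, traffic, 2 * peak, bw)  # pricier, higher-peak CPU

    print(f"baseline:       {base:.4f} s")
    print(f"4x arithmetic:  {richer:.4f} s")
    print(f"2x peak FLOP/s: {faster_cpu:.4f} s")
    print(f"intensity:      {arithmetic_intensity(2.0 * n, traffic):.3f} flop/byte")
```

Under these assumptions all three cases are memory-bound, so quadrupling the arithmetic or doubling peak FLOP/s leaves the estimate unchanged; only more bandwidth would shorten it.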