In this AI Podcast, Bob Bond from Nvidia and Mike Senese from Make magazine discuss the do-it-yourself movement in artificial intelligence. “Deep learning isn’t just for research scientists anymore. Hobbyists can use consumer grade GPUs and open-source DNN software to tackle common household tasks from ant control to chasing away stray cats.”

Archives for December 2016

Video: Advances and Challenges in Wildland Fire Monitoring and Prediction

Janice Coen from NCAR gave this Invited Talk at SC16. “The past two decades have seen the infusion of technology that has transformed the understanding, observation, and prediction of wildland fires and their behavior, as well as provided a much greater appreciation of its frequency, occurrence, and attribution in a global context. This talk will highlight current research in integrated weather – wildland fire computational modeling, fire detection and observation, and their application to understanding and prediction.”

The Festivus Airing of Grievances from Radio Free HPC

In this podcast, the Radio Free HPC team honors the Festivus tradition of the annual Airing of Grievances. Our random gripes include the need for a better HPC benchmark suite, the missed opportunity for ARM servers, the skittish battery in the new MacBook Pro, and the lack of an industry standards body for cloud computing.

SAGE Project Looks to Percipient Storage for Exascale

“The SAGE project, which incorporates research and innovation in hardware and enabling software, will significantly improve the performance of data I/O and enable computation and analysis to be performed more locally to data wherever it resides in the architecture, drastically minimizing data movements between compute and data storage infrastructures. With a seamless view of data throughout the platform, incorporating multiple tiers of storage from memory to disk to long-term archive, it will enable API’s and programming models to easily use such a platform to efficiently utilize the most appropriate data analytics techniques suited to the problem space.”

Thomas Sterling Presents: HPC Runtime System Software for Asynchronous Multi-Tasking

Thomas Sterling presented this Invited Talk at SC16. “Increasing sophistication of application program domains combined with expanding scale and complexity of HPC system structures is driving innovation in computing to address sources of performance degradation. This presentation will provide a comprehensive review of driving challenges, strategies, examples of existing runtime systems, and experiences. One important consideration is the possible future role of advances in computer architecture to accelerate the likely mechanisms embodied within typical runtimes. The talk will conclude with suggestions of future paths and work to advance this possible strategy.”

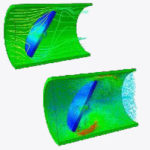

Nor-Tech Rolls Out HPC Clusters Integrated with Abaqus 2017

Today Nor-Tech announced that it is integrating the latest release of the Abaqus simulation platform into its leading-edge HPC clusters. “We have been working with SIMULIA for many years,” said Nor-Tech President and CEO David Bollig. “While this relationship has been beneficial for both companies, the real winners are our clients because they are able to fully leverage the power of our clusters with Abaqus.”

Shaheen Supercomputer at KAUST Completes Trillion-Cell Reservoir Simulation

Over at KAUST News, Nicholas G. Demille writes that the Shaheen supercomputer has completed the world’s first trillion-cell reservoir simulation. A Saudi Aramco research team led by Aramco Fellow Ali Dogru ran the simulation on Shaheen using the company’s proprietary TeraPOWERS software. The Aramco researchers were supported by a team of specialists from the KAUST Supercomputing Core Lab, and the work produced imagery so detailed that it changed the face of natural resource exploration.

Podcast: Intel Invests Upstream to Accelerate AI Innovation

In this Intel Chip Chat, Doug Fisher from Intel describes the company’s efforts to accelerate innovation in artificial intelligence. “Fisher talks about Intel’s upstream investments in academia and open source communities. He also highlights efforts including the launch of the Intel Nervana AI Academy aimed at developers, data scientists, academia, and startups that will broaden participation in AI. Additionally, Fisher reports on Intel’s engagements with open source ecosystems to optimize the performance of the most-used AI frameworks on Intel architecture.”

Video: The Materials Project – A Google of Materials

“The Materials Project is harnessing the power of supercomputing together with state-of-the-art quantum mechanical theory to compute the properties of all known inorganic materials and beyond, design novel materials and offer the data for free to the community together with online analysis and design algorithms. The current release contains data derived from quantum mechanical calculations for over 60,000 materials and millions of properties.”

Building for the Future Aurora Supercomputer at Argonne

“Argonne National Labs has created a process to assist in moving large applications to a new system. Their current HPC system, Mira will give way to the next generation system, Aurora, which is part of the collaboration of Oak Ridge, Argonne, and Livermore (CORAL) joint procurement. Since Aurora contains technology that was not available in Mira, the challenge is to give scientists and developers access to some of the new technology, well before the new system goes online. This allows for a more productive environment once the full scale new system is up.”