The Pittsburgh Supercomputing Center (PSC) has completed its Phase 2 Upgrade of the Bridges supercomputer. According to PSC, the machine is now available for research allocations to the national scientific community.

“Bridges’ new nodes add large-memory and GPU resources that enable researchers who have never used high-performance computing to easily scale their applications to tackle much larger analyses,” says Nick Nystrom, principal investigator in the Bridges project and Senior Director of Research at PSC. “Our goal with Bridges is to transform researchers’ thinking from ‘What can I do within my local computing environment?’ to ‘What problems do I really want to solve?’”

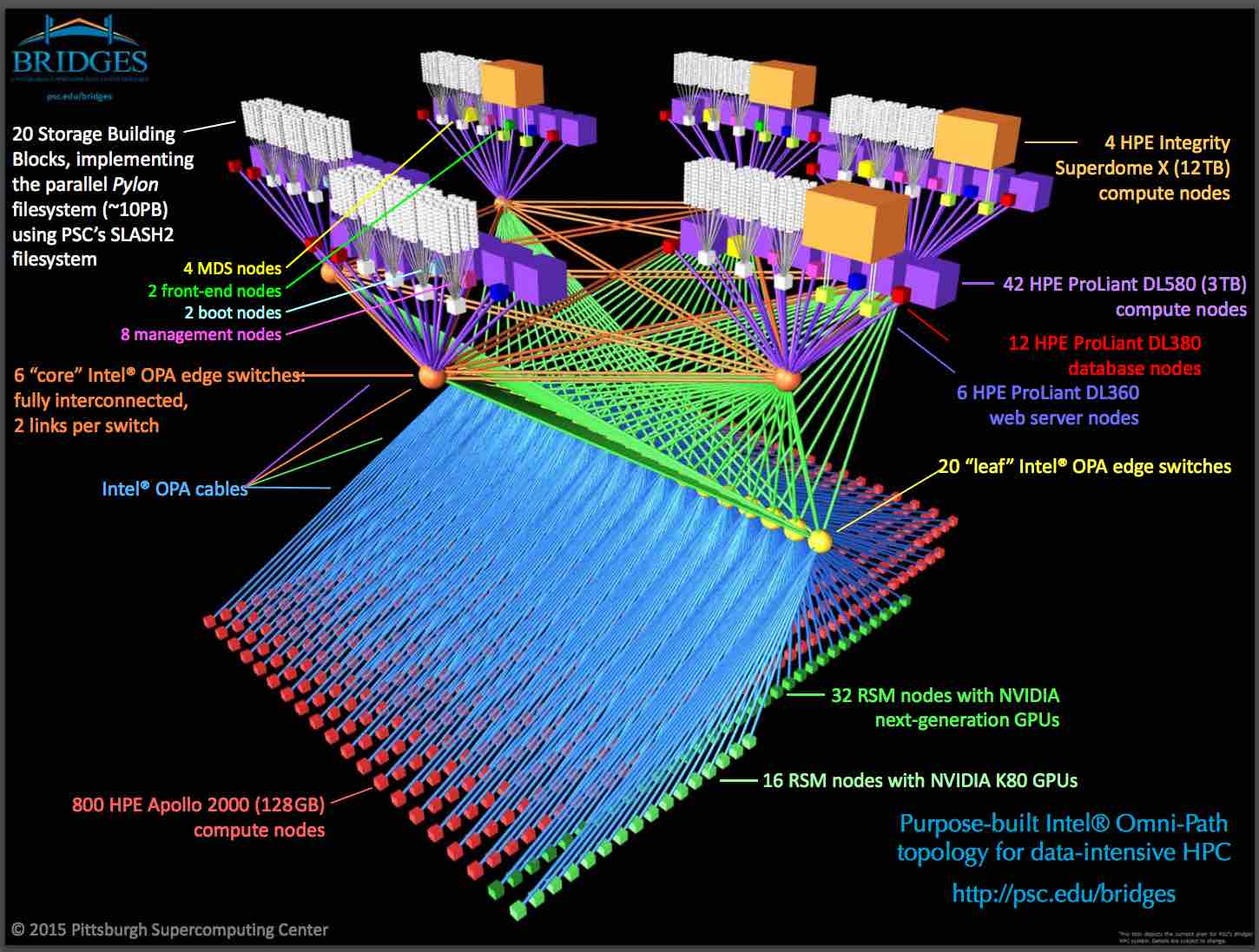

The upgrade increases the number of nodes available on the system and expands its data storage capacity. New nodes introduced in this upgrade include two with 12 TB (terabytes) of random-access memory (RAM), 34 with 3 TB of RAM, and 32 with two NVIDIA Tesla P100 GPUs each. The configuration of Bridges, which uses different types of nodes optimized for different computational tasks, represents a new step toward providing powerful “heterogeneous computing” to fields that are entirely new to high-performance computing (HPC) as well as to “traditional” HPC users.
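For readers who want the totals, the Phase 2 node counts above can be tallied in a few lines of Python. This is only a sketch of the arithmetic: the dictionary layout and field names are illustrative, and the RAM on the GPU nodes is left unset because the announcement does not state it.

```python
# Phase 2 node additions as listed in the announcement.
# Field names are illustrative; GPU-node RAM is not stated, so it is None.
new_nodes = [
    {"kind": "12 TB large-memory", "count": 2, "ram_tb": 12, "gpus_per_node": 0},
    {"kind": "3 TB large-memory", "count": 34, "ram_tb": 3, "gpus_per_node": 0},
    {"kind": "GPU (Tesla P100)", "count": 32, "ram_tb": None, "gpus_per_node": 2},
]

# Total number of nodes added in Phase 2.
total_new_nodes = sum(n["count"] for n in new_nodes)

# RAM contributed by the new large-memory nodes only.
large_memory_ram_tb = sum(
    n["count"] * n["ram_tb"] for n in new_nodes if n["ram_tb"] is not None
)

# Total number of P100 GPUs added.
total_p100_gpus = sum(n["count"] * n["gpus_per_node"] for n in new_nodes)

print(f"New nodes: {total_new_nodes}")
print(f"RAM in new large-memory nodes: {large_memory_ram_tb} TB")
print(f"New P100 GPUs: {total_p100_gpus}")
```

By this count, the upgrade adds 68 nodes, 126 TB of RAM across the large-memory nodes, and 64 P100 GPUs.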

“Bridges is a national computing resource and project uniquely configured to support ‘Big Data’ capabilities at scale as well as HPC simulations,” says Irene Qualters, the director of the NSF’s Office of Advanced Cyberinfrastructure. “It explores coherence of these two computing modalities within a reliable and robust platform for science and engineering researchers, and through its participation in XSEDE, it promotes interoperability, broad access and outreach that encourages workflows and data sharing across and beyond research cyberinfrastructure.”

Bridges offers a distinct and heterogeneous set of computing resources:

- The large-memory 12- and 3-TB nodes, featuring new “Broadwell” Intel Xeon CPUs, greatly expand Bridges’ capacity for DNA sequence assembly of large genomes and metagenomes (collections of genomes of species living in an environment); execution of applications implemented to use shared memory; and scaling analyses using popular research software packages such as MATLAB, R, and other high-productivity programming languages.

- The GPU nodes provide the research community with early access to P100 GPUs and significantly expand Bridges’ capacity for “deep learning” in artificial intelligence research and accelerating applications across a wide range of fields in the physical and social sciences and the humanities.

- The upgrade increases Bridges’ long-term storage capacity to 10 PB (petabytes, or thousands of terabytes), strengthening Bridges’ support for community data collections, advanced data management and project-specific data storage.

Together with the already operational Phase 1 hardware, the Phase 2 upgrade increases Bridges’ speed to 1.35 Pf/s (petaflops, or quadrillions of 64-bit floating-point operations per second), about 29,000 times as fast as a high-end laptop. The upgrade also expands the system’s total memory to 274 TB (trillions of bytes), about 17,500 times the RAM in a high-end laptop.
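The laptop comparisons above imply a particular baseline machine, which can be recovered by dividing the system figures by the quoted ratios. The sketch below assumes decimal units (1 TB = 10^12 bytes) and takes the ratios at face value; the variable names are illustrative, and the announcement does not say which laptop was used.

```python
# System figures and ratios quoted in the announcement.
PEAK_FLOPS = 1.35e15          # 1.35 Pf/s
TOTAL_MEMORY_BYTES = 274e12   # 274 TB, assuming decimal terabytes
SPEED_RATIO = 29_000          # "about 29,000 times as fast"
MEMORY_RATIO = 17_500         # "about 17,500 times the RAM"

# Implied "high-end laptop" baseline.
laptop_flops = PEAK_FLOPS / SPEED_RATIO
laptop_memory_bytes = TOTAL_MEMORY_BYTES / MEMORY_RATIO

print(f"Implied laptop speed:  {laptop_flops / 1e9:.1f} Gflop/s")
print(f"Implied laptop memory: {laptop_memory_bytes / 1e9:.1f} GB")
```

Under these assumptions the baseline works out to roughly 47 Gflop/s and just under 16 GB of RAM.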

Recent advances achieved on Bridges include:

- Creation of the world’s largest database of specific DNA and RNA sequences, requiring a run that used 4.8 TB of memory for 24 days, by Rachid Ounit and Chris Mason of the Weill Cornell Medical College. Their database allows rapid identification and classification of species in metagenomics samples.

- A massive assembly of 1.6 trillion base pairs of metagenomic data from an oil sands tailings pond, by Brian Couger of the University of Oklahoma. Analysis is now in progress to characterize organisms and identify new microbial phyla that may lead to improved methods for cleaning up oil spills and waste.

- Application of machine learning to quantify the impact of high-quality photos for online sharing economies, by Dokyun Lee at Carnegie Mellon University’s Tepper School of Business. Lee found substantial increases in room bookings and annual earnings, together with spillover effects at the neighborhood level.

- Popular science gateways such as Galaxy, SEAGrid and the Causal Web are in production on Bridges, allowing researchers to leverage Bridges transparently from a web browser as “HPC-Software-as-a-Service.” Many other gateways will be implemented on Bridges and/or use Bridges for all or part of their workflows.

- Bridges is now available to the IceCube South Pole Neutrino Observatory. Using a distributed software architecture, Bridges’ GPU nodes are being used to simulate photon propagation in ice, illustrating the potential of HPC data analytics to complement scientific instruments.

With Bridges fully operational, users at U.S. institutions pursuing non-proprietary research can apply for allocations on the full system via NSF’s XSEDE network of supercomputing centers. These allocations are offered at no cost, as part of XSEDE’s mission to supply advanced computing infrastructure to the nation’s research community. Users may apply for the April 2017 allocations process until January 15.

Commercial users may also acquire time on the system, via PSC’s Corporate Affiliates Program.

PSC will officially launch the Bridges system at a series of events on Jan. 27, 2017.