IBM today announced a significant new release of its PowerAI deep learning software distribution on Power Systems. The release addresses the major challenges facing data scientists and developers by simplifying development with new tools and data preparation, while dramatically reducing the time required to train AI systems from weeks to hours.

"IBM PowerAI on Power servers with GPU accelerators provides at least twice the performance of our x86 platform; everything is faster and easier: adding memory, setting up new servers and so on," said current PowerAI customer Ari Juntunen, CTO at Elinar Oy Ltd. "As a result, we can get new solutions to market very quickly, protecting our edge over the competition. We think that the combination of IBM Power and PowerAI is the best platform for AI developers in the market today. For AI, speed is everything; nothing else comes close in our opinion."

Data scientists and developers use deep learning to build applications ranging from computer vision for self-driving cars to real-time fraud detection and credit risk analysis systems. These cognitive applications consume far more compute resources than traditional applications and often overwhelm x86 systems.

The new PowerAI roadmap announced today offers four significant new features that address critical customer needs for AI system performance, effective data preparation, and enterprise-level software:

- Ease of Use: A new software tool called "AI Vision" that lets application developers with limited deep learning knowledge train and deploy deep learning models for computer vision tasks in their applications.

- Tools for data preparation: Integration with IBM Spectrum Conductor cluster virtualization software, which incorporates Apache Spark, to ease the process of transforming both unstructured and structured data sets in preparation for deep learning training.

- Decreased training time: A distributed computing version of TensorFlow, a popular open-source machine learning framework originally built by Google. This distributed version of TensorFlow runs on a virtualized cluster of GPU-accelerated servers and uses cost-efficient, high-performance computing methods to bring deep learning training time down from weeks to hours.

- Easier model development: A new software tool called "DL Insight" that helps data scientists quickly improve the accuracy of their deep learning models. The tool monitors the deep learning training process and automatically adjusts parameters for peak performance.

"Data scientists and an emerging community of cognitive developers will lead much of the innovation in the cognitive era. Our objective with PowerAI is to make their journey to AI as easy, intuitive and productive as possible," said Bob Picciano, Senior Vice President, IBM Cognitive Systems. "PowerAI reduces the frustration of waiting and increases productivity. Power Systems were designed for data and this next era of computing, in great contrast to x86 servers, which were designed for the client/server programmable era of the past."

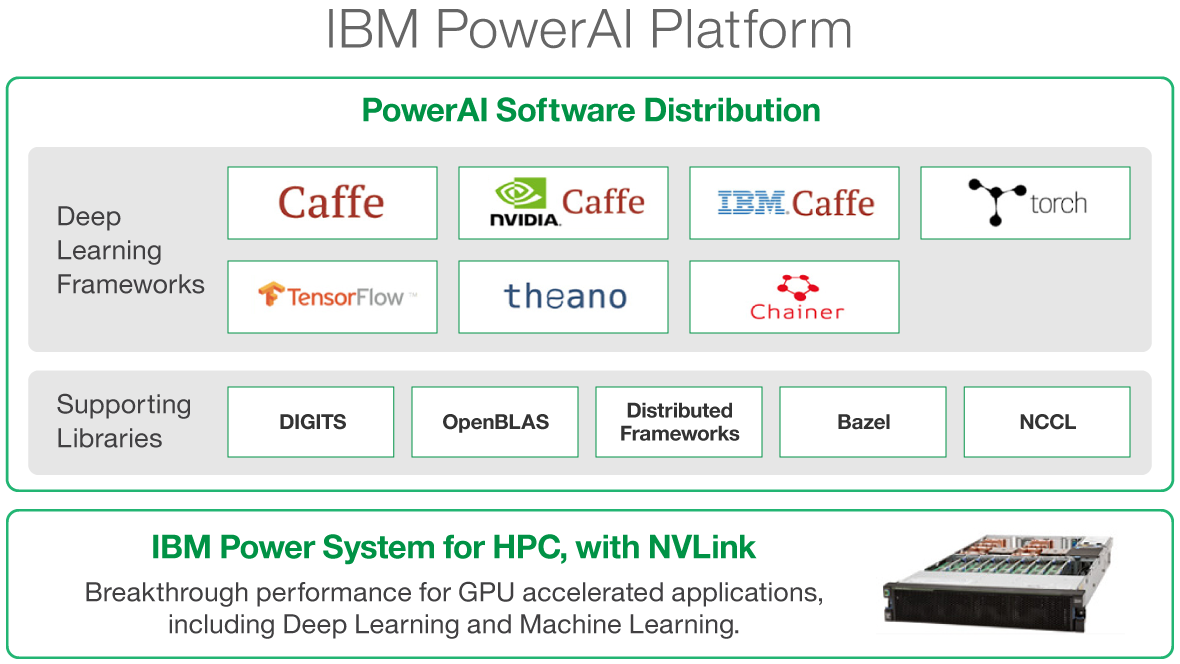

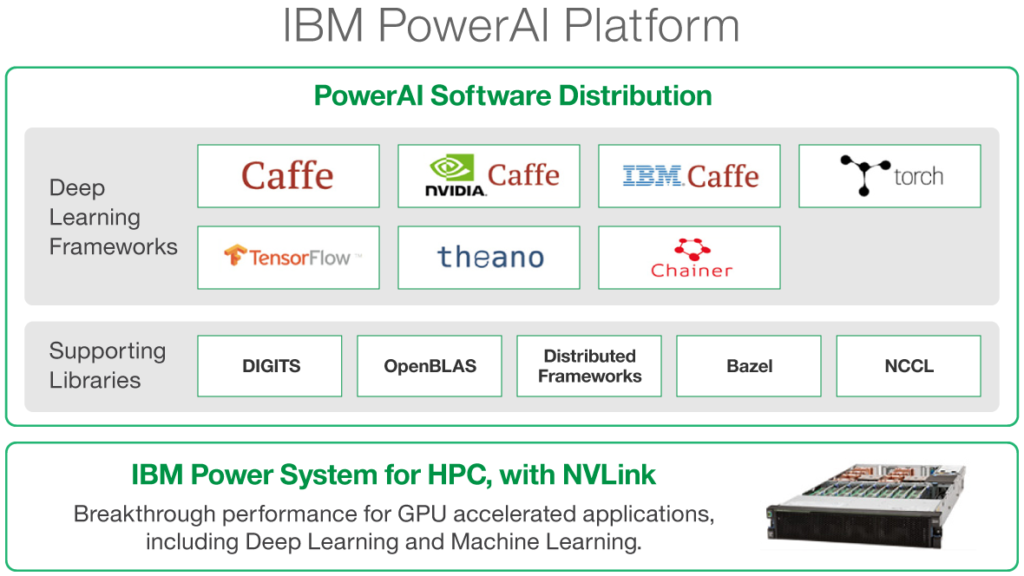

PowerAI is optimized for IBM Power Systems S822LC for HPC, which are designed for data-intensive workloads such as deep learning, machine learning and AI. These systems pair IBM POWER processors with NVIDIA GPUs and connect them through NVLink, a high-speed interface or "super-highway" between the POWER processor and the NVIDIA GPU that enables fast, easy data movement between the two processors. This exclusive coupling delivers higher performance in AI training, a key factor in developer productivity. It enables innovation at a faster pace, so developers can invent and try new models, parameter settings and data sets.