Sponsored Post

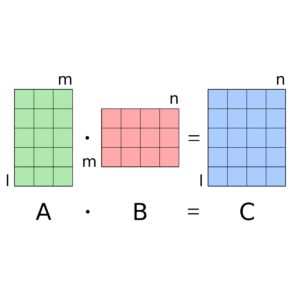

The most widely used matrix-matrix multiplication routine is GEMM (GEneral Matrix Multiplication) from the BLAS (Basic Linear Algebra Subroutines) library. These days it is also used heavily in machine learning, neural networks, and machine vision.

“The biggest performance challenge for matrix multiplication is size. To naïvely multiply two n by n matrices requires n³ floating point operations, and n² data movement. This becomes immediately unmanageable when n gets really large, which is typical in most big science HPC applications.”
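
To make the scaling in that quote concrete, the short Python sketch below counts the work in a textbook triple-loop multiply. Each of the n² output entries is a dot product of length n, i.e. n multiply-add pairs, so the total is n³ multiply-adds (2n³ flops by the usual convention), while the three matrices together hold only 3n² values. The helper names are illustrative and not part of any library.

```python
def naive_gemm_flops(n: int) -> int:
    """Flops in a naive (n x n) @ (n x n) multiply.

    Each of the n*n output entries needs n multiply-add pairs,
    counted here as 2 flops each.
    """
    return 2 * n ** 3


def naive_gemm_elements(n: int) -> int:
    """Elements of A, B, and C that must each be touched at least once."""
    return 3 * n ** 2


for n in (64, 1024, 16384):
    flops = naive_gemm_flops(n)
    elems = naive_gemm_elements(n)
    # The ratio is 2n/3: arithmetic grows one power of n faster than data.
    print(f"n={n:>6}: {flops:.3e} flops, {elems:.3e} elements, ratio {flops / elems:.1f}")
```

Because compute grows faster than data, large multiplications are limited less by the raw amount of data than by how efficiently that data is reused from cache, which is what the optimizations below target.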

It is not surprising, then, that a great deal of effort has gone into optimizing GEMM. For very large matrices, data movement and cache behavior have a significant impact on performance. The standard approach in most HPC implementations is to first copy the input data into an internal packed format and then perform the multiplication over smaller blocks, using highly optimized code with block sizes chosen to maximize cache and register usage. Packing lets more of the useful data fit into the caches and makes memory accesses contiguous, aligned, and predictable.
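
A toy version of this packed, blocked scheme can be sketched in plain Python. It rests on several simplifying assumptions: row-major nested lists, only B is packed, one block size `nb` is used for both the k and n dimensions, and there is no SIMD or register-level blocking. `pack_block` and `blocked_gemm` are hypothetical names, and tuned kernels such as MKL's are far more elaborate.

```python
from typing import List

Matrix = List[List[float]]


def pack_block(b: Matrix, k0: int, k1: int, j0: int, j1: int) -> List[float]:
    """Copy the sub-block b[k0:k1][j0:j1] into one contiguous row-major list."""
    return [b[k][j] for k in range(k0, k1) for j in range(j0, j1)]


def blocked_gemm(a: Matrix, b: Matrix, nb: int = 32) -> Matrix:
    """Compute C = A @ B, iterating over nb x nb blocks of B packed contiguously."""
    m, kdim, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for j0 in range(0, n, nb):
        j1 = min(j0 + nb, n)
        width = j1 - j0
        for k0 in range(0, kdim, nb):
            k1 = min(k0 + nb, kdim)
            # Pack this block of B once; every row of A below reuses it.
            packed = pack_block(b, k0, k1, j0, j1)
            for i in range(m):
                a_row, c_row = a[i], c[i]
                for kk in range(k1 - k0):
                    aik = a_row[k0 + kk]
                    base = kk * width
                    # Inner loop reads the packed buffer sequentially.
                    for jj in range(width):
                        c_row[j0 + jj] += aik * packed[base + jj]
    return c


print(blocked_gemm([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]], nb=1))
# [[19.0, 22.0], [43.0, 50.0]]
```

Each block of B is copied once and then reused across all m rows of A. That reuse is what makes the packing cost worthwhile when m is large, and it is exactly what stops paying off when m is small.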

Packing works well in most HPC situations, where matrices are generally large and the time spent packing the inputs is small relative to the computation. However, when matrices are smaller, as is common in some machine learning algorithms, the packing overhead becomes significant. In such cases a GEMM implementation that does not pack the input matrices can outperform a conventional implementation that does. The overhead is compounded when several multiplications share the same input matrix, because a conventional GEMM repacks that matrix on every call.
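
A rough way to see why packing hurts small problems is to compare the copies it performs with the arithmetic it enables. Packing A (m×k) and B (k×n) copies about mk + kn elements, while the multiply performs about 2mnk flops. The sketch below uses that crude cost model. Treating one copied element as comparable to one flop is our assumption and ignores cache and vectorization effects, so the numbers indicate a trend only.

```python
def packing_overhead_ratio(m: int, n: int, k: int) -> float:
    """Elements copied by packing A and B, per flop of the multiply.

    Crude model (an assumption): every element of A (m x k) and
    B (k x n) is copied once; the multiply costs 2*m*n*k flops.
    """
    copies = m * k + k * n
    flops = 2 * m * n * k
    return copies / flops


# A large square case, a small square case, and a skewed case with small m.
for shape in [(4096, 4096, 4096), (64, 64, 64), (16, 4096, 256)]:
    print(shape, f"{packing_overhead_ratio(*shape):.5f}")
```

Under this model the ratio simplifies to 1/(2m) + 1/(2n). It shrinks as the output dimensions grow and is dominated by whichever of m or n is small, which is why skewed shapes with one small dimension are the ones most penalized by packing.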

Intel® Math Kernel Library 2017 (Intel® MKL 2017) includes new GEMM kernels optimized for a variety of skewed matrix sizes. The new kernels take advantage of Intel® Advanced Vector Extensions 512 (Intel® AVX-512) and the capabilities of the latest generations of Intel® Xeon Phi™ processors. At run time, GEMM automatically chooses between the conventional kernels that pack data and the new kernels that do not. The choice is based on the characteristics of the matrices and the capabilities of the underlying processor. As a result, deep learning applications that rely on GEMM benefit from these optimizations automatically, without any code changes.
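
The article does not describe the heuristic MKL actually uses, so the sketch below only illustrates the shape of runtime dispatch. `CpuInfo`, `choose_gemm_kernel`, and the `small_dim` threshold of 64 are all hypothetical. The rule (skip packing when an output dimension is small and AVX-512 is available) is our own simplification, based on the copies-per-flop estimate of roughly 1/(2m) + 1/(2n).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuInfo:
    """Hypothetical summary of the processor features relevant to dispatch."""
    has_avx512: bool


def choose_gemm_kernel(m: int, n: int, k: int, cpu: CpuInfo,
                       small_dim: int = 64) -> str:
    """Pick a kernel family at run time (illustrative heuristic, not MKL's).

    Packing costs about (m*k + k*n) copies against 2*m*n*k flops, i.e.
    roughly 1/(2m) + 1/(2n) copies per flop, so it pays off least when
    m or n is small. k is accepted for a GEMM-like signature, but under
    this cost model the overhead does not depend on it.
    """
    if not cpu.has_avx512:
        # Assumption: in this sketch the unpacked kernels exist only for AVX-512.
        return "packed"
    if min(m, n) <= small_dim:
        return "unpacked"
    return "packed"


print(choose_gemm_kernel(16, 4096, 256, CpuInfo(has_avx512=True)))    # unpacked
print(choose_gemm_kernel(4096, 4096, 4096, CpuInfo(has_avx512=True)))  # packed
```

Keeping the decision in one function means callers see a single GEMM entry point, which is the property that lets applications benefit without code changes.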

The latest release of Intel® MKL 2017 also introduces another way to reduce the packing overhead for applications that reuse an input matrix. New APIs let an application pack an input matrix once and keep the packed copy for use in multiple matrix multiplications that share it. This way the packing cost is amortized over many GEMM calls.
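
As far as we know, MKL exposes this through the `cblas_?gemm_alloc`, `cblas_?gemm_pack`, `cblas_?gemm_compute`, and `cblas_?gemm_free` functions; check the MKL reference for their exact signatures. The pure-Python sketch below does not use that API. It only mirrors the idea with a hypothetical `PackedMatrix` class that copies a shared operand B into a column-major layout once and reuses the copy for every later multiplication, as a neural-network layer might do with its weight matrix across batches.

```python
from typing import List

Matrix = List[List[float]]


class PackedMatrix:
    """Hypothetical pack-once container for a shared right-hand operand B (k x n).

    B is stored column by column so each dot product in compute() walks a
    contiguous list. The copy is made once, in __init__.
    """

    pack_count = 0  # total number of packing operations performed

    def __init__(self, b: Matrix) -> None:
        self.k, self.n = len(b), len(b[0])
        self.cols = [[b[r][j] for r in range(self.k)] for j in range(self.n)]
        PackedMatrix.pack_count += 1

    def compute(self, a: Matrix) -> Matrix:
        """Return A @ B for any A with k columns, reusing the packed B."""
        if any(len(row) != self.k for row in a):
            raise ValueError("inner dimensions do not match")
        return [[sum(x * y for x, y in zip(row, col)) for col in self.cols]
                for row in a]


# Pack the "weights" once, then reuse them for several input batches.
weights = PackedMatrix([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])  # B is 3 x 2
print(weights.compute([[1.0, 2.0, 3.0]]))                      # [[4.0, 7.0]]
print(weights.compute([[0.0, 1.0, 0.0], [2.0, 0.0, 1.0]]))     # [[0.0, 2.0], [3.0, 1.0]]
print(PackedMatrix.pack_count)                                 # 1
```

Two multiplications ran, but B was packed only once; with N calls the packing cost is divided by N.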

These two approaches help to achieve high GEMM performance on multicore and many-core Intel® architectures, particularly for deep neural networks.

Intel® MKL 2017 includes the standard BLAS, PBLAS, LAPACK, and ScaLAPACK routines, along with specialized Deep Neural Network (DNN) functions, all optimized for the latest Intel® architectures.

Download the Intel® Math Kernel Library (Intel® MKL) for free.