This sponsored post from NVIDIA is the first of five in a series of case studies that illustrate how AI is driving innovation across businesses of every size and scale.

The Earth’s climate has changed throughout history. In the last 650,000 years, there have been seven cycles of glacial advance and retreat, with the abrupt end of the last ice age (about 11,700 years ago) marking the beginning of modern climate and the era of human civilization.

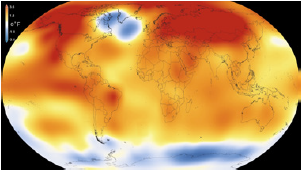

NASA: Earth’s 2016 surface temperatures were warmest on record. (Photo: climate.nasa.gov)

Most of these climate changes are attributed to very small variations in Earth’s orbit that change the amount of solar energy our planet receives. Many of the more recent changes, however, are directly related to increased amounts of carbon in the atmosphere. September 2016 was the warmest September in 136 years of modern record-keeping, according to a monthly analysis of global temperatures by scientists at the NASA Goddard Institute for Space Studies (GISS).

Given the impact of this change, it is vital to monitor the land surface from satellite images and to understand how warming affects crop yields, vegetation, and other landscapes. However, automating satellite image classification is a challenge because of the high variability inherent in satellite data and the lack of sufficient training data. Most methods rely on commercial software that is difficult to scale to the region of study (the entire globe) and that frequently runs into problems with compute- and memory-intensive processing, cost, massively parallel architectures, and machine learning automation.

Deep Learning for DeepSat

To keep a closer finger on the pulse of the Earth’s health, NASA developed DeepSat, a deep learning AI framework for satellite image classification and segmentation. An ensemble of deep neural networks within NASA’s Earth Science and Carbon Monitoring System, DeepSat provides vital signs of changing landscapes at the highest possible resolution, enabling scientists to use the data for independent modeling efforts. This is just one way innovation in deep learning and AI has led to a deeper understanding of our planet.

Zoom of San Francisco, CA, with individual trees segmented in this highly heterogeneous landscape. (Photo: NVIDIA)

DeepSat is used in many ways, including accurately quantifying the amount of carbon sequestered by vegetated landscapes to offset emissions; downscaling climate projection variables to a high resolution; and providing critical layers that allow assessment of effects like the urban heat island and rooftop solar efficiency. DeepSat provides a robust library of models that have millions of tunable parameters and can be scaled across very large and noisy data sets.

With the compute power of NVIDIA GPUs, NASA was able to train the networks on a survey of 330,000 image scenes across the continental U.S. The average image tile was 6,000 x 7,000 pixels and about 200 MB in size. The entire dataset for this sample was close to 65 TB for a single time epoch, with a ground sample distance of one meter. NASA also built a large training database (SATnet) of hand-generated, labeled polygons representing different land cover types for model training. CNN models were trained on an NVIDIA DIGITS DevBox, and the trained model was run on all image scenes using the GPU cluster of the NASA Ames Pleiades supercomputer, equipped with NVIDIA Tesla GPUs totaling 217,088 NVIDIA CUDA cores.
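As a rough sanity check on these figures, the short Python sketch below recomputes the dataset size and the ground footprint of a tile from the numbers quoted above. It assumes decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes) and that every scene matches the average tile. The function and constant names are illustrative only and are not part of DeepSat.

```python
# Back-of-the-envelope check of the dataset figures quoted in the article.
# Assumptions (not from the source): decimal units, and every scene has
# the average tile dimensions and file size.

SCENES = 330_000          # image scenes in the survey
TILE_WIDTH_PX = 6_000     # average tile width in pixels
TILE_HEIGHT_PX = 7_000    # average tile height in pixels
TILE_SIZE_MB = 200        # approximate size of one tile in megabytes
GSD_M = 1.0               # ground sample distance: meters per pixel


def dataset_size_tb(scenes: int, tile_mb: float) -> float:
    """Total dataset size in terabytes for `scenes` tiles of `tile_mb` each."""
    return scenes * tile_mb * 10**6 / 10**12


def tile_area_km2(width_px: int, height_px: int, gsd_m: float) -> float:
    """Ground area covered by one tile, in square kilometers."""
    return (width_px * gsd_m) * (height_px * gsd_m) / 10**6


def bytes_per_pixel(tile_mb: float, width_px: int, height_px: int) -> float:
    """Average storage per pixel implied by the tile size."""
    return tile_mb * 10**6 / (width_px * height_px)


if __name__ == "__main__":
    print("dataset size (TB):", dataset_size_tb(SCENES, TILE_SIZE_MB))
    print("tile area (km^2):", tile_area_km2(TILE_WIDTH_PX, TILE_HEIGHT_PX, GSD_M))
    print("bytes per pixel:", round(bytes_per_pixel(TILE_SIZE_MB, TILE_WIDTH_PX, TILE_HEIGHT_PX), 2))
```

Under these assumptions the total comes to 66 TB, in line with the "close to 65 TB" quoted above, and each average tile covers 42 square kilometers of ground.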

“Our best network dataset produced a classification accuracy of 97.95% and outperformed three state-of-the-art object recognition algorithms by 11%.” – Sangram Ganguly, Senior Research Scientist, NASA Ames Research Center.

A Firmer Grasp on Carbon Impact

Powered by NVIDIA GPUs, testing and training performance saw marked improvement across the board. Increasing the input size allowed for less noisy gradient descent and for bigger images that provided more context for classification, which in turn improved classification and segmentation accuracy. Training time was also reduced, leaving room for more experimentation and faster innovation.
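To illustrate why larger inputs can mean less noisy gradient descent, here is a minimal Python sketch. It reads "input size" as the number of samples averaged in each gradient step, which is an assumption, since the case study does not spell this out. It uses a toy one-parameter linear model rather than DeepSat's networks, and every name in it is hypothetical.

```python
import random
import statistics

# Toy illustration (not DeepSat code): the spread of a mini-batch gradient
# estimate shrinks as more samples are averaged per step.


def make_data(n, seed=0):
    """Synthetic 1-D regression data: y = 3x + Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + rng.gauss(0.0, 0.5) for x in xs]
    return xs, ys


def minibatch_gradient(xs, ys, w, batch_size, rng):
    """Gradient of mean squared error w.r.t. w on one random mini-batch."""
    idx = [rng.randrange(len(xs)) for _ in range(batch_size)]
    return sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in idx) / batch_size


def gradient_noise(batch_size, trials=2000, seed=1):
    """Standard deviation of the gradient estimate across many mini-batches."""
    xs, ys = make_data(5000)
    rng = random.Random(seed)
    grads = [minibatch_gradient(xs, ys, 0.0, batch_size, rng) for _ in range(trials)]
    return statistics.stdev(grads)


if __name__ == "__main__":
    for batch_size in (4, 16, 64):
        print(batch_size, round(gradient_noise(batch_size), 4))
```

In this toy setup the spread of the gradient estimate falls roughly with the square root of the batch size. That is also why GPU memory matters here: it limits how many samples, or how large an image, fit into a single training step.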

Such insight into Earth’s behavior has potential impact across industries, countries, and humanity itself. Imagery showing the changes to our planet’s vital signs can help governments plan for natural disasters by showing areas at risk of forest fires, flooding, and avalanches. It can also assist farmers with crop production on a hotter, drier planet, and provide deeper insight into sea levels, temperatures, and levels of acidity. It would not be an overstatement to say that the future of our planet depends upon it.

This case study on innovation within the AI industry from NVIDIA first ran as part of the company’s Deep Learning Success Stories.