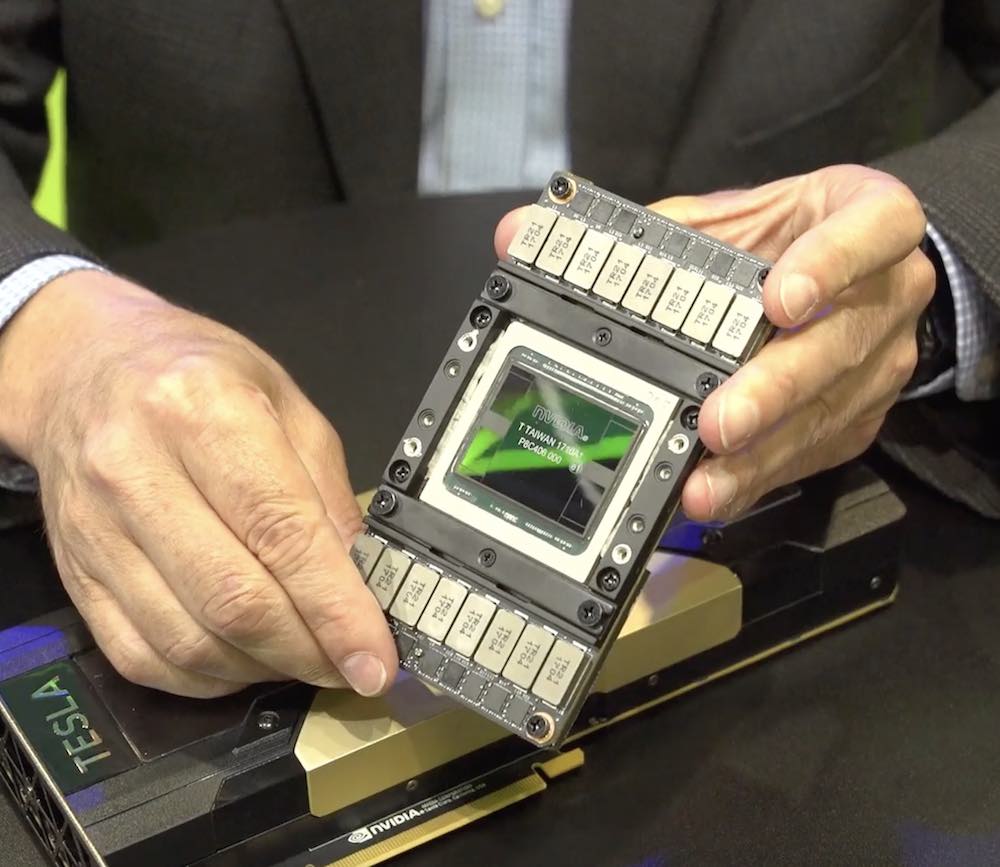

NVIDIA Volta GPU

NVIDIA and its systems partners Dell EMC, Hewlett Packard Enterprise, IBM and Supermicro today unveiled more than 10 servers featuring NVIDIA Volta architecture-based Tesla V100 GPU accelerators, the world’s most advanced GPUs for AI and other compute-intensive workloads.

“Volta systems built by our partners will ensure that enterprises around the world can access the technology they need to accelerate their AI research and deliver powerful new AI products and services,” said Ian Buck, vice president and general manager of Accelerated Computing at NVIDIA.

NVIDIA V100 GPUs, with more than 120 teraflops of deep learning performance per GPU, are uniquely designed to deliver the computing performance required for AI deep learning training and inferencing, high performance computing, accelerated analytics and other demanding workloads. A single Volta GPU offers the equivalent performance of 100 CPUs, enabling data scientists, researchers and engineers to tackle challenges that were once impossible.

Seizing on the AI computing capabilities offered by NVIDIA’s latest GPUs, Dell EMC, HPE, IBM and Supermicro are bringing to the global market a broad range of multi-V100 GPU systems in a variety of configurations.

V100-based systems announced include:

- Dell EMC — The PowerEdge R740 supporting up to three V100 GPUs for PCIe, the PowerEdge R740XD supporting up to three V100 GPUs for PCIe, and the PowerEdge C4130 supporting up to four V100 GPUs for PCIe or four V100 GPUs for NVIDIA NVLink interconnect technology in an SXM2 form factor.

- HPE — HPE Apollo 6500 supporting up to eight V100 GPUs for PCIe and HPE ProLiant DL380 systems supporting up to three V100 GPUs for PCIe.

- IBM — The next generation of IBM Power Systems servers based on the POWER9 processor will incorporate multiple V100 GPUs and take advantage of the latest generation NVLink interconnect technology — featuring fast GPU-to-GPU interconnects and an industry-unique OpenPOWER CPU-to-GPU design for maximum throughput.

- Supermicro — Products supporting the new Volta GPUs include a 7048GR-TR workstation for all-around high-performance GPU computing, 4028GR-TXRT, 4028GR-TRT and 4028GR-TR2 servers designed to handle the most demanding deep learning applications, and 1028GQ-TRT servers built for applications such as advanced analytics.

These partner systems complement an announcement yesterday by China’s leading original equipment manufacturers — including Inspur, Lenovo and Huawei — that they are using the Volta architecture for accelerated systems for hyperscale data centers.

Each NVIDIA V100 GPU features over 21 billion transistors, as well as 640 Tensor Cores, the latest NVLink high-speed interconnect technology, and 900 GB/sec HBM2 DRAM to achieve 50 percent more memory bandwidth than previous generation GPUs.
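As a rough illustration of how the 640 Tensor Cores relate to the "more than 120 teraflops" figure quoted earlier, the peak throughput can be estimated with simple arithmetic. This is a back-of-envelope sketch, not an official derivation: the per-core rate of 64 fused multiply-adds per clock and the boost clock of roughly 1530 MHz are assumptions that do not appear in this announcement, and real workloads will see lower sustained numbers.

```python
# Back-of-envelope estimate of peak Tensor Core throughput for one V100.
TENSOR_CORES = 640            # from the announcement
FMA_PER_CORE_PER_CLOCK = 64   # assumption: one 4x4x4 matrix multiply-accumulate per clock
FLOPS_PER_FMA = 2             # a fused multiply-add counts as two floating-point operations
BOOST_CLOCK_HZ = 1.53e9       # assumption: ~1530 MHz boost clock


def peak_tensor_tflops(cores, fma_per_clock, clock_hz):
    """Return theoretical peak throughput in teraflops."""
    return cores * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


v100_tflops = peak_tensor_tflops(TENSOR_CORES, FMA_PER_CORE_PER_CLOCK, BOOST_CLOCK_HZ)
print(f"{v100_tflops:.1f} TFLOPS")  # prints "125.3 TFLOPS"
```

Under these assumptions the estimate lands slightly above 120 teraflops, consistent with the per-GPU deep learning figure cited above.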

V100 GPUs are supported by NVIDIA Volta-optimized software, including CUDA 9.0 and the newly updated deep learning SDK, which includes TensorRT 3, DeepStream SDK and cuDNN 7, as well as all major AI frameworks. Additionally, hundreds of thousands of GPU-accelerated applications are available for accelerating a variety of data-intensive workloads, including AI training and inferencing, high performance computing, graphics and advanced data analytics.

“As deep learning continues to become more pervasive, technology advancements across systems and accelerators need to evolve in order to gain intelligence from large datasets faster than ever before,” said Bill Mannel, vice president and general manager of High Performance Computing and Artificial Intelligence at Hewlett Packard Enterprise. “The HPE Apollo 6500 and HPE ProLiant DL380 systems combine the industry-leading GPU performance of NVIDIA Tesla V100 GPU accelerators and Volta architecture with HPE unique innovations in system design and manageability to deliver unprecedented levels of performance, scale and efficiency for high performance computing and artificial intelligence applications.”