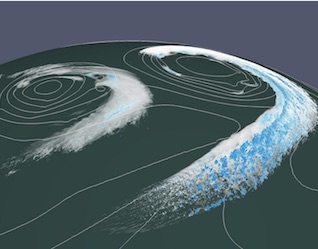

Visualization of the baroclinic wave at day 10 of the simulation with 930 m grid spacing.

A new peer-reviewed paper is reportedly causing a stir in the climatology community. Entitled, “Near-global climate simulation at 1 km resolution: establishing a performance baseline on 4888 GPUs with COSMO 5.0” the Swiss paper was written by Oliver Fuhrer, Tarun Chadha, Torsten Hoefler, Grzegorz Kwasniewski, Xavier Lapillonne, David Leutwyler, Daniel Lüthi, Carlos Osuna, Christoph Schär, Thomas C. Schulthess, and Hannes Vogt.

The best hope for reducing long-standing global climate model biases, is through increasing the resolution to the kilometer scale. Here we present results from an ultra-high resolution non-hydrostatic climate model for a near-global setup running on the full Piz Daint supercomputer on 4888 GPUs. The dynamical core of the model has been completely rewritten using a domain-specific language (DSL) for performance portability across different hardware architectures. Physical parameterizations and diagnostics have been ported using compiler directives. To our knowledge this represents the first complete atmospheric model being run entirely on accelerators at this scale. At a grid spacing of 930 m (1.9 km), we achieve a simulation throughput of 0.043 (0.23) simulated years per day and an energy consumption of 596 MWh per simulated year. Furthermore, we propose the new memory usage efficiency metric that considers how efficiently the memory bandwidth – the dominant bottleneck of climate codes – is being used.

In this related video from PASC17, Hannes Vogt (ETH Zurich / CSCS, Switzerland) presents his poster: Large-Scale Climate Simulations with Cosmo.

Recently upgraded, the Piz Daint supercomputer is Cray XC50 system used for this climate simulation is installed at the Swiss National Supercomputing Centre (CSCS). The upgrade was accomplished with additional NVIDIA Tesla P100 GPUs, boosting the Linpack the system’s performance to 19.6 petaflops.”