In this sponsored post from Asetek, the company examines how high wattage trends in HPC, deep learning and AI might be reaching an inflection point.

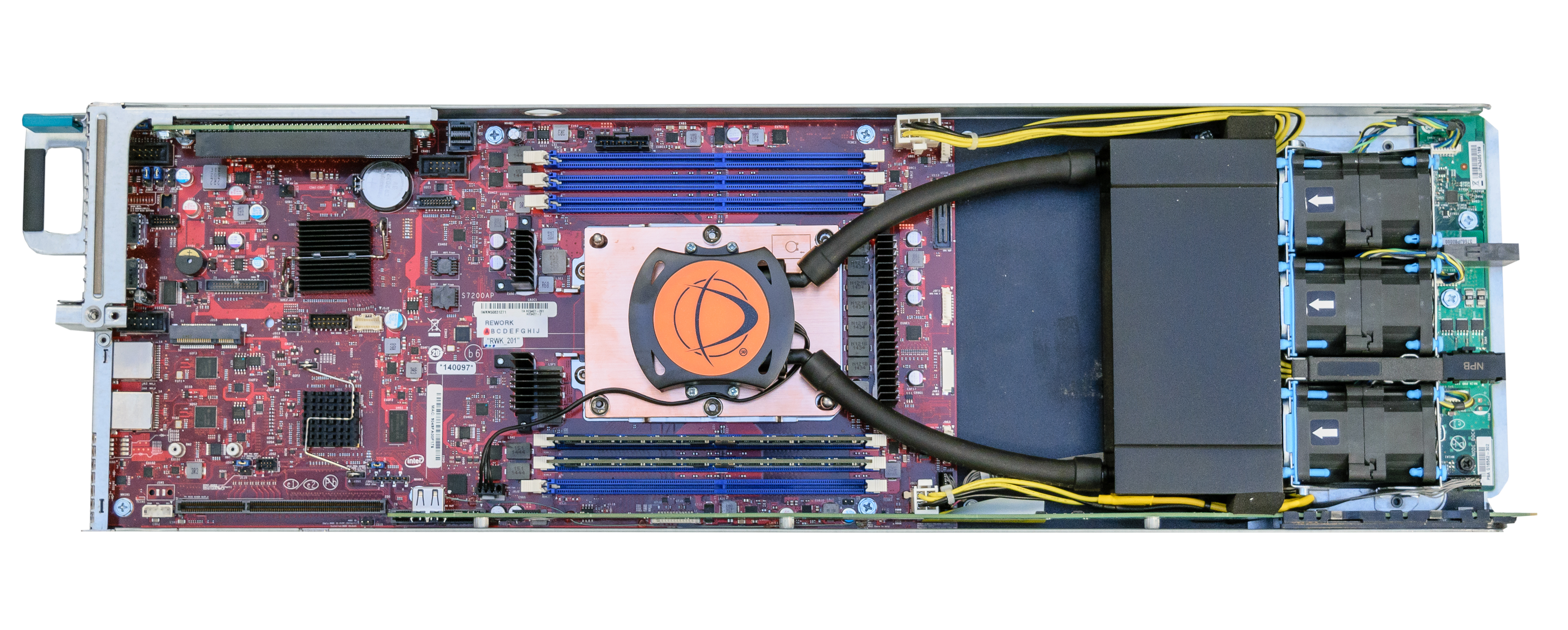

Asetek RackCDU D2C Cooling

As seen earlier this year at ISC17 in Frankfurt, many of the conversations at SC17 will focus on machine learning, AI and deep learning.

Conference tracks on the application of HPC in science, finance, logistics, manufacturing, and oil & gas will continue to expand to address the growing penetration of HPC in areas of traditional enterprise computing. With all of these segments exploiting (or planning to exploit) machine learning and AI using HPC-like compute, the issues of implementation quickly arise.

Magnified in 2017 by machine learning and AI, there is a heightened concern in the HPC community over wattage trends in CPUs, GPUs and emerging neural chips required to meet accelerating computational demands in HPC clusters. Specifically, the concern is the resulting heat and its impact on node, rack and cluster heat density, as seen with Intel’s Knights Landing and Knights Mill, NVIDIA’s P100 and the Platinum versions of the latest Intel Skylake processors.

It is important to note that these new developments represent an inflection point more than simply an extension of trends seen previously. The wattages are so high that cooling nodes containing the highest-performance chips used in HPC leaves little choice other than liquid cooling if reasonable rack densities are to be maintained. If the heat is not addressed at the node level with liquid cooling, undesirable interconnect distances, floor space build-outs and data center expansions become necessary.

Depending on the approach taken, machine learning and AI exacerbate this trend. Heat and wattage issues seen with GPUs during the training or learning phase of an AI application (especially if used in a deep learning/neural network approach) are now well known. And in some cases, these issues continue into application rollout if GPUs are used for that as well.

Even if the architecture uses quasi-GPUs like Knights Mill in the training phase (via “basic” machine learning or deep learning), followed by a handoff to scale-out CPUs like Skylake for actual usage, the issues of wattage, density and cooling remain. And it isn’t getting any better.

With distributed cooling’s ability to address site needs in a variety of heat rejection scenarios, it can be argued that the compute-wattage inflection point is a major driver in the accelerating global adoption of Asetek liquid cooling at HPC sites and by the OEMs that serve them. And as will be shown at SC17, quite a few of the nodes that OEMs are showing with liquid cooling are targeted at machine learning.

Given the variety of clusters (especially with the entrance of AI), the adaptability of the cooling approach becomes quite important. Asetek’s distributed pumping architecture is based on low-pressure, redundant pumps and closed-loop liquid cooling within each server node. This allows for a high level of flexibility in heat capture and heat rejection.

Asetek ServerLSL is a server-level liquid-assisted air cooling (LAAC) solution. It can be used as a transitional stage in the introduction of liquid cooling or as a tool to enable the immediate incorporation of the highest performance computing nodes into the data center. ServerLSL allows the site to leverage existing HVAC, CRAC and CRAH units with no changes to data center cooling. ServerLSL replaces less efficient air coolers in the servers with redundant coolers (cold plate/pumps) and exhausts 100% of the captured heat as hot air into the data center via heat exchangers (HEXs) in each server. This enables high wattage server nodes to have 1U form factors and maintain high cluster rack densities. At a site level, the heat is handled by existing CRACs and chillers with no changes to the infrastructure. With ServerLSL, liquid cooled nodes can be mixed in racks with traditional air-cooled nodes.

Asetek ServerLSL Cooling

While ServerLSL isolates the system within each server, Asetek RackCDU systems are rack-level focused, enabling a much greater impact on the cooling costs of the data center overall. RackCDU systems leverage the same pumps and coolers used with ServerLSL nodes. RackCDU is in use by all of the current sites in the TOP500 using Asetek liquid cooling.

Asetek RackCDU provides the answer both at the node level and for the facility overall. As with ServerLSL, RackCDU D2C (Direct-to-Chip) utilizes redundant pumps/cold plates atop server CPUs & GPUs (and optionally other high wattage components like memory). But the collected heat is moved via a sealed liquid path to heat exchangers in the RackCDU for transfer into facilities water. RackCDU D2C captures between 60% and 80% of server heat into liquid, reducing data center cooling costs by over 50% and allowing 2.5x-5x increases in data center server density.

The remaining heat in the data center air is removed by existing HVAC systems in this hybrid liquid/air approach. When there is unused cooling capacity available, data centers may choose to cool facilities water coming from the RackCDU with existing CRAC and cooling towers.
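To make the hybrid liquid/air split concrete, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the 60% and 80% capture fractions come from the figures quoted above, while the 30 kW rack load and the `split_heat_load` helper are hypothetical values and names chosen for the example, not Asetek specifications.

```python
def split_heat_load(rack_power_kw: float, liquid_capture_fraction: float) -> tuple[float, float]:
    """Split a rack's heat load into the part captured by liquid and the part left in the air.

    Hypothetical helper for illustration; it assumes all electrical power
    drawn by the rack ends up as heat that must be removed.
    """
    if rack_power_kw < 0:
        raise ValueError("rack_power_kw must be non-negative")
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("liquid_capture_fraction must be between 0 and 1")
    heat_to_liquid_kw = rack_power_kw * liquid_capture_fraction
    heat_to_air_kw = rack_power_kw - heat_to_liquid_kw
    return heat_to_liquid_kw, heat_to_air_kw


# Assumed rack load, picked only to make the numbers easy to follow.
RACK_POWER_KW = 30.0

# 60% and 80% are the capture range quoted for RackCDU D2C in the text.
for fraction in (0.60, 0.80):
    to_liquid, to_air = split_heat_load(RACK_POWER_KW, fraction)
    print(
        f"capture {fraction:.0%}: {to_liquid:.1f} kW to facilities water, "
        f"{to_air:.1f} kW left for existing HVAC"
    )
```

Under these assumptions, the existing air-side equipment only has to handle the remaining 20% to 40% of the rack's heat; actual figures depend on the servers and the site.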

The high level of flexibility in addressing cooling at the server, rack, cluster and site levels provided by Asetek distributed pumping is lacking in approaches that utilize centralized pumping. Asetek’s approach continues to deliver flexibility in the areas of heat capture, coolant distribution and heat rejection.

At SC17, Asetek will also have on display a new cooling technology in which servers share a rack-mounted HEX. Servers that use this shared HEX approach can continue to be used if the site later moves to RackCDU.

To learn more about Asetek liquid cooling, stop by booth 1625 at SC17 in Denver. Appointments for in-depth discussions about Asetek’s data center liquid cooling solutions at SC17 may be scheduled by sending an email to questions@asetek.com.