In this sponsored post, Katie Rivera of One Stop Systems explores the power of GPUs and the potential benefits of composable infrastructure for HPC.

Katie Rivera, Marketing Communications Manager, One Stop Systems

Those outside of the high performance computing (HPC) industry have difficulty understanding why HPC is so important to everyone. HPC vendors generate an endless stream of announcements about technological advancements that many industry outsiders probably tune out. But these advancements in HPC technology make everyone’s lives better every day in ways people may not realize. High performance computing plays a role in scientific discoveries, military defense, medical research, artificial intelligence and many other ground-breaking applications. CPUs still dominate the traditional HPC landscape, but to power these discoveries, CPUs alone no longer provide the compute power these applications require. Instead, CPUs rely on more and more GPUs to help pave the way to greater discoveries in the ever-expanding HPC applications through parallel operations.

[clickToTweet tweet=”Katie Rivera – Thirty four of the 50 most popular applications HPC users work with offer GPU acceleration.” quote=”Katie Rivera – Thirty four of the 50 most popular applications HPC users work with offer GPU acceleration.”]

Hundreds of HPC applications are optimized for GPUs. Thirty four of the 50 most popular applications HPC users work with offer GPU acceleration, and NVIDIA lists 513 GPU accelerated applications in their latest October 2017 catalog. With such a large number of HPC applications supporting GPUs, it makes sense that data scientists would want to use as many of the most powerful GPUs as they can. NVIDIA Tesla V100 is the world’s most advanced data center GPU ever built. Each Tesla V100 SXM2 provides 16GB of HBM2 Stacked Memory and a staggering 125 TeraFLOPS mixed-precision deep learning performance, 15.7 TeraFLOPS single-precision performance and 7.8 TeraFLOPS double-precision performance when using NVIDIA GPU Boost technology. This performance is made possible by the 5,120 CUDA cores and 640 Tensor Cores in the Tesla V100. The V100 GPUs take GPU acceleration to the next level.

HPC data scientists have two basic hardware architecture options to take advantage of the powerful Tesla V100 GPU. First, they may add a few GPUs inside a server with some storage in order to take advantage of the additional compute power. For a single node HPC system with a single application, this hyper-converged infrastructure solution may suffice. The second option combines existing servers with a GPU expansion accelerator system to add greater numbers of GPU compute resources than the servers can support on their own. For a single or small number of nodes this adds flexibility, greater power and better cooling for the most demanding GPUs. Expansion also allows bandwidth aggregation of many PCIe 3.0 based GPUs into the latest PCIe 4.0 based servers. For data centers with many nodes, the expansion option adds unlimited flexibility to the HPC architecture by decoupling the latest innovations in CPU capabilities, GPU performance and NVMe storage into a system called “composable HPC infrastructure.” Composable infrastructure allows any number of CPU nodes to dynamically map the optimum number of GPU and NVMe storage resources to each node required to complete a specific task. When the task completes, the resources return to the cluster pool so they can be mapped to the next set of nodes to run the next task.

HPC data scientists can use servers they already own and add GPU expansion and NVMe storage via expansion with no additional server investment.

Composable infrastructure using expansion accelerators provides many benefits. HPC data scientists can use servers they already own and add GPU expansion and NVMe storage via expansion with no additional server investment. If they do plan to purchase new servers, data scientists should choose the best server for the application, no matter how many GPUs fit inside. Since servers, GPUs and storage upgrade on different schedules from the various vendors, composable infrastructure can be upgraded at different times spreading the capital expenditures over many fiscal periods. Better yet, data scientists can rent the latest technology composable infrastructure systems and software from Cloud Service Providers using operational expenditure budgets rather than capital equipment budgets. Other benefits of composable infrastructure using expansion systems include large number of GPUs on the same PCIe or network fabric, especially for AI, deep learning, RTM, Monte Carlo and image processing applications that benefit from peer-to-peer communication with moderate CPU interaction.

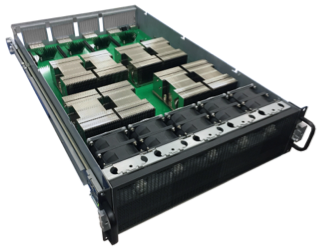

CA16010 high-density compute accelerator (HDCA)

At GTC Europe in Munich last month, OSS released two of the most powerful GPU expansion accelerators used in composable infrastructure solutions, the CA16010 and SCA8000. Both expand the performance of typical GPU-accelerated compute nodes to new limits. The CA16010 high-density compute accelerator (HDCA) platform delivers 16 PCIe NVIDIA Tesla V100 GPUs providing over 1.7 petaflops of Tensor Operations in a single node for maximum performance in the highest density per rack. Using the OSS GPUltima rack-level solution with 128 PCIe Tesla V100 GPUs, the CA16010 nodes combine for over 14 PetaFLOPs of compute capability using NVIDIA GPU Boost™.

The SCA8000 platform packs eight powerful NVIDIA Tesla V100 SXM2 GPUs connected via NVIDIA NVLink in a single GPU expansion accelerator. Each Tesla V100 SXM2 GPU provides 300GB/s bidirectional interconnect bandwidth for the most performance-hungry, peer-to-peer applications in data centers today. With up to four PCI-SIG PCIe Cable 3.0 compliant links to the host server up to 100m away, the SCA8000 supports a flexible upgrade path for new and existing datacenters with the power of NVLink without upgrading server infrastructure. With advanced, independent IPMI system monitoring and full featured SNMP interface not available in any other GPU accelerator with NVLink, the SCA8000 fits seamlessly into any size datacenter.

SCA8000 platform

Visitors to Supercomputing (SC17) this month in Denver, Colorado can see both of these systems at the OSS Booth #2049 and discuss our hyper-converged and composable infrastructure hardware, software and rental solutions with our knowledgeable staff and partners.

Katie Rivera is marketing communications manager at One Stop Systems.