Today Excelero announced that SciNet, Canada’s largest supercomputer center, has deployed Excelero’s NVMesh server SAN as the highly efficient, cost-effective storage behind a new supercomputer at the University of Toronto. By using NVMesh as a burst buffer, a storage architecture that helps ensure high availability and high ROI, SciNet created a unified pool of distributed high-performance NVMe flash that retains the speed and latency of directly attached storage media while meeting the demanding service level agreements (SLAs) for the new supercomputer.

Using Excelero’s NVMesh in a burst buffer implementation, SciNet created a peta-scale storage system that leverages the full performance of NVMe SSDs at scale, over the network, easily meeting SLA requirements for completing checkpoints in 15 minutes without needing costly proprietary arrays. With NVMesh, SciNet created a unified, distributed pool of NVMe flash storage comprising 80 NVMe devices in just 10 NSD protocol-supporting servers. This provided approximately 148 GB/s of write burst (device limited) and 230 GB/s of read throughput (network limited), in addition to well over 20M random 4K IOPS.
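For a sense of scale, the quoted figures can be broken down with some back-of-the-envelope arithmetic. The sketch below is illustrative only: it assumes the write burst rate is sustained for the full 15-minute checkpoint window, uses decimal units (1 TB = 1,000 GB), and ignores file system overhead. The announcement does not state an actual checkpoint size, and the function and variable names are made up for this example.

```python
# Figures quoted in the announcement.
NVME_DEVICES = 80
SERVERS = 10
WRITE_BURST_GB_S = 148         # device limited
READ_GB_S = 230                # network limited
CHECKPOINT_WINDOW_S = 15 * 60  # 15-minute checkpoint SLA, in seconds


def per_unit(total_gb_s: float, units: int) -> float:
    """Split an aggregate rate evenly across devices or servers."""
    return total_gb_s / units


def max_checkpoint_tb(write_gb_s: float, window_s: int) -> float:
    """Upper bound on data written in the window, in decimal TB.

    Assumes the burst rate holds for the whole window (an idealization).
    """
    return write_gb_s * window_s / 1000


write_per_device = per_unit(WRITE_BURST_GB_S, NVME_DEVICES)
read_per_server = per_unit(READ_GB_S, SERVERS)
ceiling_tb = max_checkpoint_tb(WRITE_BURST_GB_S, CHECKPOINT_WINDOW_S)

print(f"Write per NVMe device: {write_per_device:.2f} GB/s")
print(f"Read per server:       {read_per_server:.1f} GB/s")
print(f"15-minute write ceiling: {ceiling_tb:.1f} TB")
```

Under these assumptions, each device would contribute about 1.85 GB/s of write bandwidth, each server about 23 GB/s of read throughput, and the pool could absorb at most roughly 133 TB within the 15-minute window.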

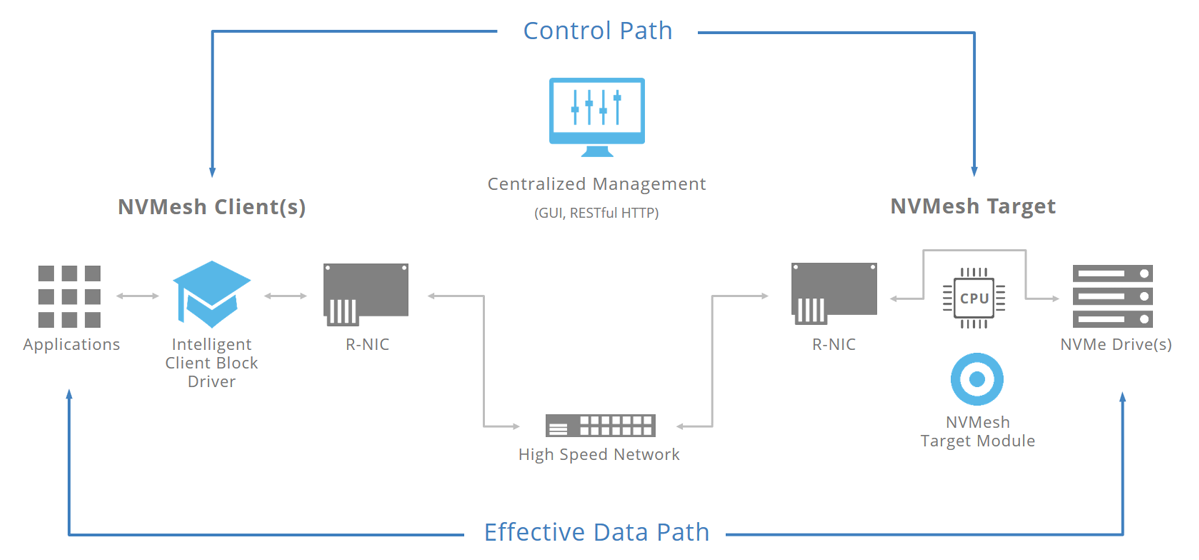

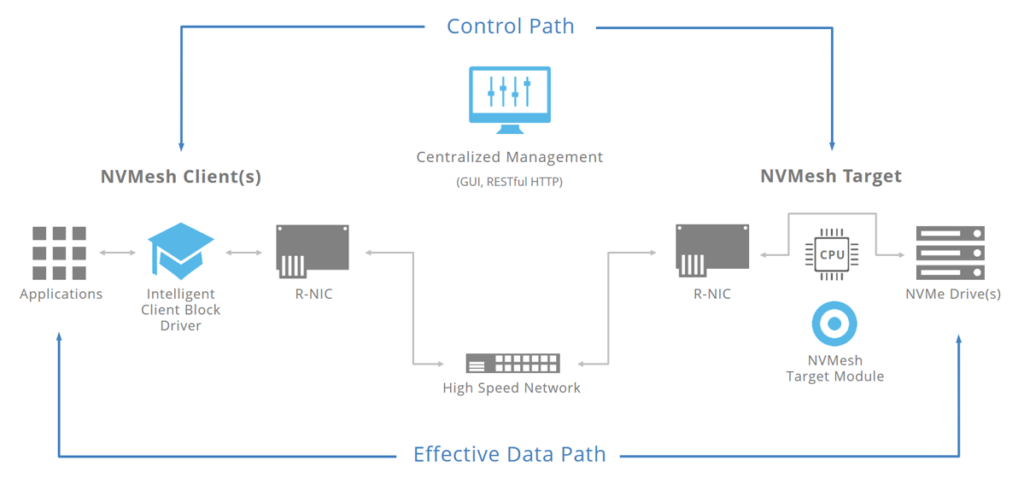

“For SciNet, NVMesh is an extremely cost-effective method of achieving unheard-of burst buffer bandwidth,” said Dr. Daniel Gruner, chief technical officer, SciNet High Performance Computing Consortium. “By adding commodity flash drives and NVMesh software to compute nodes, and to a low-latency network fabric that was already provided for the supercomputer itself, NVMesh provides redundancy without impacting target CPUs. This enables standard servers to go beyond their usual role in acting as block targets – the servers now can also act as file servers.”

Emulating the “shared nothing” architectures of the tech giants, SciNet’s NVMesh deployment allows it to use hardware from any storage, server and networking vendor, eliminating vendor lock-in. Integration with SciNet’s parallel file system is straightforward, and the system enables SciNet to scale both capacity and performance linearly as its research load grows.