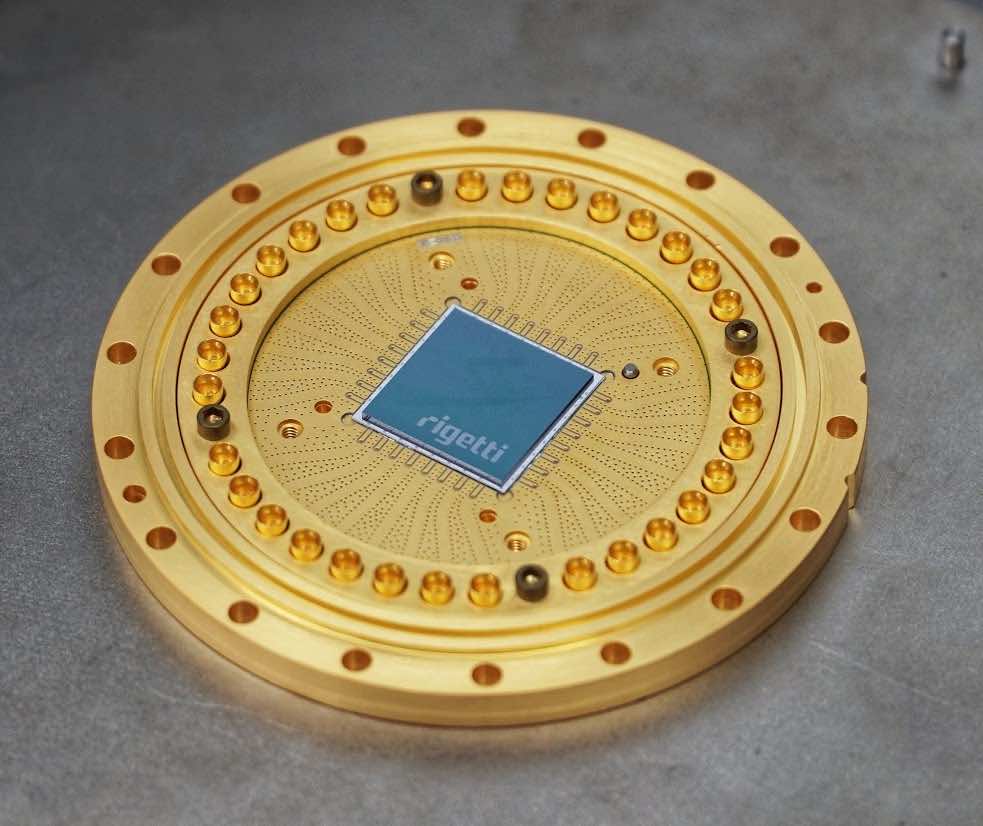

Over at Rigetti Computing, Will Zeng writes that the company has published a new white paper on unsupervised machine learning using 19Q, their new 19-qubit general purpose superconducting quantum processor. To accomplish this, they used a quantum/classical hybrid algorithm for clustering developed at Rigetti.

Clustering is a fundamental technique in modern data science with applications from advertising and credit scoring to entity resolution and image segmentation. The 19 qubits in our processor make this the largest hybrid demonstration to date. We believe in the fundamental power of hybrid quantum/classical computing. Our product Forest, a quantum development environment, is built upon this approach, with the Quil instruction set as its foundation. Today's results are a demonstration of that power. We show that our algorithm is robust to quantum processor noise, and we find evidence that classical optimization can be used to train around both coherent and incoherent hardware imperfections. Beating the best classical benchmarks will require more qubits and better performance, but hybrid proofs-of-concept like this one form the basis of valuable applications for the first quantum computers.
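As the paper's figure caption describes, the clustering instance is solved as a MaxCut problem: split the vertices of a weighted graph into two clusters so that the total weight of edges crossing between the clusters is as large as possible. The Python below is a minimal classical sketch of that objective, not Rigetti's code; every function name is hypothetical, and the random weights only mirror the caption's note that edge weights were chosen at random. The exhaustive search is meant for toy graphs only, since it checks all 2^n labelings (already 524,288 for 19 vertices).

```python
import itertools
import random


def random_weighted_graph(edges, seed=0):
    """Assign a random weight in [0, 1) to each edge.

    Hypothetical stand-in for the paper's overlap-based edge weights.
    """
    rng = random.Random(seed)
    return {edge: rng.random() for edge in edges}


def cut_weight(weights, labels):
    """Total weight of edges whose endpoints fall in different clusters."""
    return sum(w for (i, j), w in weights.items() if labels[i] != labels[j])


def brute_force_maxcut(weights, n):
    """Try every 0/1 cluster labeling of n vertices and keep the best cut."""
    best_value, best_labels = -1.0, None
    for labels in itertools.product((0, 1), repeat=n):
        value = cut_weight(weights, labels)
        if value > best_value:
            best_value, best_labels = value, labels
    return best_value, best_labels


if __name__ == "__main__":
    # Hypothetical 5-vertex graph; the real 19Q instance follows the chip's connectivity.
    edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]
    weights = random_weighted_graph(edges, seed=42)
    value, clusters = brute_force_maxcut(weights, n=5)
    print(f"cut weight {value:.3f}, cluster labels {clusters}")
```

The labels returned by `brute_force_maxcut` play the same role as the vertex colors in the figure: each color is one cluster.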

Abstract:

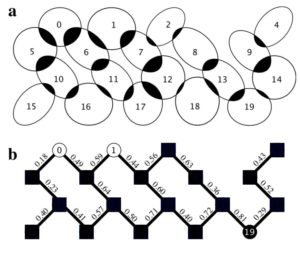

The general form of the clustering problem instance solved on the 19Q chip, and b, the corresponding Maxcut problem instance solved on the 19 qubit chip. The edge weights—corresponding to the overlap between neighbouring probability distributions on the plane—are chosen at random and the labels indicate the mapping to the corresponding qubit. The vertices are colored according to the solution to the problem.

Machine learning techniques have led to broad adoption of a statistical model of computing. The statistical distributions natively available on quantum processors are a superset of those available classically. Harnessing this attribute has the potential to accelerate or otherwise improve machine learning relative to purely classical performance. A key challenge toward that goal is learning to hybridize classical computing resources and traditional learning techniques with the emerging capabilities of general purpose quantum processors. Here, we demonstrate such hybridization by training a 19-qubit gate model processor to solve a clustering problem, a foundational challenge in unsupervised learning. We use the quantum approximate optimization algorithm in conjunction with a gradient-free Bayesian optimization to train the quantum machine. This quantum/classical hybrid algorithm shows robustness to realistic noise, and we find evidence that classical optimization can be used to train around both coherent and incoherent imperfections.
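The hybrid loop in the abstract pairs a quantum circuit, the quantum approximate optimization algorithm (QAOA), with a classical gradient-free optimizer. As a rough illustration only, the sketch below simulates depth-1 QAOA for MaxCut with a noiseless NumPy statevector instead of running on 19Q hardware, and swaps a plain grid search in for the Bayesian optimization the paper used. All function names are hypothetical, and the sketch models none of the hardware noise the paper trains around.

```python
import numpy as np


def cost_diagonal(weights, n):
    """MaxCut value C(z) for every basis state z (qubit 0 = most significant bit)."""
    z = np.arange(2 ** n)
    bits = (z[:, None] >> (n - 1 - np.arange(n))) & 1  # shape (2**n, n)
    cost = np.zeros(2 ** n)
    for (i, j), w in weights.items():
        cost += w * (bits[:, i] != bits[:, j])
    return cost


def apply_mixer(state, beta, n):
    """Apply exp(-i * beta * X) to every qubit of the statevector."""
    rx = np.array([[np.cos(beta), -1j * np.sin(beta)],
                   [-1j * np.sin(beta), np.cos(beta)]])
    psi = state.reshape([2] * n)
    for k in range(n):
        psi = np.tensordot(rx, psi, axes=([1], [k]))  # transformed qubit k is now axis 0
        psi = np.moveaxis(psi, 0, k)                  # move it back to position k
    return psi.reshape(-1)


def qaoa_expectation(params, cost, n):
    """<C> after QAOA layers; params = [gamma_1, beta_1, ..., gamma_p, beta_p]."""
    state = np.full(2 ** n, 1 / np.sqrt(2 ** n), dtype=complex)  # uniform |+>^n
    for gamma, beta in zip(params[0::2], params[1::2]):
        state = np.exp(-1j * gamma * cost) * state  # cost layer is diagonal
        state = apply_mixer(state, beta, n)
    return float(np.sum(np.abs(state) ** 2 * cost))


def grid_search(cost, n, steps=40):
    """Gradient-free outer loop for depth 1 (grid search stands in for Bayesian optimization)."""
    best_value, best_params = -np.inf, None
    for gamma in np.linspace(0, np.pi, steps):  # assumed, coarse range for gamma
        # For MaxCut, <C> repeats with period pi/2 in beta, so [0, pi/2] suffices.
        for beta in np.linspace(0, np.pi / 2, steps):
            value = qaoa_expectation([gamma, beta], cost, n)
            if value > best_value:
                best_value, best_params = value, (gamma, beta)
    return best_value, best_params


if __name__ == "__main__":
    ring = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 1.0, (3, 0): 1.0}
    cost = cost_diagonal(ring, 4)
    value, (gamma, beta) = grid_search(cost, 4)
    print(f"<C> = {value:.3f} at gamma = {gamma:.3f}, beta = {beta:.3f}")
```

Each call to `qaoa_expectation` plays the role of one run on the quantum processor. On hardware, ⟨C⟩ would instead be estimated from sampled bitstrings, which is one reason a gradient-free outer loop can be attractive.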

You can read more about the demo in the Rigetti research paper.