Alan Jorgensen from Bounded Floating Point (BFP)

Today a company called Bounded Floating Point announced a “breakthrough patent in processor design, which allows representation of real numbers accurate to the last digit for the first time in computer history.”

This bounded floating point system is a game changer for the computing industry, particularly for computationally intensive functions such as weather prediction, GPS, and autonomous vehicles,” said the inventor, Alan Jorgensen, PhD. “By using this system, it is possible to guarantee that the display of floating point values is accurate to plus or minus one in the last digit.”

As we’ve talked about here on insideHPC, error in floating point operations has been known since the beginning of the computer age and can be catastrophic. According to BFP, the most notorious floating point error was the Patriot missile failure in Saudi Arabia, when a Patriot missile failed to destroy a Scud missile. As a result, 28 U.S. military people were killed. This occurred when the conversion of 100 hours in tenths of a second (3600000) to floating point introduced an undetectable error resulting in the missile guidance software incorrectly locating the scud missile.

All general‐purpose computers, including smart phones, have hardware or software implementations of mathematical operations on floating point numbers. In most cases floating point numbers, and the operations on them, introduce an error  which is undetected and can accumulate, even catastrophically, over a sequence of operations. Performing complex mathematical computations requires myriad floating point operations, each of which is likely to make the result less accurate without use of this new system.

which is undetected and can accumulate, even catastrophically, over a sequence of operations. Performing complex mathematical computations requires myriad floating point operations, each of which is likely to make the result less accurate without use of this new system.

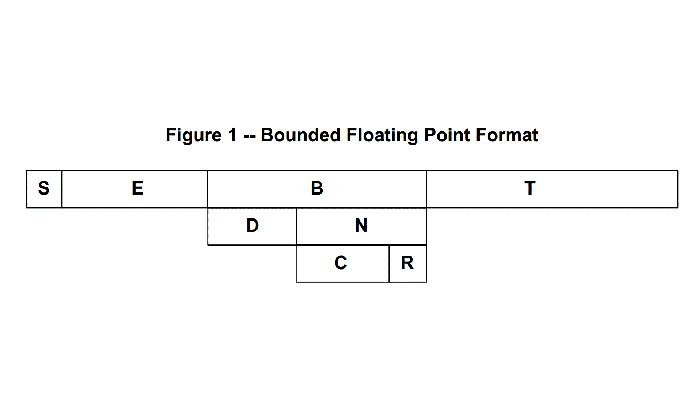

How does it work? – The innovative bounded floating point system computes two limits (or bounds) that contain the represented real number. These bounds are carried through successive calculations. When the calculated result is no longer sufficiently accurate the result is so marked, as are all further calculations made using that value. It is fail‐safe and performs in real time. It can operate in conjunction with existing hardware and software. Conversions between existing standardized floating point and this new bounded floating point format are simple operations.

The company was issued US Patent number 9,817,662 for the invention as of November 14, 2017.

About the Inventor:

Alan Jorgensen is a software testing engineer, professor, and cyber bounty hunter who has been concerned with the error in floating point calculations since 1975 while testing processor architecture. After extensive research and tests, he successfully developed a system that solved the decades‐old problem.

Absolutely amazing that the US Patent Office would grant a patent for an idea I first publicly presented in 2013, and published in a very well-received book (The End of Error: Unum Arithmetic) in February 2015. All three forms of unum arithmetic are open source and free of patent restrictions (MIT Open Source license). For Jorgensen to claim to be the inventor of this concept is pretty outrageous.

I have to agree with Dr Gustafson. Im my opinion Mr Jorgensen’s “patent” is worthless.

Have you notified the uspto patent officer? Also, EFF fights a lot of these battles. They may be able to assist.

I must respectfully disagree with your assessment. See below. I am wondering if you read the patent carefully? I am wondering why you think my idea is the same as yours? Precisely what points are the same? I should think that by your criteria, your idea is not new because of interval arithmetic. I do believe your idea is new and unique, and I respect that. I do not believe it solves many of the problems with the representation real in a fixed space.

The patent office does not evaluate the workability of patents submitted, but rather whether they are new and unique. My patent meets those requirements.

Agreed. These techniques have been used in software for quite some time – tracking maximal error and recalculating if needs be. I don’t even recall the first time I used one.

Chances are, he didn’t know. I also have my own weird solution. The problem is that I had to invent a fundamentally different method of representing linear values. Each digit is a binary “greater than half” or “less than half”. Arithmetic is very easy… I have a competitor to machine learning perceptors that I tried to develop this for.. but so far, I am using base-10 integers–assuming a “0.” on the left. I am only trying to represent something similar to probabilities… from 0 to 0.99999 (as many digits as we have). So there is no need for the”0.” and my notation can save bytes. These are “intensities” from proprioceptors and otherwise “confidences” (in correlation). The higher precision the numbers that can be represented, the finer the details that can be recognized amid features. This is particularly useful for ratio’d details–finding novel features between given features.

Matthew,

I am wondering if you published this method? A quick Google search of your name and “floating point” did not yield any results. I would be interested in more details of your solution to this problem, though it appears to me to be of the class of variable length floating point that in general has severe issues with cancellation error.

I appreciate Professor Gustafson’s comments, but I am fully aware of Unums.

I was concerned about his solution to floating point error when I heard of his book and purchased and read it immediately and commented in several venues.

Though his book was widely read, his solution to floating point error is not accepted in the industry. Who manufactures Unum processors? Who even uses them to do real substantive work?

Professor Kahan has published counter arguments, and though his debate with Professor Kahan was recorded poorly for YouTube, his arguments are cogent.

My explanation to Professor Kahan as to why Unums will not work is as follows:: Every Unum calculation has the potential for producing a data value of a different data type. I’m using common definition of “data type” here as meaning the format of the representation of data. The proposes solution to this issue of establishing sets for each data time will not work either because the number of data types grows exponentially. And, as with every other method of representing floating point error, cancellation is represented badly or even ignored.

I stand by the claims of my patent: It uniquely provides an efficient, real time, solution to bounding the representation of real number in computers that includes accommodation of both cancellation and rounding error and provides real time notification of loss of the defined required accuracy.

If you have read the patent in detail to see how it functions, and have comments on that design, I would appreciate that.

I have added a detailed rebuttal on my website.

I would like to thank Prof. Jorgensen for having cited my work in his patent as a background of his invention.

My original work dates back to 1991!

In Jan 2012 I published on arxiv the paper that is cited: https://arxiv.org/abs/1201.5975, which is a revision of the previous work, taking into account the reception that followed and subsequent developments.

To say the truth, although this is a decade-old and painful problem, it has not received a great attention by researchers in the field, perhaps because of the false conviction that there is little to do with it and that we just have to get along with it, and after all the great majority of computational problems are not so critical, so that emphasis so far has been placed in speed of computation, neglecting robustness and accuracy.

Therefore I cannot count a lot of citations, so that I am glad of this one, even if in negative terms.

However I don’t think that Prof. Jorgensen’s judgment of my work is fair!

I understand that in a patent one has to emphasize the merit of what is proposed while placing in a bad light other alternatives, but it would be great to be fair also in this circumstances.

In his patent he liquidates my work with a few words: “This technique increases required storage space, adds computation time and does not provide bounds for the error.”

Also if this is literally true it is not that bad as one may think just reading this few words.

Well, there is no free meal in this world, if we have additional information we need space for it, right? This is true also for the invention at hand: “The present invention makes a slight decrease in the maximum number of bits available for the significand for real number representation in order to accommodate space for error information”.

A time penalty also exists: “the present invention provides error computation in real time with, at most, a small increase in computation time”.

Therefore, as far as space and time are concerned, the situation seems to me not much different from the method that I proposed long time ago, because if it’s true that a software implementation of my method would certainly slow down computations significantly, this should not be true for a specialized hardware implementation, but this is not quantifiable, because no study on this subject has been carried out.

Finally, regarding error bounds, it is true that my method doesn’t provide error bounds, however it can ensure computations within given error bounds! Which, in my opinion, is what really matters.

In my method, when an ill conditioned problem is detected precision is automatically extended as much as required in order to respect given error bounds. It is not clear instead what we do here once a loss in precision is detected.

However this is no more my business and I don’t have time the go into the details of the patent.

To conclude, I wish to Prof. Jorgensen all the best and success with his patent, I would appreciate however if he would recognize that my method is not that bad, but it’s just another ways of doing things with its cons but also its pros.

Dr. Masotti

It was certainly not my intent to demean your work which I found extremely valuable for validating and improving the background for my patent for the mitigation of floating point error.

I certainly recognize the difficulty you have had having your work recognized. Making serious contributions in business, protected by NDA, and in government, protected by security classifications, makes it difficult for us to be recognized in the academic world. Yet you and I have strived to solve this problem that has cost lives and likely others where we have no way of attributing the loss of life to floating point error.

I have reread your paper and there are a number of highlighted places, reminding me of how valuable your paper has been for me.

The format for storing e of the couple (x,e), 2.1 of your paper, however, is unclear to me. Differing from interval arithmetic, this could provide a more rapid evaluation of the precision of the result.

I have found that during the computation of floating point operations, there are two kinds of error that develop and interact, rounding and cancelation, where rounding is a linear error and cancelation is an exponential error. For a long time I’ve thought of this as an “Apples and Oranges” kind of problem until I realized that I could compute and store the logarithm of an error bound. It is a bit tricky computing the logarithm of the accumulated rounding error, but I have a scheme that works pretty well.

I would have liked to have seen in your paper examples of problems like:

1000 – 999.1, or Sum(0.1, i=1,500)

With bounded floating point these look like:

W:\>bfp64 1000 – 999.1

0.90000000

W:\>bfp64 500 S .1

50.00000

Accurate to +-1 ulp (base 10).

It seems to me that nearly all attempts at mitigating floating point error simply ignore cancelation (with an occasional mention of “instability”). Goldberg 1991, What Every Computer Scientist Should Know … defines “catastrophic cancellation” and “benign cancellation” but I have found that there is an accumulation of benign cancellation that sometimes overshadows even voluminous rounding errors.

Apparently you have read at least some of my patent and I really appreciate that. Most scholars do not appreciate the patent process and the effort required to develop a rigorous patent. The patent that was actually granted was the 3rd submission. The submission date was the date of that 3rd submission.

Thank you for your comments, perhaps we can form another line of communication via my website, BoundedFloatingPoint.com.

Alan A. Jorgensen, BS EE, Ph.D. CS