John Barrus writes that Cloud TPUs are available in beta on Google Cloud Platform to help machine learning experts train and run their ML models more quickly.

John Barrus writes that Cloud TPUs are available in beta on Google Cloud Platform to help machine learning experts train and run their ML models more quickly.

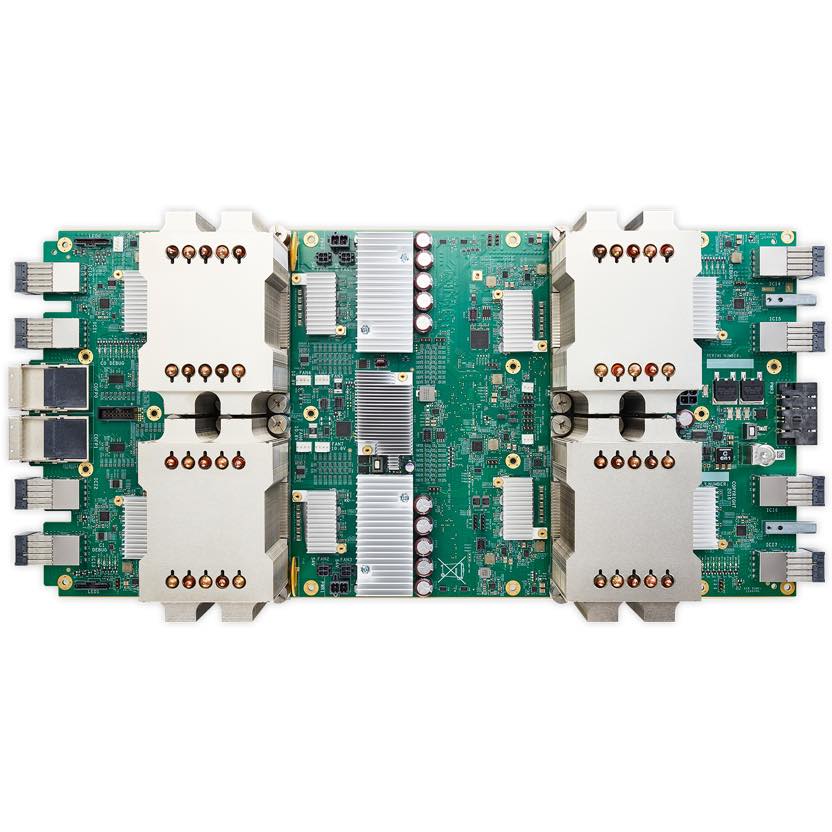

“Cloud TPUs are a family of Google-designed hardware accelerators that are optimized to speed up and scale up specific ML workloads programmed with TensorFlow. Built with four custom ASICs, each Cloud TPU packs up to 180 teraflops of floating-point performance and 64 GB of high-bandwidth memory onto a single board. These boards can be used alone or connected together via an ultra-fast, dedicated network to form multi-petaflop ML supercomputers that we call “TPU pods.” We will offer these larger supercomputers on GCP later this year.”

We designed Cloud TPUs to deliver differentiated performance per dollar for targeted TensorFlow workloads and to enable ML engineers and researchers to iterate more quickly. For example:

- Instead of waiting for a job to schedule on a shared compute cluster, you can have interactive, exclusive access to a network-attached Cloud TPU via a Google Compute Engine VM that you control and can customize.

- Rather than waiting days or weeks to train a business-critical ML model, you can train several variants of the same model overnight on a fleet of Cloud TPUs and deploy the most accurate trained model in production the next day.

- Using a single Cloud TPU and following this tutorial, you can train ResNet-50 to the expected accuracy on the ImageNet benchmark challenge in less than a day, all for well under $200!

Traditionally, writing programs for custom ASICs and supercomputers has required deeply specialized expertise. By contrast, you can program Cloud TPUs with high-level TensorFlow APIs, and we have open-sourced a set of reference high-performance Cloud TPU model implementations to help you get started right away:

- ResNet-50 and other popular models for image classification

- Transformer for machine translation and language modeling

- RetinaNet for object detection

To save you time and effort, we continuously test these model implementations both for performance and for convergence to the expected accuracy on standard datasets. Over time, we’ll open-source additional model implementations. Adventurous ML experts may be able to optimize other TensorFlow models for Cloud TPUs on their own using the documentation and tools we provide.

By getting started with Cloud TPUs now, you’ll be able to benefit from dramatic time-to-accuracy improvements when we introduce TPU pods later this year. As we announced at NIPS 2017, both ResNet-50 and Transformer training times drop from the better part of a day to under 30 minutes on a full TPU pod, no code changes required.

We made a decision to focus our deep learning research on the cloud for many reasons, but mostly to gain access to the latest machine learning infrastructure. Google Cloud TPUs are an example of innovative, rapidly evolving technology to support deep learning, and we found that moving TensorFlow workloads to TPUs has boosted our productivity by greatly reducing both the complexity of programming new models and the time required to train them. Using Cloud TPUs instead of clusters of other accelerators has allowed us to focus on building our models without being distracted by the need to manage the complexity of cluster communication patterns.”