Researchers at LLNL are using supercomputers to simulate the onset of earthquakes in California.

In the next 30 years, there is a one-in-three chance that the Hayward fault will rupture in a magnitude 6.7 or greater earthquake, according to the U.S. Geological Survey (USGS). Such an earthquake would cause widespread damage to structures, transportation and utilities, as well as economic and social disruption in the East Bay.

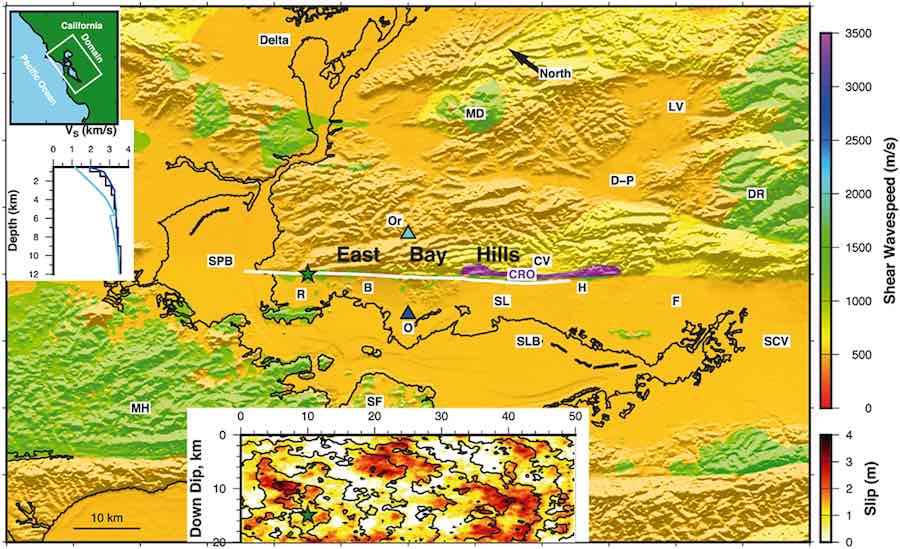

Lawrence Livermore and Lawrence Berkeley national laboratory scientists have used some of the world’s most powerful supercomputers to model ground shaking for a magnitude (M) 7.0 earthquake on the Hayward fault and show more realistic motions than ever before. The research appears in Geophysical Research Letters.

Past simulations resolved ground motions from low frequencies up to 0.5-1 hertz (Hz, vibrations per second). The new simulations resolve motions up to 4-5 Hz, a four- to eightfold increase in the resolved frequencies. Motions at these frequencies can be used to evaluate how buildings respond to shaking.
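A back-of-the-envelope sketch of why raising the frequency limit calls for supercomputers. It assumes a standard rule of thumb that the article does not state: explicit 3D wave solvers keep a fixed number of grid points per shortest wavelength, so the grid spacing shrinks in proportion to 1/f_max in each of three dimensions and the stable time step shrinks in proportion as well. Under that assumption, memory grows roughly as f_max^3 and total work as f_max^4. The function names are hypothetical and for illustration only.

```python
def fold_increase(old_hz: float, new_hz: float) -> float:
    """Ratio of the new maximum resolved frequency to the old one."""
    return new_hz / old_hz


def relative_cost(old_hz: float, new_hz: float, exponent: int = 4) -> float:
    """Rough cost ratio, ASSUMING cost scales as f_max**exponent.

    exponent=4: work (f**3 grid points times f time steps in 3D).
    exponent=3: memory (grid points only).
    """
    return fold_increase(old_hz, new_hz) ** exponent


if __name__ == "__main__":
    new = 4.0
    for old in (0.5, 1.0):
        print(
            f"{old} Hz -> {new} Hz: {fold_increase(old, new):.0f}x frequency, "
            f"~{relative_cost(old, new):.0f}x work, "
            f"~{relative_cost(old, new, exponent=3):.0f}x memory"
        )
```

Under these assumptions, going from 1 Hz to 4 Hz is only a fourfold change in frequency but roughly a 256-fold change in computational work, which is why such runs were out of reach until recently.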

The simulations rely on the LLNL-developed SW4 seismic simulation program and the current best representation of the three-dimensional (3D) earth (geology and surface topography from the USGS) to compute seismic wave ground shaking throughout the San Francisco Bay Area. Importantly, the results are, on average, consistent with models based on actual recorded earthquake motions from around the world.

“This study shows that powerful supercomputing can be used to calculate earthquake shaking on a large, regional scale with more realism than we’ve ever been able to produce before,” said Artie Rodgers, LLNL seismologist and lead author of the paper.

The Hayward fault is a major strike-slip fault on the eastern side of the Bay Area. This fault is capable of M 7 earthquakes and presents a significant ground motion hazard to the heavily populated East Bay, including the cities of Oakland, Berkeley, Hayward and Fremont. The last major rupture occurred in 1868 with an M 6.8-7.0 event. No instrumental recordings of this earthquake exist; however, historical reports from the few thousand people who lived in the East Bay at the time indicate major damage to structures.

The recent study reports ground motions simulated for a so-called scenario earthquake, one of many possibilities.

“We’re not expecting to forecast the specifics of shaking from a future M 7 Hayward fault earthquake, but this study demonstrates that fully deterministic 3D simulations with frequencies up to 4 Hz are now possible. We get good agreement with ground motion models derived from actual recordings and we can investigate the impact of source, path and site effects on ground motions,” Rodgers said.

As these simulations become easier with improvements in SW4 and computing power, the team will sample a range of possible ruptures and investigate how motions vary. The team also is working on improvements to SW4 that will enable simulations to 8-10 Hz for even more realistic motions.

For residents of the East Bay, the simulations specifically show stronger ground motions on the eastern side of the fault (Orinda, Moraga) than on the western side (Berkeley, Oakland). This results from differences in geologic materials, namely the deep, weaker sedimentary rocks that form the East Bay Hills. Evaluating and improving the current 3D earth model is the subject of ongoing research, for example using the Jan. 4, 2018, M 4.4 Berkeley earthquake that was widely felt around the northern Hayward fault.
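One simplified mechanism behind stronger shaking over weaker rocks can be illustrated with a toy model. This sketch is not SW4, which solves the full 3D elastic equations with higher-order methods and a realistic earth model, and it is not the paper's analysis. It assumes a 1D shear wave, uniform density, second-order finite differences and a single sharp boundary between stiff "rock" and a slower "soft" layer; all names and parameter values are hypothetical. When a wave passes into material with lower seismic impedance Z (density times wave speed), its displacement amplitude grows by the plane-wave transmission coefficient 2 Z_rock / (Z_rock + Z_soft).

```python
import math


def simulate_layered_pulse(length=4.0, interface=2.0, c_rock=2.0, c_soft=1.0,
                           dx=0.005, courant=0.5, x0=1.0, sigma=0.1, t_end=1.5):
    """Toy 1D shear-wave solver for rho*u_tt = (mu*u_x)_x with rho = 1.

    HYPOTHETICAL illustration: second-order leapfrog in time and space,
    fixed (u = 0) ends. A right-going Gaussian pulse starts in the stiff
    'rock' layer and crosses into the slower 'soft' layer.
    Returns the peak |u| in the soft layer at t_end.
    """
    n = int(round(length / dx)) + 1
    x = [i * dx for i in range(n)]
    c = [c_rock if xi < interface else c_soft for xi in x]
    mu = [ci * ci for ci in c]  # shear modulus mu = rho * c**2 with rho = 1
    # Harmonic average of mu on the face between grid points i and i+1.
    mu_face = [2 * mu[i] * mu[i + 1] / (mu[i] + mu[i + 1]) for i in range(n - 1)]

    dt = courant * dx / max(c)  # CFL-limited time step
    r2 = (dt / dx) ** 2

    def pulse(xi):
        return math.exp(-0.5 * ((xi - x0) / sigma) ** 2)

    u = [pulse(xi) for xi in x]                    # displacement at t = 0
    u_old = [pulse(xi + c_rock * dt) for xi in x]  # t = -dt, so the pulse moves right
    for _ in range(int(round(t_end / dt))):
        u_new = [0.0] * n  # end points stay fixed at zero
        for i in range(1, n - 1):
            # Discrete divergence of the shear stress mu * du/dx.
            div_stress = (mu_face[i] * (u[i + 1] - u[i])
                          - mu_face[i - 1] * (u[i] - u[i - 1]))
            u_new[i] = 2 * u[i] - u_old[i] + r2 * div_stress
        u_old, u = u, u_new

    return max(abs(ui) for xi, ui in zip(x, u) if xi >= interface)


if __name__ == "__main__":
    z_rock, z_soft = 2.0, 1.0  # impedance rho * c with rho = 1
    print(f"simulated peak in soft layer: {simulate_layered_pulse():.3f}")
    print(f"plane-wave transmission coefficient: {2 * z_rock / (z_rock + z_soft):.3f}")
```

With the default values the incoming pulse has unit amplitude, so the simulated peak in the soft layer should land close to the theoretical 4/3; small differences come from numerical dispersion and the discrete interface. Real 3D ground motions also depend on basin geometry, topography and the rupture itself, which this toy ignores.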

Ground motion simulations of large earthquakes are gaining acceptance as computational methods improve, computing resources become more powerful and representations of 3D earth structure and earthquake sources become more realistic.

Rodgers adds: “It’s essential to demonstrate that high-performance computing simulations can generate realistic results and our team will work with engineers to evaluate the computed motions, so they can be used to understand the resulting distribution of risk to infrastructure and ultimately to design safer energy systems, buildings and other infrastructure.”

Other authors include Livermore seismologist Arben Pitarka and mathematicians Anders Petersson and Bjorn Sjogreen, along with project leader and structural engineer David McCallen of the University of California Office of the President and LBNL.

This work is part of the DOE’s Exascale Computing Project (ECP). The ECP is focused on accelerating the delivery of a capable exascale computing ecosystem that delivers 50 times more computational science and data analytic application power than possible with DOE HPC systems such as Titan (ORNL) and Sequoia (LLNL), with the goal of launching a U.S. exascale ecosystem by 2021. The ECP is a collaborative effort of two Department of Energy organizations: the DOE Office of Science and the National Nuclear Security Administration.

Simulations were performed using a Computing Grand Challenge allocation on the Quartz supercomputer at LLNL and with an Exascale Computing Project allocation on Cori Phase-2 at the National Energy Research Scientific Computing Center (NERSC) at LBNL.