This is the fourth entry in a five-part insideHPC series that takes an in-depth look at how machine learning, deep learning and AI are being used in the energy industry. Read on to learn more about computing environments options for energy exploration and monitoring.

Download the full report.

Computing Environments Options

The resources needed both for exploration and for monitoring a production environment can be significant. IT departments must weigh the return on investment when acquiring such large computing systems. HPC systems alone can fill entire data centers and consume megawatts of electricity. The power consumption for a desired number of teraflops to petaflops with modern CPUs can be calculated with good accuracy. In addition, with the introduction of accelerated computing from companies such as NVIDIA, the number of flops per watt of electricity has been increasing steadily and is likely to continue to do so.
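As a rough illustration of the power calculation described above, the following minimal sketch converts a performance target into an estimated power draw. The function name, the efficiency figure in GFLOPS per watt and the optional facility overhead factor (`pue`) are assumptions made for illustration, not values taken from the report.

```python
def cluster_power_kw(target_tflops: float,
                     gflops_per_watt: float,
                     pue: float = 1.0) -> float:
    """Estimate the power (in kW) needed to sustain a performance target.

    target_tflops   -- desired sustained performance in teraflops
    gflops_per_watt -- assumed efficiency of the chosen hardware (hypothetical input)
    pue             -- assumed facility overhead multiplier; 1.0 means IT load only
    """
    if target_tflops < 0 or gflops_per_watt <= 0 or pue < 1.0:
        raise ValueError("inputs must be positive and pue must be >= 1.0")
    target_gflops = target_tflops * 1000.0      # TFLOPS -> GFLOPS
    it_watts = target_gflops / gflops_per_watt  # watts drawn by the compute hardware
    return it_watts * pue / 1000.0              # watts -> kilowatts, with overhead


if __name__ == "__main__":
    # Hypothetical example: 1 petaflop (1,000 teraflops) at an assumed 10 GFLOPS/W.
    print(cluster_power_kw(1000.0, 10.0))           # IT load only
    print(cluster_power_kw(1000.0, 10.0, pue=1.5))  # with assumed facility overhead
```

Doubling the assumed flops per watt halves the estimate, which is why efficiency gains from accelerators matter so much at data center scale.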

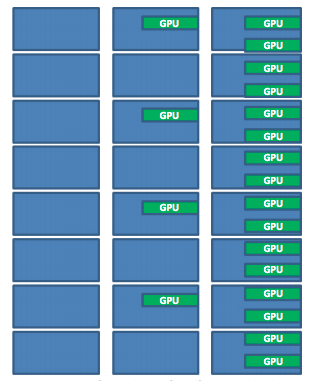

However, there is still the real task of creating productive systems and computing environments of both CPUs and GPUs that are available when scientists require them. Deciding on the right mix of CPUs and GPUs for various workflows using different deep learning models can be a challenge for any technical organization. An organization should first map out which applications require a significant number of CPUs for maximum scaling per cost. Then, look at which applications will perform best with GPUs. Once the ratio of CPUs to GPUs in the cluster has been determined to satisfy user requirements and anticipated workloads, the architecture of the cluster can be determined.
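The mapping exercise described above can be sketched in a few lines of code. This is only an illustrative model under stated assumptions: the `Workload` fields, the `min_speedup` threshold and the 720 hours per node per month are all hypothetical, and a real sizing study would use measured benchmarks and cost data.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpu_node_hours_per_month: float  # demand measured on CPU-only nodes
    gpu_speedup: float               # assumed speedup on a GPU node (1.0 = no benefit)


def plan_mix(workloads, min_speedup=2.0, hours_per_node_month=720.0):
    """Return (cpu_nodes, gpu_nodes) for a list of workloads.

    A workload is placed on GPU nodes only if its assumed speedup reaches
    min_speedup; otherwise it stays on CPU nodes.
    """
    cpu_hours = 0.0
    gpu_hours = 0.0
    for w in workloads:
        if w.gpu_speedup >= min_speedup:
            # GPU nodes finish the same work in fewer node-hours.
            gpu_hours += w.cpu_node_hours_per_month / w.gpu_speedup
        else:
            cpu_hours += w.cpu_node_hours_per_month
    cpu_nodes = math.ceil(cpu_hours / hours_per_node_month)
    gpu_nodes = math.ceil(gpu_hours / hours_per_node_month)
    return cpu_nodes, gpu_nodes


if __name__ == "__main__":
    # Hypothetical workloads, for illustration only.
    demo = [
        Workload("reservoir simulation", 7200.0, 1.0),
        Workload("deep learning training", 14400.0, 10.0),
    ]
    print(plan_mix(demo))
```

The resulting node counts give a starting ratio of CPUs to GPUs, which can then be adjusted for anticipated workloads before the cluster architecture is fixed.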

In the past, analyzing data was limited by the number of CPU flops available in a cluster or in tightly connected systems. However, with the rise in both the performance and the performance per watt of GPUs, the equation has changed. Not all processing can be done on GPU systems, so a balance of CPUs and GPUs is needed for the required workloads. With the added demands that AI techniques place on clusters, more GPU resources per CPU may be needed. A hybrid approach based on the organization’s workloads should be considered for maximum efficiency. In addition, the movement of data between systems and clusters must be understood so that the CPUs and GPUs can be kept at high utilization.

China Petroleum and Chemical Corp. (Sinopec) has developed software that incorporates AI when constructing new plants that are being called “smart manufacturing platforms.”

While creating compute clusters consisting of CPUs and GPUs in a corporate data center is feasible, the option now exists to use these types of systems in a cloud environment. The benefits of cloud computing can be calculated in terms of reduced capital expenditures and operating expenditures while using the latest servers. However, organizations must also integrate the cloud computing environment into their workflow. In the energy exploration market, the impact of transferring terabytes to petabytes of information in and out of a cloud computing facility must not be overlooked. Significant delays and extra costs, depending on the hosting company, can disrupt the workflow of engineers and scientists. Moving only a single part of the workflow to the cloud will likely result in performance that falls short of expectations. Thus, it is important to look at the entire workflow, including data transfer, computation and interpretation of results.
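To make the data movement concern concrete, the sketch below estimates how long a transfer takes and how it affects the end-to-end workflow time. The function names, the link speed, the `efficiency` factor and the example figures are assumptions chosen for illustration; actual throughput and any provider fees vary by hosting company.

```python
def transfer_hours(terabytes: float, gbit_per_s: float, efficiency: float = 0.8) -> float:
    """Hours needed to move `terabytes` (decimal TB) over a link.

    gbit_per_s -- nominal link speed in gigabits per second
    efficiency -- assumed fraction of the nominal speed actually achieved
    """
    if gbit_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    bits = terabytes * 8e12                          # 1 TB = 8e12 bits
    seconds = bits / (gbit_per_s * 1e9 * efficiency)
    return seconds / 3600.0


def workflow_hours(upload_tb: float, compute_hours: float, download_tb: float,
                   gbit_per_s: float, efficiency: float = 0.8) -> float:
    """End-to-end time: upload the data, compute, then download the results."""
    return (transfer_hours(upload_tb, gbit_per_s, efficiency)
            + compute_hours
            + transfer_hours(download_tb, gbit_per_s, efficiency))


if __name__ == "__main__":
    # Hypothetical survey: 100 TB in, 12 hours of compute, 5 TB of results out,
    # over an assumed 10 Gbit/s link.
    print(round(transfer_hours(100.0, 10.0), 1))
    print(round(workflow_hours(100.0, 12.0, 5.0, 10.0), 1))
```

Under these assumed numbers the upload alone takes longer than the computation, which is the kind of result that only shows up when the whole workflow is considered rather than the compute step in isolation.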

Figure 2 – Different clusters for different workloads

Figure 2 shows how clusters can be created for CPU-intensive tasks, for tasks that require a combination of CPUs and GPUs, or for environments where the workloads are GPU-heavy.

DELL EMC

The Dell EMC portfolio of servers, storage, networking, software and services is uniquely positioned to provide cutting-edge technology to industries that require the latest in AI systems and software, and Dell EMC works with leading organizations to bring new capabilities to a wide range of industries. New insights from the vast amount of data now being collected from IoT devices and robotic devices in the energy exploration arena can be achieved with help from Dell EMC experts together with the latest in computer server solutions. With the ability to work in a multi-cloud environment, depending on the needs and preferences of the customer, Dell EMC responds to the most demanding user requirements and is constantly looking at the latest technologies in order to bring energy to market faster, with less environmental impact.

For example, Landmark has partnered with Dell EMC to bring together a combination of hardware and software in an appliance that can be deployed as a cloud solution. The Landmark Earth Appliance is a unique combination of converged and hyperconverged infrastructure based on the VxRail technology and Decision 360 software that brings a very fast time-to-value for customers looking for a complete solution. In the field, Dell EMC and Landmark have created the Landmark Field Appliance, which allows engineers to do analytics based on information generated by thousands of sensors. Real-time analytics, run in the field without having to move the data to a data center, result in a faster understanding of what is happening with complex systems as it actually takes place. This is an amazing combination of hardware and software, tuned for field use.

Over the next few weeks, this insideHPC Guide series will also cover the following topics:

- Opportunities Abound: HPC and Machine Learning for Energy Exploration

- Energy Companies Embrace Deep Learning for Inspections, Exploration & More

- Edge Computing Proves Critical for Drilling Rigs, Pipeline Integrity

- Determining Where & How to Adopt Machine Learning Technology

Download the full report, “Machine Learning in Energy: A Hot Spot in Seismic Processing,” courtesy of Dell EMC and NVIDIA, to learn more about how machine learning is being used to move the energy industry forward.