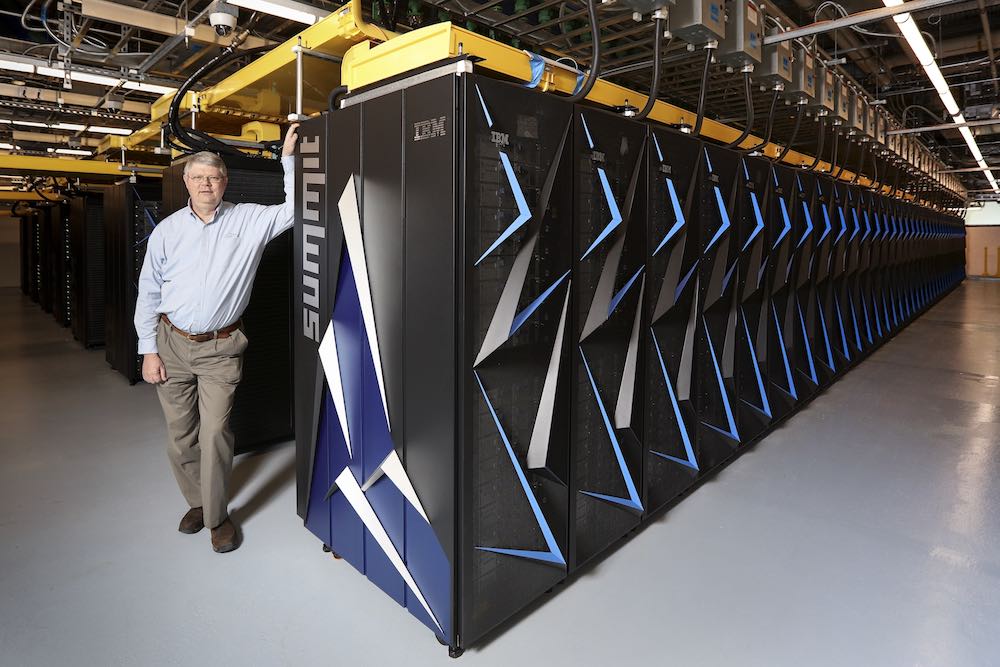

The world’s fastest supercomputer is now up and running production workloads at ORNL.

A year-long acceptance process for the 200-petaflop Summit supercomputer at Oak Ridge National Laboratory (ORNL) is now complete. Acceptance testing ensures that the supercomputer and its file system meet the functionality, performance, and stability requirements agreed upon by the facility and the vendor.

To successfully complete acceptance, the Oak Ridge Leadership Computing Facility (OLCF) worked closely with system vendor IBM to test hundreds of system requirements and fix any resulting hardware, software, and network issues.

“Systems like Summit are usually serial number one. There is nothing like Summit on the market, and this was the first time a system of its scale was tested,” said Verónica Melesse Vergara, OLCF high-performance computing (HPC) support specialist and Summit acceptance lead.

In June and November 2018, Summit ranked first on the biannual TOP500 list of the world’s most powerful supercomputers based on the High Performance Linpack benchmark. Summit’s storage system, Alpine, also ranked first as the world’s fastest storage system on the November IO-500 list.

In this video from ISC 2018, Yan Fisher from Red Hat and Buddy Bland from ORNL discuss Summit, the world’s fastest supercomputer. Red Hat teamed with IBM, Mellanox, and NVIDIA to provide users with a new level of performance for HPC and AI workloads.

Even as Summit debuted on the international rankings list in June, OLCF team members were preparing for full system acceptance. After the team tested system hardware and benchmark requirements in spring 2018, the final months of acceptance focused on preparing to run a full workload of scientific applications.

Acceptance testing was a collaborative effort between OLCF, IBM, and partners NVIDIA, Mellanox, and Red Hat. Summit’s architecture includes 4,608 computing nodes, each comprising two IBM Power9 CPUs and six NVIDIA Volta GPUs connected with a Mellanox InfiniBand interconnect. Summit runs on a Linux operating system from Red Hat. Alpine is a 250-petabyte IBM Spectrum Scale parallel file system.

“All five organizations worked closely together to find, prioritize, and repair issues, which is one of the goals of acceptance: to validate and fix things now so the system is productive for scientists,” said Jim Rogers, National Center for Computational Sciences director of computing and facilities and Summit technical procurement officer (TPO).
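The node figures quoted above imply system-wide component counts that give a sense of how much hardware acceptance had to cover. The following is a minimal arithmetic sketch using only the numbers stated in the article; the function name `component_totals` is made up for illustration.

```python
# Figures quoted in the article.
NODES = 4_608
CPUS_PER_NODE = 2   # IBM Power9 CPUs per node
GPUS_PER_NODE = 6   # NVIDIA Volta GPUs per node


def component_totals(nodes, cpus_per_node, gpus_per_node):
    """Return system-wide CPU and GPU counts, assuming every node is identical."""
    return {"cpus": nodes * cpus_per_node, "gpus": nodes * gpus_per_node}


totals = component_totals(NODES, CPUS_PER_NODE, GPUS_PER_NODE)
print(totals)  # {'cpus': 9216, 'gpus': 27648}
```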

The end of acceptance testing signals the beginning of the machine’s scientific mission. Users from the OLCF Early Science Program and the DOE Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program will begin work on Summit in January 2019.

Step one: functionality

The three main steps of acceptance testing ensure the machine is ready for full production, answering the basic questions: Can the machine work well? Can it successfully run the users’ scientific codes? And can it work well and run scientific codes at full production?

During functionality tests, all system components and features must run successfully or, if they fail, recover as intended.

“Functionality tests basically determine whether the machine works well,” said HPC Operations Group team lead Don Maxwell, who has worked on OLCF acceptance teams for 13 years.

At the end of functionality tests, staff members are certain they can compile and run jobs on the system—an important requirement for beginning the second step of performance tests.

“We make sure all of the software, including the application launcher, the scheduler, compilers, programming models, and more, is performing as expected,” Melesse Vergara said.

Rogers, who assessed whether the machine met acceptance requirements in his role as TPO, said Summit’s acceptance plan was modeled after previous OLCF system acceptance plans, including those for the facility’s Titan and Jaguar supercomputers. However, Summit comes with a new generation of complexities.

“These machines are getting so complicated that we’re putting more of an emphasis on the depth and breadth of testing,” Rogers said. “We now have about 30 sensor points on each node, so we can closely measure and better understand how the machine is running.”

Sensors directly on the node enable OLCF staff to correlate data points such as cooling temperatures or network speed with performance measures, such as data transfer or GPU utilization. In this way, the sensors not only serve a purpose during acceptance; they will also be used for the lifetime of the machine to help operators predict and plan for maintenance.

“Predictive maintenance is just something that comes with machines of this scale,” Rogers said. “The sensors are a long-term provision to make sure the machine operates efficiently with less repair time and a means to prevent failures.”
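One simple way to picture correlating a sensor reading with a performance measure is a Pearson correlation coefficient. The sketch below is only an illustration: the article does not say which statistics OLCF uses, and the readings, the variable names, and the `pearson` helper are all invented for the example.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Made-up sample readings for one node (NOT real Summit data).
coolant_temp_c = [20.0, 21.0, 22.0, 23.0, 24.0]
gpu_utilization = [0.98, 0.97, 0.95, 0.92, 0.90]

r = pearson(coolant_temp_c, gpu_utilization)
print(f"correlation between coolant temperature and GPU utilization: {r:.2f}")
```

A value of `r` near -1 or +1 would flag a relationship worth investigating; a value near 0 would suggest the two measurements move independently in this sample.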

Step two: performance

To meet performance requirements, Summit needed to deliver an average 5-times speedup for scientific applications compared to OLCF’s previous scientific workhorse, the 27-petaflop Titan system.

“We run scientific applications in isolation to obtain a baseline performance metric for that application,” Melesse Vergara said. “We also run several test sizes to better understand performance of each application on the system.”

Early results from Gordon Bell Prize finalists demonstrate that some codes are already seeing speedups well over this mark.
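The fivefold requirement can be pictured as a per-application ratio of Titan runtime to Summit runtime, averaged across applications. The sketch below assumes an arithmetic mean, which the article does not specify; the application names and runtimes are placeholders, not measured results.

```python
REQUIRED_MEAN_SPEEDUP = 5.0  # average speedup over Titan stated in the article


def speedup(baseline_seconds, new_seconds):
    """Speedup of the new system relative to the baseline for one application."""
    return baseline_seconds / new_seconds


def mean_speedup(runtimes):
    """Arithmetic mean of per-application speedups.

    runtimes maps an application name to (titan_seconds, summit_seconds).
    Using the arithmetic mean is an assumption made for this sketch.
    """
    ratios = [speedup(titan, summit) for titan, summit in runtimes.values()]
    return sum(ratios) / len(ratios)


# Hypothetical runtimes in seconds (placeholders, not real benchmark data).
runtimes = {
    "app_a": (1000.0, 250.0),  # 4x
    "app_b": (600.0, 100.0),   # 6x
    "app_c": (900.0, 150.0),   # 6x
}

average = mean_speedup(runtimes)
meets_requirement = average >= REQUIRED_MEAN_SPEEDUP
print(f"mean speedup: {average:.2f}x, requirement met: {meets_requirement}")
```

Note that an average target lets an individual application fall below 5x, as `app_a` does here, as long as others exceed it.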

File system performance is also essential to system performance. Alpine performance requirements included a data transfer speed of 2.5 terabytes per second.

“The file system is the entry and exit point for Summit’s acceptance. It is critical that the file system works in order for users to run on the supercomputer,” said Dustin Leverman, OLCF HPC storage engineer and Alpine acceptance lead.

Step three: stability

To ensure that any one failure among Summit’s many processors and nodes does not impact Summit users, the stability test monitors the resilience of the system under real conditions.

“The stability test gauges how the system is going to operate under high utilization, so we can be confident that it is ready to turn over to users,” Rogers said.

Stability testing is the final and most strenuous leg of acceptance testing because it simulates a realistic workload that floods the system with thousands of jobs from different scientific applications.

“During stability testing, we run jobs on one node all the way up to the full size of the system,” Maxwell said.

To effectively monitor the performance of all these jobs, OLCF uses a “test harness” that tracks the status of each job as it is deployed and executed on the system. The stability test takes place over two weeks. During that period, the functionality and performance tests that were already completed must run again alongside the stability workload to test the resiliency of the system.
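To make the idea of a test harness concrete, here is a toy in-memory sketch that records each job's status and submits jobs at sizes from one node up to the full machine. It is not OLCF's harness: the class names, the status values, and the choice to double the node count at each step are all assumptions for illustration, and nothing here talks to a real scheduler.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    PASSED = "passed"
    FAILED = "failed"


@dataclass
class Job:
    job_id: int
    app: str
    nodes: int
    status: Status = Status.QUEUED


class Harness:
    """Toy tracker: records jobs and their latest known status."""

    def __init__(self):
        self.jobs = {}
        self._next_id = 1

    def submit(self, app, nodes):
        job = Job(self._next_id, app, nodes)
        self.jobs[job.job_id] = job
        self._next_id += 1
        return job.job_id

    def update(self, job_id, status):
        self.jobs[job_id].status = status

    def summary(self):
        """Count of jobs in each status."""
        return Counter(job.status for job in self.jobs.values())


def node_counts(full_size):
    """Job sizes 1, 2, 4, ... below full_size, followed by full_size itself."""
    sizes = []
    n = 1
    while n < full_size:
        sizes.append(n)
        n *= 2
    sizes.append(full_size)
    return sizes


harness = Harness()
ids = [harness.submit("app_a", n) for n in node_counts(4608)]  # "app_a" is a placeholder
harness.update(ids[0], Status.PASSED)
harness.update(ids[-1], Status.FAILED)
print(harness.summary())
```

A real harness would also need to launch jobs through the scheduler, collect their output, and compare results against expected values; this sketch covers only the status-tracking part.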

Now that Summit has passed acceptance, scientists can begin work in earnest. And the OLCF acceptance team?

“We’re already applying lessons learned from Summit to acceptance plans for OLCF’s future Frontier system, just like we did with Jaguar and Titan,” Maxwell said.