This sponsored post explores the intersection of HPC, big data analytics, and AI. The resulting converged software will provide the capabilities researchers need to tackle their business’s next big challenge.

Are we witnessing the convergence of HPC, big data analytics, and AI? (Photo: Shutterstock/Sergey Nivens)

Once, these were separate domains, each with its own system architecture and software stack, but the data deluge is driving their convergence. Traditional big science HPC is looking more like big data analytics and AI, while analytics and AI are taking on the flavor of HPC.

The data deluge is real. In 2018, CERN’s Large Hadron Collider generated over 50 petabytes of data (a petabyte is 1,000 terabytes, or 10¹⁵ bytes), and CERN expects that volume to increase tenfold by 2025. The average Internet user generates over 1 GB of data traffic every day, a smart hospital over 3,000 GB per day, and a manufacturing plant over 1,000,000 GB per day. A single autonomous vehicle is estimated to generate 4,000 GB per day. Every day. Total annual digital data output is predicted to reach or exceed 163 zettabytes (a zettabyte is one sextillion, or 10²¹ bytes) by 2025. This data needs to be analyzed at near-real-time speed and stored where multiple collaborators can easily access it. Extreme performance, storage, and networking: that sounds a lot like HPC.
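To put these volumes in perspective, the short Python sketch below converts a few of the figures into the average sustained data rate they imply. It assumes decimal units (1 GB = 10⁹ bytes) and a perfectly even flow over time; real traffic is burstier, so peak rates would be higher. The helper names are hypothetical, and the CERN figure is averaged over a full calendar year rather than over actual running time.

```python
# Average sustained rates implied by the daily and yearly volumes quoted in the text.
# Assumption: decimal (SI) units, so 1 GB = 10**9 bytes and 1 PB = 10**15 bytes.
SECONDS_PER_DAY = 24 * 60 * 60          # 86,400 s
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY

GB = 10**9
PB = 10**15


def daily_volume_to_mb_per_s(gb_per_day: float) -> float:
    """Average rate in MB/s (10**6 bytes/s) needed to move gb_per_day in one day."""
    return gb_per_day * GB / SECONDS_PER_DAY / 10**6


def yearly_volume_to_mb_per_s(bytes_per_year: float) -> float:
    """Average rate in MB/s if bytes_per_year were spread evenly over 365 days."""
    return bytes_per_year / SECONDS_PER_YEAR / 10**6


daily_sources_gb = {
    "smart hospital": 3_000,
    "autonomous vehicle": 4_000,
    "manufacturing plant": 1_000_000,
}

for source, gb in daily_sources_gb.items():
    print(f"{source:>20}: {daily_volume_to_mb_per_s(gb):10,.1f} MB/s")
print(f"{'LHC (2018, 50 PB)':>20}: {yearly_volume_to_mb_per_s(50 * PB):10,.1f} MB/s")
```

Spread evenly, 4,000 GB per day is roughly 46 MB/s for a single vehicle, one plant’s 1,000,000 GB per day works out to about 11.6 GB/s around the clock, and 50 PB per year averages roughly 1.6 GB/s.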

What characterized “traditional” HPC was achieving extreme performance on computationally complex problems, typically simulations of real-world systems (think explosions, oceanography, global weather hydrodynamics, or even cosmological events like supernovae). This meant very large parallel processing systems with hundreds, even thousands, of dedicated compute nodes and vast multi-layer storage appliances, all connected by high-speed networks.

Workflows that unify artificial intelligence, data analytics, and modeling/simulation will make high-performance computing more essential and ubiquitous than ever. — Patricia Damkroger, manager, Intel Extreme Computing

Big data analytics, as it has come to be known, can be characterized as workflows with datasets that are so large that I/O transfer rates, storage footprints, and secure archiving are primary considerations. Here, I/O consumes a significant portion of the runtime and puts great stress on the filesystem.
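One way to make “I/O consumes a significant portion of the runtime” concrete is a simple runtime breakdown. The sketch below assumes, for simplicity, that reading the data and computing on it happen one after the other rather than overlapping. The function name and the dataset size, bandwidth, and compute time in the example are hypothetical values chosen only for illustration.

```python
def io_share_of_runtime(dataset_bytes: float,
                        read_bandwidth_bytes_per_s: float,
                        compute_seconds: float) -> float:
    """Fraction of total runtime spent reading input.

    Assumes I/O and compute run back to back (no overlap)."""
    io_seconds = dataset_bytes / read_bandwidth_bytes_per_s
    return io_seconds / (io_seconds + compute_seconds)


# Hypothetical job: 100 TB of input read at an aggregate 10 GB/s,
# followed by 2,000 s of computation.
share = io_share_of_runtime(100e12, 10e9, 2_000)
print(f"I/O share of runtime: {share:.0%}")
```

In this hypothetical case reading the input takes 10,000 s, so about 83% of the runtime is I/O, and a faster filesystem would shorten the job far more than faster processors would.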

For artificial intelligence, complex algorithms train models to analyze multiple sources of streaming data to draw inferences and predict events so that real-time actions can be taken.

We’re seeing applications from one domain employing techniques from the others. Look at just one example: Biological and medical science now generate vast streams of data from electron microscopes, genome sequencers, bedside patient monitors, diagnostic medical equipment, wearable health devices, and a host of other sources. Using AI-based analytics on HPC systems, life science researchers can construct and refine robust models that simulate the function and processes of biological structures at cellular and sub-cellular levels. AI analysis of these massive data sets is transforming our understanding of disease, while practical AI solutions in hospitals are already helping clinicians quickly and accurately identify medical conditions and their treatment.

Up to now, the HPC, big data, and AI domains have developed their own widely divergent hardware/software environments. A traditional HPC environment would typically deploy Fortran/C++ hybrid applications with MPI over a large cluster, using Slurm* for large-scale resource management and Lustre* for high-speed remote storage, all on a high-performance network of servers, fabric switches, and appliances.
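The core pattern in such MPI codes is that each process (rank) owns a slice of the problem, works on it locally, and then combines results with a collective operation such as `MPI_Reduce` or `MPI_Allreduce`. The plain-Python sketch below only simulates that pattern inside a single process; `block_range` and `simulated_allreduce_sum` are hypothetical helpers for illustration, not part of any MPI library.

```python
def block_range(n_items: int, n_ranks: int, rank: int) -> range:
    """Indices owned by `rank` when n_items are split as evenly as possible."""
    base, extra = divmod(n_items, n_ranks)
    # The first `extra` ranks each take one additional item.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


def simulated_allreduce_sum(data: list[float], n_ranks: int) -> float:
    """Each simulated rank sums its own block; the partial sums are then combined,
    standing in for what MPI_Allreduce with MPI_SUM does across real processes."""
    partial_sums = [
        sum(data[i] for i in block_range(len(data), n_ranks, rank))
        for rank in range(n_ranks)
    ]
    return sum(partial_sums)


for rank in range(4):
    print(rank, list(block_range(10, 4, rank)))
```

Splitting 10 items over 4 ranks gives blocks of 3, 3, 2, and 2 items, so no rank does more than one item of extra work. In a real MPI program each rank would compute only its own block and the combining step would travel over the interconnect.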

On the other hand, with data analytics and AI environments, you would tend to see Python* and Java* applications using open source Hadoop* and Spark* frameworks over HDFS* local storage and standard networked server components.
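Hadoop MapReduce is built around, and Spark generalizes, the map/shuffle/reduce model: a map step turns each input record into key/value pairs, a shuffle groups the pairs by key (across the network, in a real cluster), and a reduce step combines each group. The plain-Python word count below is a single-process illustration of that model; the function names are hypothetical, and this is not the Hadoop or Spark API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str) -> list[tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs) -> dict[str, list[int]]:
    """Group values by key; a real cluster moves these groups between nodes."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Combine the values for each key, here by summing the counts."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))


print(word_count(["Big data big compute", "data moves"]))
# {'big': 2, 'data': 2, 'compute': 1, 'moves': 1}
```

In PySpark the same computation is usually written as a chain of `flatMap`, `map`, and `reduceByKey` calls on an RDD, with the framework handling the shuffle and the data placement on HDFS.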

As workloads converge, they will require platforms that bring these technologies together. There are already efforts to develop a unified infrastructure with converged software stacks and compute infrastructure. For example, the Intel® Select Solutions for HPC includes solutions for HPC and AI converged clusters. The recently announced Aurora Supercomputer, to be delivered by Intel, will be a converged platform (see Accelerating the Convergence of HPC and AI at Exascale). Intel is also working hard to optimize AI frameworks on CPU-based systems, which is crucial for the performance of converged workflows. Intel Deep Learning Boost, a new AI extension to Intel® Xeon® processors, accelerates AI applications while delivering top HPC performance using features like Intel® AVX-512.
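One of the features behind Intel Deep Learning Boost is the AVX-512 VNNI instruction set, whose `VPDPBUSD` instruction multiplies four unsigned 8-bit values by four signed 8-bit values and adds the sum of the products to a 32-bit accumulator in a single step. The pure-Python sketch below emulates that arithmetic for one 32-bit lane to show how a low-precision integer dot product approximates the floating-point one. The simple per-tensor quantization scheme, the helper names, and the example vectors are assumptions for illustration, not how any particular Intel library quantizes models.

```python
def quantize(values: list[float], scale: float, lo: int, hi: int) -> list[int]:
    """Linear quantization: q = clamp(round(x / scale), lo, hi)."""
    return [max(lo, min(hi, round(v / scale))) for v in values]


def dpbusd_lane(acc: int, a_u8: list[int], b_s8: list[int]) -> int:
    """Emulate one 32-bit lane of VPDPBUSD: acc + sum of four u8*s8 products,
    wrapped to a signed 32-bit result (the non-saturating form)."""
    assert len(a_u8) == len(b_s8) == 4
    total = acc + sum(a * b for a, b in zip(a_u8, b_s8))
    return (total + 2**31) % 2**32 - 2**31


def int8_dot(a_u8: list[int], b_s8: list[int]) -> int:
    """Integer dot product built from 4-element VPDPBUSD-style steps."""
    assert len(a_u8) % 4 == 0 and len(a_u8) == len(b_s8)
    acc = 0
    for i in range(0, len(a_u8), 4):
        acc = dpbusd_lane(acc, a_u8[i:i + 4], b_s8[i:i + 4])
    return acc


# Hypothetical example: non-negative activations (e.g. after ReLU) and signed weights.
x = [0.5, 1.0, 0.25, 0.75, 0.1, 0.0, 0.9, 0.3]
w = [0.2, -0.4, 0.6, -0.1, 0.05, 0.3, -0.2, 0.1]

scale_x = max(x) / 255                   # activations -> unsigned 8-bit [0, 255]
scale_w = max(abs(v) for v in w) / 127   # weights -> signed 8-bit [-127, 127]

x_q = quantize(x, scale_x, 0, 255)
w_q = quantize(w, scale_w, -128, 127)

exact = sum(a * b for a, b in zip(x, w))
approx = int8_dot(x_q, w_q) * scale_x * scale_w
print(f"floating point: {exact:.4f}  int8 emulation: {approx:.4f}")
```

The integer multiply-adds themselves are exact; the only error comes from rounding the inputs during quantization, which is why the two results agree closely while the integer path does four multiply-adds per 32-bit lane per instruction.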

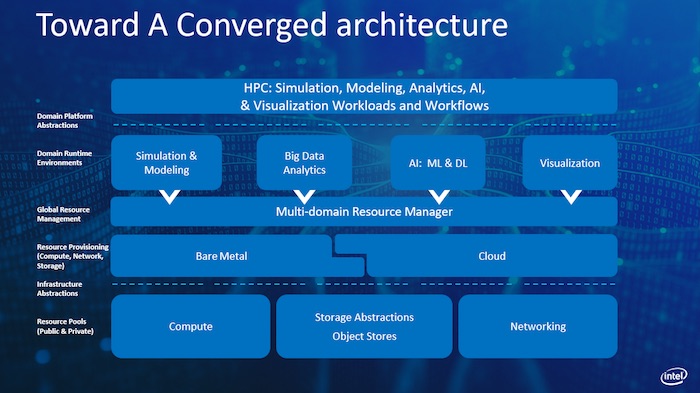

For systems to converge, they must handle the full range of scientific and technical computing applications—from equation-driven, batch-processing simulations to the automated, data-fueled analysis needed to support human decision-making in real time. And, they must be easy to use. Figure 1 shows the framework that Intel® and its collaborators are using to enable converged, exascale computing.

Figure 1: The convergence of HPC, data analysis, and AI requires a unified software stack and a consistent architecture. (Image courtesy of Intel)

While traditional HPC platforms focused on processor and interconnect performance, converged platforms will need to balance processor, memory, interconnect, and I/O performance while providing the scalability, resilience, density, and power efficiency these workloads require. With the convergence of AI, data analytics, and modeling/simulation, these converged HPC platforms and solution stacks will give researchers the capabilities they need to make progress on their toughest challenges, and give enterprises the means to deliver innovative products and services.
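A common way to reason about the balance between processor and memory performance is the roofline model: a kernel’s attainable performance is capped either by the processor’s peak compute rate or by memory bandwidth multiplied by the kernel’s arithmetic intensity (floating-point operations per byte moved), whichever is lower. The machine figures in the sketch below are hypothetical and do not describe any real system; the byte count for the triad-like kernel ignores write-allocate traffic.

```python
def attainable_flops(arithmetic_intensity: float,
                     peak_flops: float,
                     memory_bandwidth_bytes_per_s: float) -> float:
    """Roofline model: min(peak compute, intensity * memory bandwidth)."""
    return min(peak_flops, arithmetic_intensity * memory_bandwidth_bytes_per_s)


# Hypothetical node: 3 TFLOP/s peak compute, 200 GB/s memory bandwidth.
PEAK = 3e12
BW = 200e9
ridge_point = PEAK / BW  # FLOP/byte at which a kernel becomes compute bound

# A STREAM-triad-like kernel a[i] = b[i] + s * c[i] in double precision:
# 2 FLOPs per 24 bytes moved (read b and c, write a).
triad_intensity = 2 / 24

print(f"ridge point: {ridge_point:.1f} FLOP/byte")
print(f"triad-like kernel: {attainable_flops(triad_intensity, PEAK, BW) / 1e9:.1f} GFLOP/s")
print(f"100 FLOP/byte kernel: {attainable_flops(100, PEAK, BW) / 1e9:.1f} GFLOP/s")
```

On this hypothetical node the triad-like kernel tops out near 16.7 GFLOP/s, well under 1% of peak, so adding compute would not speed it up; only more memory bandwidth would. Data-heavy analytics and AI stages often sit on that memory-bound side of the ridge point.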

This post is based on the article The Intersection of AI, HPC and HPDA: How Next-Generation Workflows Will Drive Tomorrow’s Breakthroughs by Patricia Damkroger of the Intel® Datacenter Group that appeared recently on Top500.org.

For more information:

- Intel® Deep Learning Boost

- Explore resources available for popular AI frameworks optimized on Intel® architecture, including installation guides and other learning material

- Developers can create applications that take advantage of Intel® Deep Learning Boost through available optimized frameworks and libraries, such as Intel® Math Kernel Library for Deep Neural Networks (Intel® MKL-DNN)