In this special guest feature, Dan Olds from OrionX shares first-hand coverage of the Student Cluster Competition at the recent ISC 2019 conference.

I’m constantly amazed by how many different system configurations we see in Student Cluster Competitions. Given that every team has to use hardware that’s currently available on the market and stay under the 3,000-watt power cap, you’d think the systems would gravitate toward a common configuration. You’d be wrong.

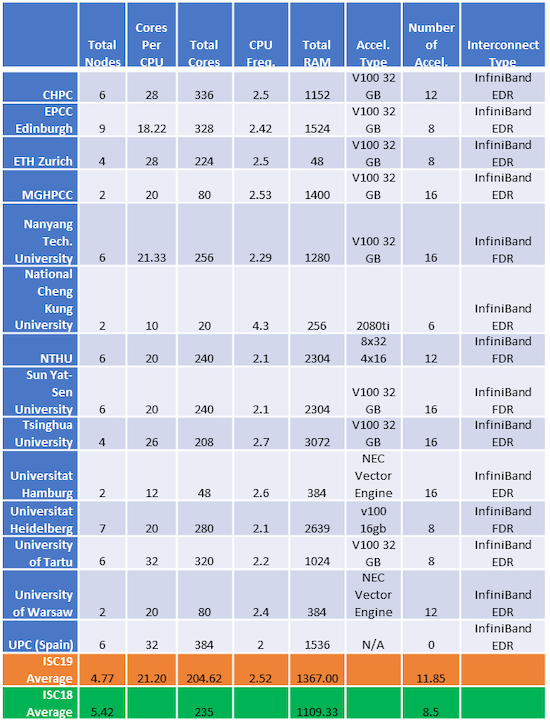

Here’s what each team came up with for the ISC19 cluster competition:

Highlights:

- Scotland’s EPCC has the largest configuration by node count, with nine nodes. At the other end of the scale, Warsaw, Hamburg, NCKU, and Team Boston are each running just two nodes. The Boston machine is particularly noteworthy: it has only 80 CPU cores, but it sports 1.4 TB of memory and sixteen 32 GB NVIDIA V100 GPUs. That configuration is aimed squarely at taking home the LINPACK trophy.

- Hamburg and Warsaw are running NEC Aurora vector machines, which are Xeon-based servers equipped with eight specialized vector accelerators. These teams have done great work simply getting the competition applications to run on this brand-new architecture. Kudos to them.

- Chinese server vendor Inspur is sponsoring four teams at ISC19, the most of any vendor: Tsinghua, Taiwan’s National Tsing Hua, Sun Yat-Sen, and Heidelberg.

- Node counts are down this year, as are total CPU cores. This continues a trend that started around 2012 with the advent of GPUs: as more and more applications become GPU-enabled, students are spending more of their power budget on accelerators and less on CPUs. The average team in the 2019 field has a whopping three more GPUs than the average team in 2018, an increase of nearly 40%.

- We also see a significant rise in system memory, from 1,109 TB to 1,367 TB, an increase of roughly 23%.

So will small, GPU-packed configurations win out? Or will the larger systems take home the awards? We’ll reveal detailed results in upcoming posts, so stay tuned.

Dan Olds is an Industry Analyst at OrionX.net. An authority on technology trends and customer sentiment, Dan Olds is a frequently quoted expert in industry and business publications such as The Wall Street Journal, Bloomberg News, Computerworld, eWeek, CIO, and PCWorld. In addition to server, storage, and network technologies, Dan closely follows the Big Data, Cloud, and HPC markets. He writes the HPC Blog on The Register, co-hosts the popular Radio Free HPC podcast, and is the go-to person for coverage and analysis of the supercomputing industry’s Student Cluster Challenge.
