Over at TACC, Jorge Salazar writes that researchers are using XSEDE supercomputers to model multi-fault earthquakes in the Brawley fault zone, which links the San Andreas and Imperial faults in Southern California. Their work could help predict the behavior of earthquakes that may affect millions of people’s lives and property.

Some of the world’s most powerful earthquakes involve multiple faults, and scientists are using supercomputers to better predict their behavior. Multi-fault earthquakes can span fault systems tens to hundreds of kilometers long, with ruptures propagating from one segment to another. During the last decade, scientists have observed several cases of this complex type of earthquake. Major examples include the magnitude (abbreviated M) 7.2 2010 Darfield earthquake in New Zealand; the M7.2 2010 El Mayor–Cucapah earthquake in Mexico, immediately south of the US-Mexico border; the M8.6 2012 Indian Ocean earthquake; and, perhaps the most complex of all, the M7.8 2016 Kaikoura earthquake in New Zealand.

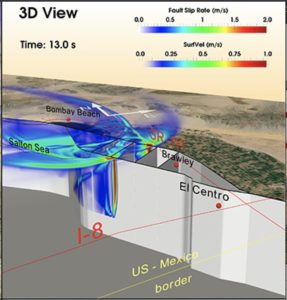

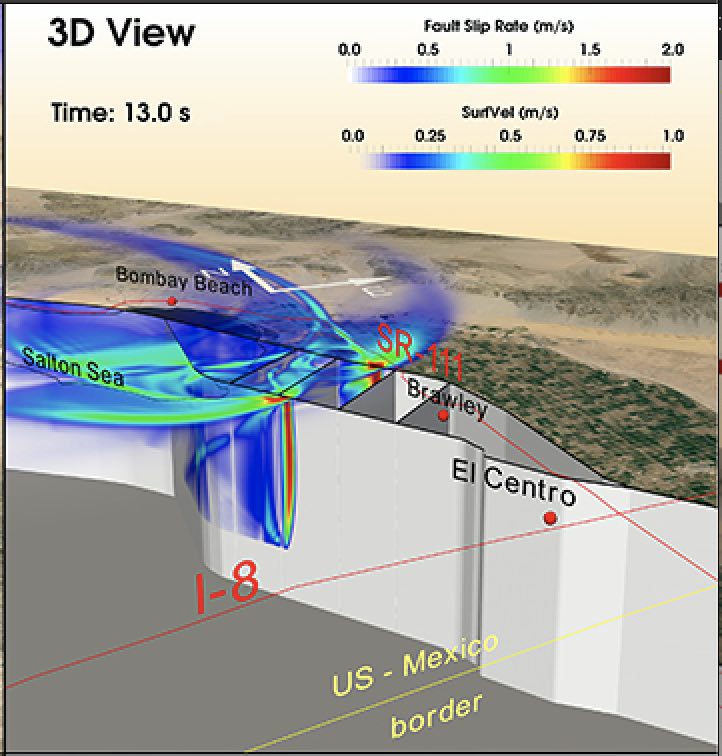

“The main findings of our work concern the dynamic interactions of a postulated network of faults in the Brawley seismic zone in Southern California,” said Christodoulos Kyriakopoulos, a research geophysicist at the University of California, Riverside. He is the lead author of a study published in April 2019 in the Journal of Geophysical Research: Solid Earth, a journal of the American Geophysical Union. “We used physics-based dynamic rupture models that allow us to simulate complex earthquake ruptures using supercomputers. We were able to run dozens of numerical simulations, and documented a large number of interactions that we analyzed using advanced visualization software,” Kyriakopoulos said.

A dynamic rupture model lets scientists study the fundamental physical processes that take place during an earthquake. With this type of model, supercomputers can simulate the interactions between different earthquake faults. For example, the models show how seismic waves travel from one fault to another and how they influence the stability of that second fault. In general, Kyriakopoulos said, these models are very useful for investigating big earthquakes of the past and, perhaps more importantly, possible earthquake scenarios of the future.
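The article does not say which friction law the Brawley models used. As a general illustration of the kind of physics a dynamic rupture code evaluates at each point on a fault, the sketch below implements the linear slip-weakening law, a common textbook choice in which friction drops from a static value to a dynamic value over a critical slip distance. It is a minimal Python sketch under that assumption; the function names and parameter values are hypothetical and are not taken from the study or from FaultMod.

```python
def slip_weakening_friction(slip, mu_s, mu_d, d_c):
    """Friction coefficient under a linear slip-weakening law (illustrative).

    Friction falls linearly from the static value mu_s to the dynamic value
    mu_d as slip grows from 0 to the critical slip distance d_c, then stays
    at mu_d for any larger slip.
    """
    if d_c <= 0:
        raise ValueError("critical slip distance d_c must be positive")
    if slip < 0:
        raise ValueError("slip must be non-negative")
    if slip >= d_c:
        return mu_d
    return mu_s - (mu_s - mu_d) * slip / d_c


def fault_strength(slip, normal_stress, mu_s, mu_d, d_c):
    """Shear strength of a fault point, in the same units as normal_stress."""
    return slip_weakening_friction(slip, mu_s, mu_d, d_c) * normal_stress


def is_slipping(shear_stress, normal_stress, slip, mu_s, mu_d, d_c):
    """True if the applied shear stress reaches the current fault strength."""
    return shear_stress >= fault_strength(slip, normal_stress, mu_s, mu_d, d_c)


if __name__ == "__main__":
    # Hypothetical values: mu_s = 0.6, mu_d = 0.3, d_c = 0.4 m, 100 MPa normal stress.
    for slip in (0.0, 0.2, 0.4, 1.0):
        print(slip, fault_strength(slip, 100.0, 0.6, 0.3, 0.4))
```

With these illustrative numbers, friction halfway to the critical distance is about 0.45, so the fault's strength at 100 MPa normal stress has dropped from 60 MPa to roughly 45 MPa. This weakening is what lets a rupture, once started, keep running along a fault and possibly jump to a neighboring one.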

The numerical model Kyriakopoulos developed consists of two main components. First is a finite element mesh that implements the complex network of faults in the Brawley seismic zone. “We can think of that as a discretized domain, or a discretized numerical world that becomes the base for our simulations. The second component is a finite element dynamic rupture code, known as FaultMod (Barall et al., 2009) that allows us to simulate the evolution of earthquake ruptures, seismic waves, and ground motion with time,” Kyriakopoulos said. “What we do is create earthquakes in the computer. We can study their properties by varying the parameters of the simulated earthquakes. Basically, we generate a virtual world where we create different types of earthquakes. That helps us understand how earthquakes in the real world are happening.”

“The model helps us understand how faults interact during earthquake rupture,” he continued. “Assume an earthquake starts at point A and travels towards point B. At point B, the earthquake fault bifurcates, or splits in two parts. How easy would it be for the rupture, for example, to travel on both segments of the bifurcation, versus taking just one branch or the other? Dynamic rupture models help us to answer such questions using basic physical laws and realistic assumptions.”

Modeling realistic earthquakes on a computer isn’t easy. Kyriakopoulos and his collaborators faced three main challenges. “The first challenge was the implementation of these faults in the finite element domain, in the numerical model. In particular, this system of faults consists of an interconnected network of larger and smaller segments that intersect each other at different angles. It’s a very complicated problem,” Kyriakopoulos said.

The second challenge was to run dozens of large computational simulations. “We had to investigate as much as possible a very large part of parameter space. The simulations included the prototyping and the preliminary runs for the models. The Stampede supercomputer at TACC was our strong partner in this first and fundamental stage in our work, because it gave me the possibility to run all these initial models that helped me set my path for the next simulations.” The third challenge was to use suitable tools to visualize the 3-D simulation results, which in their raw form are simply huge arrays of numbers. Kyriakopoulos did that by generating photorealistic renderings of the rupture simulations with the freely available ParaView software.
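Exploring a parameter space with dozens of runs usually starts from an explicit list of run configurations. The sketch below shows one simple way to enumerate a full factorial sweep in Python. The parameter names (`hypocenter`, `stress_ratio`, `d_c_m`) and their values are hypothetical placeholders, since the article does not describe the study's actual parameters or how its jobs were set up.

```python
from itertools import product

# Hypothetical parameter grid; the study's real parameter space is not given in the article.
PARAMETER_GRID = {
    "hypocenter": ["north", "central", "south"],  # where rupture is nucleated
    "stress_ratio": [0.5, 1.0, 1.5],              # illustrative initial-stress setting
    "d_c_m": [0.2, 0.4],                          # illustrative critical slip distance (m)
}


def build_runs(grid):
    """Return one dict per combination of parameter values (full factorial sweep)."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


def run_label(params):
    """Build a short, file-name-friendly label for one run (keys sorted for stability)."""
    return "_".join(f"{key}-{value}" for key, value in sorted(params.items()))


if __name__ == "__main__":
    runs = build_runs(PARAMETER_GRID)
    print(f"{len(runs)} runs")
    for params in runs[:3]:
        print(run_label(params))
```

This grid yields 3 × 3 × 2 = 18 configurations, and each label could name an output directory or job script. A full factorial grid is the simplest design, but its size grows multiplicatively with every parameter added, which is one reason early prototyping runs help narrow down which parts of the space deserve full-scale simulations.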

To overcome these challenges, Kyriakopoulos and colleagues used the resources of XSEDE, the NSF-funded Extreme Science and Engineering Discovery Environment. They used the Stampede supercomputer at the Texas Advanced Computing Center (TACC) and Comet at the San Diego Supercomputer Center (SDSC). Kyriakopoulos’ related research includes XSEDE allocations on TACC’s Stampede2 system.

“Approximately one-third of the simulations for this work were done on Stampede, specifically, the early stages of the work,” Kyriakopoulos said. “I would have to point out that this work was developed over the last three years, so it’s a long project. I would like to emphasize, also, how the first simulations, again, the prototyping of the models, are very important for a group of scientists that have to methodically plan their time and effort. Having available time on Stampede was a game-changer for me and my colleagues, because it allowed me to set the right conditions for the entire set of simulations. To that, I would like to add that Stampede and in general XSEDE is a very friendly environment and the right partner to have for large-scale computations and advanced scientific experiments.”

The team also briefly used SDSC’s Comet in this research, mostly for test runs and prototyping. “My overall experience, and mostly based on other projects, with SDSC is very positive. I’m very satisfied with the interaction with the support team that was always very fast in responding to my emails and requests for help. This is very important for an ongoing investigation, especially in the first stages where you are making sure that your models work properly. The efficiency of the SDSC support team kept my optimism very high and helped me think positively for the future of my project.”

XSEDE had a big impact on this earthquake research. “The XSEDE support helped me optimize my computational work and organize better the scheduling of my computer runs. Another important aspect is the resolution of problems related to the job scripting and selecting the appropriate resources (e.g., amount of RAM and number of nodes). Based on my overall experience with XSEDE I would say that I saved 10-20% of personal time because of the way XSEDE is organized,” Kyriakopoulos said.

“My participation in XSEDE gave a significant boost in my modeling activities and allowed me to explore better the parameter space of my problem. I definitely feel part of a big community that uses supercomputers and has a common goal, to push forward science and produce innovation,” Kyriakopoulos said.

Looking at the bigger scientific context, Kyriakopoulos said that their research has contributed towards a better understanding of multi-fault ruptures, which could lead to better assessments of the earthquake hazard. “In other words, if we know how faults interact during earthquake ruptures, we can be better prepared for future large earthquakes—in particular, how several fault segments could interact during an earthquake to enhance or interrupt major ruptures,” Kyriakopoulos said.
