Researchers are using Deep Learning techniques on DOE supercomputers to help develop fusion energy.

For decades, scientists have sought to control nuclear fusion—the energy that powers the sun and other stars—by developing massive fusion reactors to produce and contain plasma, with the goal of mirroring the astronomically high pressure and temperature conditions of celestial objects.

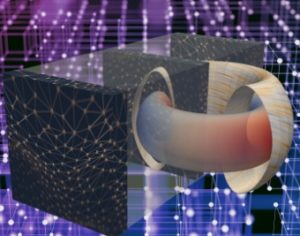

To ensure plasma—the fourth fundamental state of matter—retains its heat and does not interact with materials in the containment vessel, researchers employ doughnut-shaped fusion devices called tokamaks, which use magnetic fields to trap fusion reactions in place. However, large-scale plasma instabilities called disruptions can interfere with this process.

During disruptions, plasma rapidly escapes from the confined area and reaches the walls of the tokamak. In addition to stopping the reaction, this contact transfers intense levels of heat, which can cause serious or irreparable damage to the reactor.

A team of researchers led by Bill Tang of the US Department of Energy’s (DOE’s) Princeton Plasma Physics Laboratory (PPPL) and Princeton University recently tested its Fusion Recurrent Neural Network (FRNN) code on various high-performance computing (HPC) systems, including the 27-petaflop Titan and the 200-petaflop Summit, the world’s most powerful and smartest supercomputer for open science.

“We aim to accurately predict the potential for disruptive events before they occur, as well as understand the reasons why they happen in the first place,” Tang said.

Both Titan and Summit are located at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility at DOE’s Oak Ridge National Laboratory. The team’s results are published in Nature.

Going green for good

Two fuel sources used in fusion reactions are deuterium and tritium—both isotopes of hydrogen—that produce plasma when exposed to extreme pressures and temperatures. In a tokamak, the hydrogen nuclei then fuse together to create helium and neutrons, releasing immense amounts of energy that researchers could exploit to efficiently produce electricity.
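For reference, the deuterium-tritium reaction described above is usually written as follows. The energy figures are standard textbook values rather than numbers taken from this article:

$$
{}^{2}_{1}\mathrm{H} + {}^{3}_{1}\mathrm{H} \;\rightarrow\; {}^{4}_{2}\mathrm{He}\,(3.5\ \mathrm{MeV}) + \mathrm{n}\,(14.1\ \mathrm{MeV}), \qquad E_{\text{total}} \approx 17.6\ \mathrm{MeV}
$$

Most of the released energy is carried by the neutron, which is not confined by the tokamak's magnetic field.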

“One of the biggest grand challenges in science is to deliver sustainable, clean energy, and many scientists dream of harnessing the power of fusion energy for use on Earth,” Tang said.

Natural fuel sources for fusion reactions are abundant around the world, from hydrogen in seawater to lithium in soil, and fusion reactions using such fuels would not emit carbon dioxide or produce long-lasting radioactive waste. Thus, a reliable way to predict and mitigate disruptions could accelerate the adoption of fusion as an environmentally friendly, virtually unlimited source of energy.

“In short, fusion is the perfect energy source for the future,” said lead author Julian Kates-Harbeck, a PhD candidate at Harvard University who worked on the project through the DOE Computational Science Graduate Fellowship (CSGF) program. “If we make this possibility a reality, fusion energy could support the world for millions of years.”

Racing the clock

Disruptions take place in the blink of an eye, so earlier warning times are essential. Because traditional equation-based simulation methods cannot make these predictions fast enough, researchers are turning to data-driven artificial intelligence (AI) methods such as machine learning, in which systems analyze data and make decisions based on previous experiences.

Although classical machine learning methods effectively predict disruptions on today’s machines, even more precision will be necessary for future machines such as the ITER tokamak being built in France. When complete, ITER will be the largest fusion reactor in the world.

“The problem is that a bigger reactor has a larger volume of energy stored in the plasma and less surface area to capture disruptions if they occur,” Kates-Harbeck said. “Larger machines will be better at trapping fusion energy, but disruptions will be a much more severe problem than they are now.”

ITER will require 95 percent of disruption predictions to be correct and will not be able to afford many false alarms, which waste precious time and resources. To provide enough time for the implementation of mitigation strategies, algorithms will need to sound the alarm at least 30 milliseconds before a disruption occurs.
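One way to make these requirements concrete is to score a predictor's alarms shot by shot. The sketch below is illustrative only and is not taken from FRNN: the `Shot` record, the `score_alarms` function, and the reading of "95 percent correct" as a true-positive rate on disruptive shots are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Shot:
    """Hypothetical record of one experimental run (times in milliseconds)."""
    disruption_time_ms: Optional[float]  # None if the shot did not disrupt
    alarm_time_ms: Optional[float]       # None if the predictor never raised an alarm


def score_alarms(shots: List[Shot], min_warning_ms: float = 30.0) -> Tuple[float, float]:
    """Return (true_positive_rate, false_positive_rate).

    A disruptive shot counts as caught only if the alarm came at least
    `min_warning_ms` before the disruption. Any alarm on a
    non-disruptive shot counts as a false alarm.
    """
    disruptive = [s for s in shots if s.disruption_time_ms is not None]
    quiet = [s for s in shots if s.disruption_time_ms is None]

    caught = sum(
        1
        for s in disruptive
        if s.alarm_time_ms is not None
        and s.disruption_time_ms - s.alarm_time_ms >= min_warning_ms
    )
    false_alarms = sum(1 for s in quiet if s.alarm_time_ms is not None)

    tpr = caught / len(disruptive) if disruptive else 0.0
    fpr = false_alarms / len(quiet) if quiet else 0.0
    return tpr, fpr


# Made-up example data, not experimental results.
shots = [
    Shot(disruption_time_ms=1000.0, alarm_time_ms=950.0),   # 50 ms warning: caught
    Shot(disruption_time_ms=1200.0, alarm_time_ms=1185.0),  # 15 ms warning: too late
    Shot(disruption_time_ms=None, alarm_time_ms=None),      # quiet shot, no alarm
    Shot(disruption_time_ms=None, alarm_time_ms=400.0),     # false alarm
]
tpr, fpr = score_alarms(shots)
print(f"caught in time: {tpr:.0%}, false alarms: {fpr:.0%}")
```

Under this reading, a predictor would need `tpr` of at least 0.95 at the 30-millisecond threshold while keeping `fpr` low; the article does not give a numerical limit for false alarms.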

“With that level of accuracy and that amount of time, people running the machine would have time to either mitigate the disruption by cooling the plasma or even by finding a way to avoid it entirely,” Tang said.

A deep learning milestone

To preemptively tackle this challenge, Kates-Harbeck developed FRNN, a novel AI resource designed to predict disruptions; the deep learning code already comes close to meeting ITER’s requirements. Deep learning is a specialized type of machine learning that uses large artificial neural networks to learn from data.

Unlike classical machine learning methods, FRNN—the first deep learning code applied to disruption prediction—can analyze data with many different variables such as the plasma current, temperature, and density. Using a combination of recurrent neural networks and convolutional neural networks, FRNN observes thousands of experimental runs called “shots,” both those that led to disruptions and those that did not, to determine which factors cause disruptions.
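To give a rough sense of how convolutional and recurrent layers can be combined on multi-signal time series, here is a minimal NumPy sketch. It is not the FRNN implementation: the split into scalar signals and one-dimensional profiles, the layer sizes, the plain tanh recurrent cell, the random untrained weights, and every name in the code are assumptions chosen for illustration.

```python
import numpy as np


def conv1d_features(profile: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Slide each kernel along a 1D profile and keep the strongest response."""
    feats = []
    for k in kernels:
        # Reversing the kernel turns np.convolve into cross-correlation.
        response = np.convolve(profile, k[::-1], mode="valid")
        feats.append(response.max())  # global max pooling
    return np.array(feats)


def rnn_alarm_scores(scalars: np.ndarray, profiles: np.ndarray, params: dict) -> np.ndarray:
    """Return one disruption score in (0, 1) per time step.

    scalars:  (T, n_scalar) array, e.g. plasma current and similar signals
    profiles: (T, n_points) array, a hypothetical 1D profile at each step
    """
    h = np.zeros(params["W_h"].shape[0])  # recurrent hidden state
    scores = []
    for x_t, p_t in zip(scalars, profiles):
        # Convolutional part: summarize the profile into a few features.
        feats = np.concatenate([x_t, conv1d_features(p_t, params["kernels"])])
        # Recurrent part: mix the new features with memory of earlier steps.
        h = np.tanh(params["W_x"] @ feats + params["W_h"] @ h + params["b"])
        logit = params["w_out"] @ h + params["b_out"]
        scores.append(1.0 / (1.0 + np.exp(-logit)))  # sigmoid
    return np.array(scores)


# Random, untrained weights and synthetic inputs, for shape-checking only.
rng = np.random.default_rng(0)
T, n_scalar, n_points, n_kernels, width, hidden = 50, 3, 16, 4, 5, 8
params = {
    "kernels": rng.normal(size=(n_kernels, width)),
    "W_x": rng.normal(scale=0.3, size=(hidden, n_scalar + n_kernels)),
    "W_h": rng.normal(scale=0.3, size=(hidden, hidden)),
    "b": np.zeros(hidden),
    "w_out": rng.normal(scale=0.3, size=hidden),
    "b_out": 0.0,
}
scalars = rng.normal(size=(T, n_scalar))
profiles = rng.normal(size=(T, n_points))

scores = rnn_alarm_scores(scalars, profiles, params)
print(scores.shape)
```

In a trained model of this kind, the weights would be fitted on labeled shots so that the score rises as a disruption approaches, and an alarm would fire when the score crosses a chosen threshold.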

“When the code learns from many examples of the same patterns from this data, it can then make a more accurate prediction about whether a disruption is likely to occur,” Kates-Harbeck said.

After analyzing data from the DIII-D National Fusion Facility in San Diego, the largest tokamak in the United States, FRNN successfully predicted disruptions on the largest tokamak in the world, the Joint European Torus Facility in the United Kingdom.

This accomplishment makes FRNN the first code to make reliable predictions for devices not seen during training. Such cross-machine generalization will be crucial for operating massive reactors like ITER, which will be able to withstand only a handful of disruptions throughout its entire lifetime.

Super scalability

The team initially ran FRNN on Tiger, an HPC cluster at Princeton University, then turned to some of the most powerful CPU-GPU hybrid systems in the world to further test, optimize, and improve the code. Alexey Svyatkovskiy of Princeton University, a coauthor of the Nature paper, led this effort.

After running FRNN on 6,000 of Titan’s NVIDIA Tesla K20X GPUs, the researchers determined that porting their versatile code from a university cluster to a leadership-class supercomputer did not hamper scalability or speed.

“Titan was invaluable for large scaling tests to see how close we could get to reaching solutions with significantly more computing power,” Kates-Harbeck said. “We found that our code has the ability to analyze larger datasets and handle even bigger problems than what we had tackled up to that point.”

Next, the team ran FRNN on the TSUBAME 3.0 supercomputer at the Tokyo Institute of Technology’s Global Scientific Information and Computing Center. Using this system’s NVIDIA Tesla P100 GPUs, the researchers found that FRNN could potentially push the window of disruption prediction from 30 milliseconds to 50 or even 100 milliseconds, which would provide more time to introduce mitigation methods.

Having replicated these results on Summit, the researchers plan to conduct additional experiments on the OLCF’s flagship system.

Currently, they are running FRNN on the AI Bridging Cloud Infrastructure, a dedicated AI supercomputer at the National Institute of Advanced Industrial Science and Technology in Japan.

Eventually, the researchers hope to move beyond predicting disruptions to preventing them from happening in the first place.

“With powerful predictive capabilities, we can move from disruption prediction to control, which is the holy grail in fusion,” Tang said. “It’s just like in medicine: the earlier you can diagnose a problem, the better chance you have of solving it.”

Source: DOE

Dr. Bishop used neural networks on an analog computer to control a fusion device fifty years ago

A fusion reactor (and many other processes) should be operated in an adaptive, recurrent way. If the process is unstable, chaotic, or nonrecurrent, then it must be stabilized or stopped. See my papers in the Journal of Fusion Energy, Neural Computing & Applications, and Int. J. Mol. Theor. Phys. Danilo Rastovic (Zagreb).