In this special guest feature, Dan Olds from OrionX shares first-hand coverage of the Student Cluster Competition at the recent ISC 2019 conference.

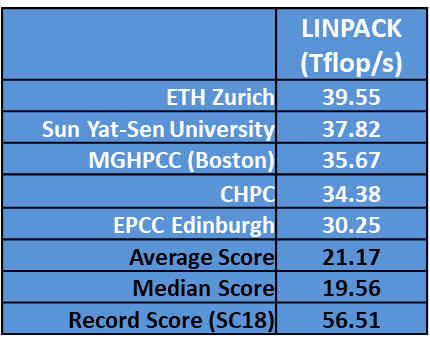

The benchmark results from the recently concluded ISC19 Student Cluster Competition have been compiled, sliced, diced, and analyzed senseless. As you cluster comp fanatics know, this year’s student teams were required to run LINPACK, HPCG, and HPCC as part of the ISC19 competition. The LINPACK run determines the Highest LINPACK award but doesn’t otherwise count toward the teams’ final scores. HPCG accounts for 10% of each team’s overall score, as does HPCC. In this article we’ll look at the LINPACK and HPCG results; we’ll cover the detailed HPCC outcomes in our next story.

As the chart shows, newcomer ETH Zurich took home the Highest LINPACK trophy, which is quite a feather in their Swiss hats. Teams seldom win a major award in their first outing.

Sun Yat-Sen was a close second with their score of 37.83 Tflop/s, which isn’t unusual for them – they’ve won two LINPACK awards in previous competitions.

MGHPCC (Team Boston) took home third by tallying 35.67 Tflop/s on their LINPACK run. This was the team I was betting on to take the LINPACK competition and perhaps set a new student HPL record. They had the perfect configuration for a record LINPACK run: a slim dual-node config, 16 NVIDIA V100 32GB GPUs, and a whopping 1.4 TB of memory. But, alas, it wasn’t to be: the team had problems with their interconnect and couldn’t get everything cooking in time to turn in a huge score.

CHPC and Edinburgh get honorable mentions for their LINPACK scores, finishing well above the average and median scores of the field.

Typically, the team with the most GPUs wins LINPACK, but that wasn’t the case this time. ETH Zurich sported only eight V100s to accompany their four nodes and 1.5 TB of memory. By our calculations, Zurich had the ninth-most-powerful configuration in the competition, but still managed to come out at the top of the LINPACK pack.

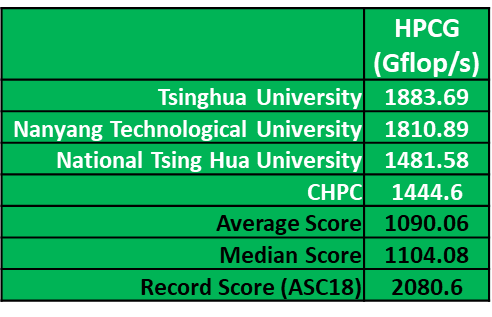

While Tsinghua didn’t manage to place on LINPACK, they came back to lead the field on HPCG with a score of 1.8 Tflop/s. Even though they had what we evaluated as the most powerful cluster in the competition, they still fell well short of the record of 2,080 GFlop/s, established by, guess who, Tsinghua at an ASC competition.

Nanyang Tech turned in a highly competitive 1,810.89 GFlop/s result, which put them pretty close to Tsinghua’s winning score, but not quite close enough to get over that mountain. Taiwan’s NTHU and South Africa’s CHPC took home third place and an honorable mention, respectively.

It’s interesting to note that both the top LINPACK and HPCG scores are lower than in previous competitions. LINPACK in particular looks like it has fallen off a cliff, down by 17 Tflop/s from the record of 56.51 Tflop/s set at SC18. It doesn’t look to me like the hardware is the issue; plenty of teams had enough gear to mount a serious challenge to the record.

Next up, we’ll take a deep dive into the HPC Challenge benchmark – it’s eight benchmarks in one, and its data should satisfy anyone’s quant desires. Stay tuned!

Dan Olds is an Industry Analyst at OrionX.net. An authority on technology trends and customer sentiment, Dan Olds is a frequently quoted expert in industry and business publications such as The Wall Street Journal, Bloomberg News, Computerworld, eWeek, CIO, and PCWorld. In addition to server, storage, and network technologies, Dan closely follows the Big Data, Cloud, and HPC markets. He writes the HPC Blog on The Register, co-hosts the popular Radio Free HPC podcast, and is the go-to person for the coverage and analysis of the supercomputing industry’s Student Cluster Challenge.