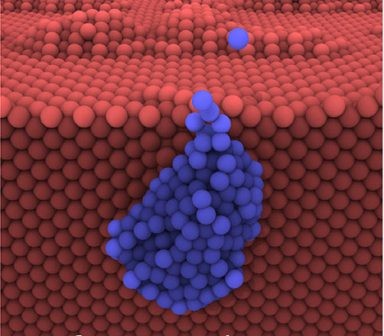

The Exascale Atomistics for Accuracy, Length, and Time (EXAALT) project within the US Department of Energy’s Exascale Computing Project (ECP) has taken a big step forward by delivering a five-fold performance advance in addressing its fusion energy materials simulations challenge problem.


The accomplishment is associated with a physical model called SNAP (Spectral Neighbor Analysis Potential), which EXAALT relies on because of its ability to accurately represent the real behavior of materials at an affordable cost, thus enabling the simulation of large systems of atoms for long time scales. SNAP was developed by EXAALT team members Aidan Thompson and Mitchell Wood of Sandia National Laboratories.

As of last year, the SNAP kernel’s efficiency, measured as achieved performance relative to the hardware’s theoretical peak, had been declining from one generation of computing architectures to the next, particularly on GPUs. Consequently, EXAALT made tackling this problem a central effort in fiscal year 2019.

EXAALT’s effort was strengthened by its selection for assistance under the National Energy Research Scientific Computing Center (NERSC) Exascale Science Applications Program (NESAP), an early-access program for users at NERSC.

“NERSC staff members work with us very closely to profile our code, benchmark it, understand where the bottlenecks are, and then help us port the code to get ready for the new NERSC machine, called Perlmutter [also known as NERSC-9], as a waypoint for upcoming exascale systems,” said Danny Perez, EXAALT principal investigator.

The system is named for Saul Perlmutter, an astrophysicist who shared the 2011 Nobel Prize in Physics for his contributions to research showing that the expansion of the universe is accelerating.

“The idea is to work with the software team to make sure that codes will be ready to hit the ground running when the new machine [planned for late 2020] comes on the floor,” Perez said. “So far that’s been extremely helpful because it lets our team members collaborate with the specialists at NERSC and also with the engineers from the vendors (for example, Cray and NVIDIA). That’s really gotten us a lot of very important expertise that enabled us to make very fast progress in terms of ensuring our code was as efficient as it could be.”

In addition to working with NERSC through NESAP, EXAALT joined with ECP’s Co-Design Center for Particle Applications (CoPA) to form a team of teams, composed of Thompson, Stan Moore (CoPA), and Rahulkumar Gayatri (NESAP), that over the last few months has reengineered the SNAP kernel from scratch.


“Instead of trying to do incremental tweaks to the existing code, they took a fresh look and said, OK, let’s see how we would write this from the ground up with no historical background,” Perez said. “So, they reorganized the code to expose more parallelism. More operations can be done simultaneously, and that’s very important when you run on hardware like GPUs where you have many processing units that you have to keep busy all the time.”

Exposing more parallelism required dramatically redesigning how the data was stored in memory because the SNAP kernel had historically required abundant memory. “We found ways to really decrease the amount of memory we needed, and that freed up some resources to play other tricks such as caching and reusing partial results many times,” Perez said. “That reengineering really let us use far fewer resources in terms of memory and turned out to be much more efficient running on very parallel hardware like we have on Summit [at the Oak Ridge Leadership Computing Facility at Oak Ridge National Laboratory] and will have on Perlmutter.”

During a hackathon organized by NESAP, Stan Moore of CoPA ported new improvements developed at NERSC into EXAALT’s main production code. These advances had first been made in a proxy code, a smaller version of the production code that isolates the main kernel and makes the development process much easier. The EXAALT team then applied lessons learned from the proxy code effort to the main production code.

After Moore ported the code enhancements, he benchmarked the result on Summit. “What he saw was a five-fold increase in terms of performance on a single node of Summit compared with the previous version of the code we had,” Perez said. “That’s very impressive. We’re excited about this because you typically don’t get this kind of increase on mature codes.”

With that outcome, the EXAALT team extrapolated what could be expected on the full Summit machine. “We think we’ll achieve something like a 90-fold increase in simulation rate compared with what we could do on pre-ECP machines; in this case, the pre-ECP machine was Mira at the Argonne Leadership Computing Facility at Argonne National Laboratory,” Perez said. “Such an increase is very exciting—it’s almost two orders of magnitude that we foresee being able to do on Summit. If we look into the future toward the exascale, then we should have at least another factor of five in possible speedup that comes just from the size of the machine. Summit is at roughly 200 petaflops, so by the time we go to the exascale, we should have another factor of five. That starts to be a transformative kind of change in our ability to do the science on these machines. At the exascale we should be at the level of 500 times more simulation throughput if the current trend maintains itself.”
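Perez’s exascale projection combines two factors quoted above: the projected ~90-fold gain of full Summit over Mira, and the ~5-fold jump in machine size from Summit (roughly 200 petaflops) to an exascale system (1,000 petaflops). The back-of-envelope check below multiplies them. It assumes, as Perez’s “if the current trend maintains itself” implies, that simulation throughput scales linearly with machine peak; the variable names are illustrative only.

```python
# Back-of-envelope check of the exascale projection quoted in the article.
# Inputs are the article's figures; linear scaling with machine peak is an assumption.
SUMMIT_SPEEDUP_VS_MIRA = 90   # projected full-Summit gain over Mira
SUMMIT_PEAK_PFLOPS = 200      # Summit is "roughly 200 petaflops"
EXASCALE_PFLOPS = 1_000       # 1 exaflop = 1,000 petaflops

# Factor gained purely from the larger machine (assumes linear scaling).
machine_size_factor = EXASCALE_PFLOPS / SUMMIT_PEAK_PFLOPS

# Combined projected gain over the pre-ECP baseline (Mira).
exascale_speedup = SUMMIT_SPEEDUP_VS_MIRA * machine_size_factor

print(f"machine-size factor: {machine_size_factor:.0f}x")
print(f"projected exascale gain over Mira: {exascale_speedup:.0f}x")
```

The product, about 450, is what Perez rounds to “the level of 500 times”; it is an order-of-magnitude extrapolation, not a measured result.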

Source: Scot Gibson at ECP