Data, the gold that today’s organizations spend significant resources to acquire, is ever-growing and underpins significant innovation in technologies for storing and accessing it. In this technology guide, insideHPC Special Research Report: Modernizing and Future-Proofing Your Storage Infrastructure, we’ll see how different applications and workflows will always have different data storage and access requirements, which makes it critical for planners to understand that a fully functioning organization needs a heterogeneous storage infrastructure. Most organizations require both High Performance Computing (HPC) technologies and other IT functionalities.

Challenges with HPC Today and How it is Changing

Systems and clusters that make up the computing and storage infrastructure for applications that require the highest performance available (at a given price or footprint) can be incredibly complex and costly. However, they do create a competitive advantage for companies dedicated to these technologies. For example, reducing the weight of a vehicle and improving airflow around it leads to a more efficient car, truck or airplane. This can be done only through complex simulations that require hundreds to thousands of CPUs. Other examples of using HPC technologies for a competitive advantage include energy exploration (finding oil or gas more quickly and reliably), using historical data to make better investment decisions and reduce risk, and decoding a genome in order to develop more targeted medicines (personalized medicine). In many cases today, the HPC infrastructure is isolated, both physically and organizationally, from the computer systems that are used for business intelligence or enterprise resource planning. Moving the entire HPC process into the overall workflow of a corporation can lead to greater data-sharing and faster decision-making.

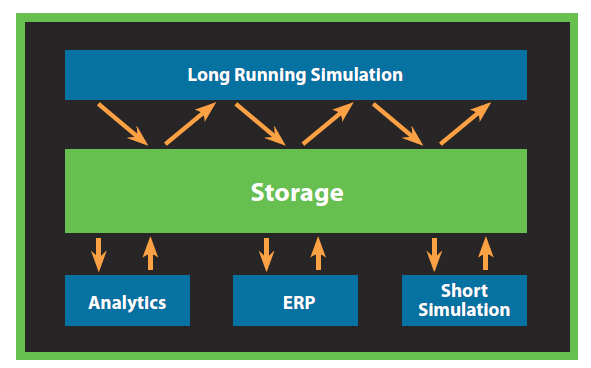

In many organizations today, with the HPC infrastructure separate from the mainstream IT infrastructure, a dedicated team of IT professionals is required to keep systems working, anticipate outages and respond accordingly. If HPC servers, storage and networking were moved into the main IT organization, cost savings could be realized, not just from dual use of the systems (HPC and traditional applications), but also from better use of personnel who can plan for or respond to a wider range of issues for the entire company.

A modern data center must be able to serve the needs of a wide range of users, both internal and possibly external to the organization. Flexibility is key to meeting a variety of required SLAs. For example, a business intelligence user may require that a query complete in just a few seconds, while a longer-running simulation need only finish within a few hours. The storage system must also be flexible enough to deliver data as needed for a range of applications. Different storage profiles must be available for different application use cases so that IT organizations can optimize performance to meet competing demands. Disaggregating the storage systems from the compute systems allows more application profiles to be accommodated, each optimized for its storage requirements.
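The idea of matching storage profiles to SLAs can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any particular product: the profile names, latency figures, cost figures and the `choose_profile` helper are all hypothetical, and the SLA is reduced to a single storage-latency bound for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageProfile:
    name: str
    typical_latency_ms: float  # illustrative figure, not a measurement
    relative_cost: float       # illustrative cost per terabyte, arbitrary units


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float      # storage latency the workload's SLA can tolerate


# Hypothetical profiles; a real deployment would define its own.
PROFILES = [
    StorageProfile("flash", typical_latency_ms=0.5, relative_cost=10.0),
    StorageProfile("hybrid", typical_latency_ms=5.0, relative_cost=4.0),
    StorageProfile("capacity-disk", typical_latency_ms=20.0, relative_cost=1.0),
]


def choose_profile(workload, profiles=PROFILES):
    """Pick the cheapest profile whose latency still satisfies the workload."""
    candidates = [
        p for p in profiles if p.typical_latency_ms <= workload.max_latency_ms
    ]
    if not candidates:
        raise ValueError(f"no storage profile meets the SLA of {workload.name}")
    return min(candidates, key=lambda p: p.relative_cost)


bi_query = Workload("business-intelligence query", max_latency_ms=1.0)
simulation = Workload("long-running simulation", max_latency_ms=50.0)

print(choose_profile(bi_query).name)    # flash
print(choose_profile(simulation).name)  # capacity-disk
```

With these made-up numbers, the interactive query lands on the fastest tier while the batch simulation is served from the cheapest one, which is the kind of trade-off a shared, disaggregated storage system is meant to make possible.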

Although the use of HPC technologies can result in better designs, faster decisions, new discoveries and cost savings in the long run, these goals must be aligned with corporate goals as well. By integrating the technologies needed for faster answers with IT priorities, more productive and business-relevant workflows become possible, and the technological and organizational silos can disappear. All of the data can then be used by all of the applications, not just by HPC applications. (See the figure for how multiple workloads should be able to access the storage system.)

Industry Trends

Simply put, data is exploding. This gold mine of knowledge is generated from a proliferation of IoT devices and tracked online behavior, among other sources. IDC, in conjunction with Seagate, predicts that there will be 175 zettabytes of data created in 2025, up from 33 zettabytes in 2018. This is just five years away.
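As a rough worked example of what those two figures imply, the sketch below computes the overall growth factor and the equivalent compound annual growth rate between 2018 and 2025. The constant-rate assumption and the function name `compound_annual_growth_rate` are illustrative only and are not part of the IDC forecast.

```python
def compound_annual_growth_rate(start: float, end: float, years: int) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must all be positive")
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text (zettabytes of data created per year).
ZB_2018 = 33.0
ZB_2025 = 175.0
YEARS = 2025 - 2018

growth_factor = ZB_2025 / ZB_2018
cagr = compound_annual_growth_rate(ZB_2018, ZB_2025, YEARS)

print(f"Growth factor 2018 -> 2025: {growth_factor:.1f}x")
print(f"Implied compound annual growth: {cagr:.1%}")
```

Under that simplifying assumption, the forecast corresponds to a bit more than a fivefold increase, or roughly 27% growth per year.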

So the question becomes, how will all of that data be stored? Even more importantly, how can the data be used for both business intelligence and HPC applications? If the data that a corporation houses or has access to must be stored in two different systems, one for HPC and one for mainstream IT, then costs are doubled without any gain in corporate knowledge or any reduction in the time needed to make critical decisions.

All workflows require access to corporate and/or open-source data. From researching cosmic events to determining the best combination of molecules for a new cancer-fighting drug, the data that is needed serves a number of important purposes. In addition to feeding the simulation, the raw data and the resulting data can be used for business analytics and other simulations. For example, as intermediate results are calculated from a long-running simulation, the generated data can be fed into artificial intelligence algorithms that could be used to steer the simulation. The data that is generated and used must be available close to the computational servers. Since the data may grow over time, scaling with high-density storage devices is crucial to the workflows that innovative organizations may employ.

When a fast and scalable storage system is used, HPC applications run faster and real-time analytical processing becomes possible, allowing organizations to monitor, understand and interact with streaming data. This workflow uses the data as it is generated, rather than just storing it away (and probably ignoring it) when it is not used in a timely manner.

Over the next few weeks we will explore these topics surrounding storage infrastructure:

- Introduction, Mixing Workloads, Components/Storage

- Challenges with HPC Today and How it is Changing, Industry Trends

- Industry Innovators, Storage Considerations

- Value, Seagate as a Trusted Partner, Next Steps

Download the complete insideHPC Special Research Report: Modernizing and Future-Proofing Your Storage Infrastructure, courtesy of Seagate.