Inspur released five new AI servers that fully support the new NVIDIA Ampere architecture. The new servers support 8 or 16 NVIDIA A100 Tensor Core GPUs, delivering AI computing performance of up to 40 PetaOPS and non-blocking GPU-to-GPU P2P bandwidth of up to 600 GB/s.

“Inspur was swift to unveil upgrades to its AI servers based on the NVIDIA Ampere architecture, as this gives global AI users a broad array of computing platforms optimized for various applications,” said Liu Jun, GM of AI and HPC at Inspur. “With this upgrade, Inspur offers the most comprehensive AI server portfolio in the industry, better tackling the computing challenges created by data surges and complex modeling. We expect that the upgrade will significantly boost AI technology innovation and applications.”

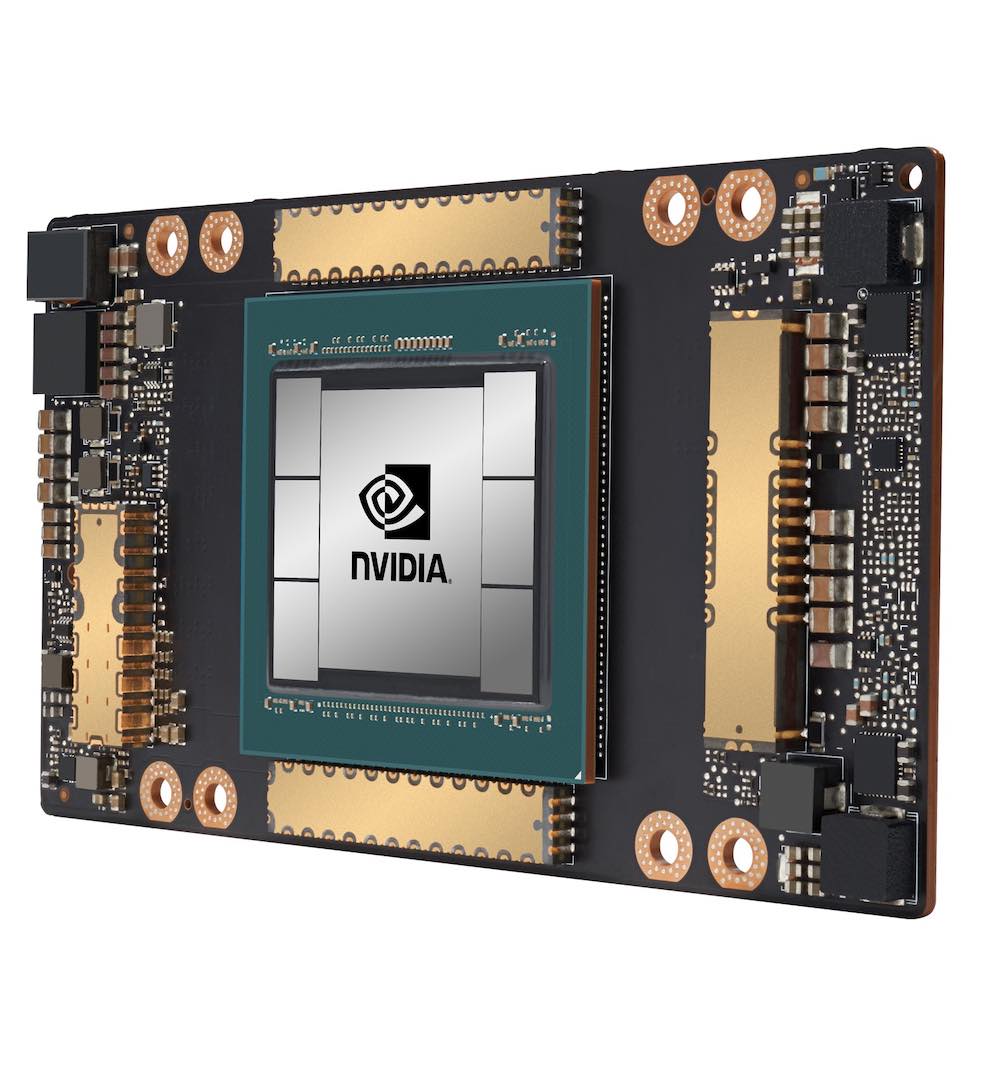

Each of the five servers addresses multiple AI computing scenarios and supports 8 or 16 of the latest NVIDIA A100 Tensor Core GPUs. The third-generation Tensor Cores in A100 GPUs are faster, more flexible and easier to use, enabling these servers to offer AI computing performance of up to 40 PetaOPS. With next-generation NVIDIA NVSwitch fabric technology, GPU-to-GPU P2P communication speed doubles to 600 GB/s. The higher computing performance and GPU-to-GPU bandwidth improve AI computing efficiency, enabling training on larger datasets and more complex models. In addition, each NVIDIA A100 GPU has 40GB of HBM2 memory with 1.6TB/s of memory bandwidth, 70% higher than the previous generation, to support training of larger deep learning models.

Inspur AI servers include:

- Inspur NF5488M5-D accommodates 8 A100 GPUs plus 2 mainstream CPUs in 4U space, delivering leading AI computing technology and mature ecosystem support to users.

- Inspur NF5488A5 supports 8 A100 GPUs connected by third-generation NVLink plus 2 PCIe Gen4 CPUs, providing users with high-bandwidth data pipeline communication.

- Inspur NF5488M6 is a highly optimized system for HPC and AI computing users that will integrate 8 A100 GPUs with NVSwitch technology plus 2 next-gen mainstream CPUs.

- Inspur NF5688M6 offers exceptional scalability: it supports 8 A100 GPUs, accommodates more than 10 PCIe Gen4 devices, and provides a balanced 1:1:1 ratio of GPUs, NVMe storage and NVIDIA Mellanox InfiniBand networking.

- Inspur NF5888M6 supports 16 A100 GPUs interconnected in a single system via NVSwitch. Its computing performance reaches up to 40 PetaOPS, making it the most powerful AI super server for users who pursue the ultimate performance.

Inspur will also upgrade its leading AI computing resource platform AIStation and automatic machine learning platform AutoML Suite to support NVIDIA A100, providing more flexible management of AI computing resources and powerful support for AI model and algorithm development.

Additionally, Inspur plans to add the new EGX A100 configuration to its edge server portfolio to deliver enhanced security and unprecedented performance at the edge. The EGX A100 converged accelerator combines an NVIDIA Mellanox SmartNIC with GPUs powered by the new NVIDIA Ampere architecture, so enterprises can run AI at the edge more securely.

“NVIDIA A100 Tensor Core GPUs offer customers unmatched acceleration at every scale for AI, data analytics and HPC,” said Paresh Kharya, Director of Product Management for Accelerated Computing at NVIDIA. “Inspur AI servers, powered by NVIDIA A100 GPUs, will help global users eliminate their computing bottlenecks and dramatically lower their cost, energy consumption, and data center space requirements.”

Inspur, a global leading AI server provider, offers a comprehensive AI product portfolio. It also fosters close partnerships with leading AI customers and empowers them to enhance performance in AI applications like voice, image, video, language and search.