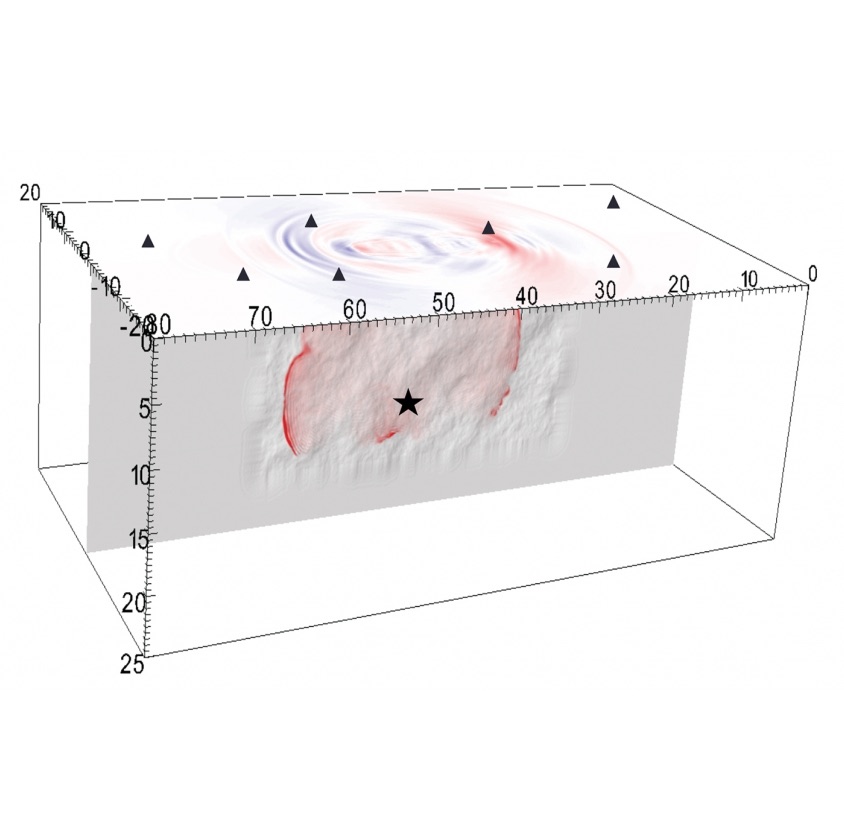

A snapshot of an earthquake computed by the dynamic rupture code Waveqlab3D, propagating along a vertically dipping strike-slip fault with superimposed fault roughness. The image shows the rupture 9.6 seconds after it initiated at the hypocenter (star). The corresponding ground-motion velocity at the surface is shown in red and blue. Image courtesy of Kyle Withers, U.S. Geological Survey/Southern California Earthquake Center.

With help from DOE supercomputers, a USC-led team expands models of the fault system beneath its feet, aiming to predict its outbursts.

The Southern California Earthquake Center (SCEC) headquarters where Christine Goulet works sits atop the belly of the beast. Its University of Southern California location means quakes could literally be triggered “under our feet,” says Goulet, an earthquake engineer and the center’s executive director for applied science.

It thus serves as “our natural laboratory,” she says. “We have the San Andreas Fault and other faults related to that system. What we can learn can be applied to other regions, but we really focus on this very active area.”

All along the 750-mile-long San Andreas, enormous tectonic forces deep underground inch the Pacific Plate northwest relative to the North American Plate, sliding horizontally along what’s known as a strike-slip fault zone that can lock in place for years before suddenly releasing pent-up energy, generating major earthquakes.

More than a thousand collaborating SCEC researchers, many at campuses elsewhere in the United States and abroad, are trying to learn more about why quakes happen and how best to understand them and their effects. The center’s hallmark tool is sophisticated computer modeling.

The SCEC is beginning another exploratory round of advanced simulations, tapping a grant of computing time from the Department of Energy INCITE (Innovative and Novel Computational Impact on Theory and Experiment) computing initiative. This renewed 2020 allocation, for which Goulet serves as principal investigator, gives a few dozen SCEC programmers and researchers access to two of the world’s most powerful supercomputers for a total of 800,000 node-hours to build “finer models of the Earth,” she says.

The center relies heavily on validated computational models. That means ensuring its simulations are consistent with measurements and other information collected after actual earthquakes, such as how far faults moved, where the ground ruptured or was otherwise altered, and how strongly the ground shook at different locations.

“The first step is learning more about the Earth’s structure by collecting a lot of data,” Goulet explains. Investigators use this knowledge of how previous earthquake waves propagated underground to create computer simulations that are consistent with both the laws of physics and the observations.


After that, the researchers can “use the models to predict specific events we haven’t seen yet,” she adds.

“The key is to start with a big-picture understanding of science and then refine it. It is like making sense of images you can only see from afar or that have very poor resolution.” To make sense of the details, the observer must get close and sharpen the focus. “First, understand the bigger picture, and then the higher resolution. It’s after that we create the computer models, and the higher the resolution of the model, the more physics we need to include in it.”

SCEC’s CyberShake software models lower-frequency earthquake vibrations to analyze and map hazards. By simulating local Earth structure, CyberShake can estimate the shaking from hundreds of thousands of possible earthquake scenarios at specific sites within its study area. Originally built for SCEC’s part of Southern California, CyberShake was extended to central California in 2017 and to the region north of San Francisco a year later.
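
Turning many scenario simulations into a hazard estimate is the job of probabilistic seismic hazard analysis (PSHA): each scenario contributes its annual rate of occurrence times the fraction of its simulated ground motions that exceed a given shaking level. The sketch below is a generic, minimal PSHA calculation, not CyberShake’s code; the function name `hazard_curve`, the rupture rates and the shaking values are all hypothetical, and it assumes earthquakes follow a Poisson process.

```python
import math

def hazard_curve(scenarios, levels, years=50.0):
    """Probability that each shaking level is exceeded at least once in `years`.

    scenarios: list of (annual_rate, simulated_values) pairs, where
    simulated_values are hypothetical ground-motion amplitudes at one site
    from different simulated variations of the same rupture.
    Assumes a Poisson process for earthquake occurrence.
    """
    curve = []
    for level in levels:
        # Annual rate at which `level` is exceeded, summed over all scenarios
        annual_rate = 0.0
        for rate, values in scenarios:
            fraction = sum(v > level for v in values) / len(values)
            annual_rate += rate * fraction
        # Poisson probability of one or more exceedances in `years`
        curve.append(1.0 - math.exp(-annual_rate * years))
    return curve

# Hypothetical example: two ruptures, four simulated variations each
scenarios = [(0.01, [0.1, 0.2, 0.3, 0.4]), (0.002, [0.3, 0.5, 0.6, 0.8])]
curve = hazard_curve(scenarios, [0.25, 0.55])
print(curve)
```

Plotting such probabilities against shaking level for every site gives the hazard curves from which maps are drawn; more scenarios simply add more terms to the sum.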

Nevertheless, the program has limitations. Because its model resolution is too coarse, it generally cannot address earthquake vibrations with frequencies higher than 1 cycle per second, or 1 hertz.
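
Why resolution caps frequency can be shown with a rule of thumb used for grid-based wave simulations: the grid must place several points across the shortest wavelength, which is the slowest wave speed divided by the highest frequency. The sketch below assumes a minimum wave speed of 500 m/s and 5 points per wavelength, both hypothetical values, and is not taken from any SCEC code.

```python
def grid_spacing(f_max_hz, v_min_m_s=500.0, points_per_wavelength=5.0):
    """Largest grid spacing (m) that resolves waves up to f_max_hz.

    Shortest wavelength = v_min / f_max; the solver is assumed to need
    `points_per_wavelength` grid points across it (hypothetical value).
    """
    shortest_wavelength = v_min_m_s / f_max_hz
    return shortest_wavelength / points_per_wavelength

def relative_cost(f_new_hz, f_old_hz):
    """Rough cost ratio for a 3D explicit time-stepping simulation.

    Grid points grow as frequency**3 (finer spacing in three dimensions),
    and the stability limit on the time step adds another factor of
    frequency, so cost grows roughly as frequency**4.
    """
    return (f_new_hz / f_old_hz) ** 4

print(grid_spacing(1.0), grid_spacing(20.0), relative_cost(20.0, 1.0))
```

Under these assumptions, going from 1 Hz to 20 Hz shrinks the grid spacing from 100 m to 5 m and multiplies the computational cost by roughly 160,000, which is why higher frequencies demand far larger machines.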

Lower-frequency vibrations, which CyberShake can model, are still valuable to study, however, because they affect buildings of 10 stories or more and large infrastructure such as road systems and bridges, Goulet notes. “But for earthquake engineering, we would like to go up to 20 hertz. What we’re trying to do is put more-realistic physics in our code.” That means models that can capture inelasticity, the nonlinear behavior that can permanently deform severely shaken materials. “The Earth may seem rigid to us, but during earthquake shaking, rocks deform.” Models also will “have to represent uneven material distribution in the crust. We don’t know the details of its composition at the scale we need for wave propagation simulation.”
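
The simplest picture of inelasticity is a material that behaves elastically until its stress reaches a yield limit, after which additional strain becomes permanent. The toy model below is a one-dimensional elastic-perfectly-plastic stress update; production codes use three-dimensional yield criteria, and the function name, shear modulus and yield stress here are hypothetical illustrations rather than SCEC’s implementation.

```python
def elastoplastic_response(strain_history, shear_modulus=30e9, yield_stress=5e6):
    """Stress and permanent (plastic) strain for a 1D elastic-perfectly-plastic
    material driven through a strain history.

    Parameter values (Pa) are hypothetical. Returns (stresses, plastic_strain).
    """
    plastic = 0.0
    stresses = []
    for strain in strain_history:
        # Elastic trial stress, assuming no new plastic strain
        trial = shear_modulus * (strain - plastic)
        if abs(trial) > yield_stress:
            # Cap stress at the yield limit; the excess strain becomes permanent
            stress = yield_stress if trial > 0 else -yield_stress
            plastic = strain - stress / shear_modulus
        else:
            stress = trial
        stresses.append(stress)
    return stresses, plastic

# Hypothetical cycle: strain up past yield, then back to zero
stresses, plastic = elastoplastic_response([0.0, 1e-4, 5e-4, 0.0])
print(stresses, plastic)
```

A purely elastic cycle returns to zero plastic strain, while a cycle that crosses the yield limit leaves residual strain behind: the permanent deformation that purely elastic wave models cannot represent.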

For their 2020 INCITE work, SCEC scientists and programmers will have access to 500,000 node-hours on Argonne National Laboratory’s Cray XC40 Theta supercomputer, delivering as much as 11.69 thousand trillion floating point operations per second, or 11.69 petaflops.

The team is using Theta “mostly for dynamic earthquake ruptures,” Goulet says. “That is using physics-based models to simulate and understand details of the earthquake as it ruptures along a fault, including how the rupture speed and the stress along the fault plane change.”
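
Dynamic rupture models need a friction law that says how a fault’s strength changes as it slips; that evolving strength, together with the surrounding rock, controls how stress and rupture speed change along the fault. One widely used textbook choice is linear slip-weakening friction, sketched below. The article does not say which friction law SCEC’s Theta runs use, and the function name and parameter values here are hypothetical.

```python
def slip_weakening_strength(slip, normal_stress, mu_s=0.6, mu_d=0.4, d_c=0.4):
    """Fault shear strength (Pa) under linear slip-weakening friction.

    The friction coefficient falls linearly from the static value mu_s to the
    dynamic value mu_d over the critical slip distance d_c (m), then stays at
    mu_d. All parameter values are hypothetical.
    """
    if slip < 0:
        raise ValueError("slip must be non-negative")
    weakening = min(slip, d_c) / d_c
    mu = mu_s - (mu_s - mu_d) * weakening
    return mu * normal_stress

# Strength of a fault point under a hypothetical 100 MPa normal stress
for s in (0.0, 0.2, 0.4, 1.0):
    print(s, slip_weakening_strength(s, 100e6))
```

In a simulation, a point on the fault starts slipping once the shear stress reaches its static strength; as slip grows, the strength drops, and the released stress helps drive the rupture front into neighboring parts of the fault.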

The group also will get 300,000 node-hours on Oak Ridge National Laboratory’s IBM AC922 Summit supercomputer, which has 9,216 POWER9 CPUs and 27,648 NVIDIA graphics processing units (GPUs). Summit’s computing power is rated at 200 petaflops, but for some reduced-precision calculations it can exceed an exaflop, or a billion billion floating point operations per second.
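
The figures quoted above can be checked with a little unit arithmetic. The sketch below uses only the article’s numbers, except for a hypothetical workload of 10^21 floating point operations used to compare the two machines; the ideal runtime is a lower bound, since real codes sustain only a fraction of peak.

```python
PETA = 1e15  # floating point operations per second in one petaflop
EXA = 1e18   # one exaflop: a billion billion operations per second

# Figures quoted in the article
THETA_PEAK = 11.69 * PETA
SUMMIT_PEAK = 200 * PETA
NODE_HOURS = {"Theta": 500_000, "Summit": 300_000}

def ideal_runtime_seconds(total_flops, peak_flops):
    """Lower bound on runtime if a code ran at the machine's peak rate."""
    return total_flops / peak_flops

total_node_hours = sum(NODE_HOURS.values())  # the 800,000 node-hour total
summit_in_exaflops = SUMMIT_PEAK / EXA       # rated peak expressed in exaflops

workload = 1e21  # hypothetical amount of work, in floating point operations
print(total_node_hours, summit_in_exaflops)
print(ideal_runtime_seconds(workload, THETA_PEAK), ideal_runtime_seconds(workload, SUMMIT_PEAK))
```

At peak, that hypothetical workload would take Summit 5,000 seconds and Theta about 85,500 seconds, nearly a day, which shows the gap between the two machines’ rated speeds.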

“We’re using Summit for a lot of the CyberShake computations,” Goulet says, as well as for modeling how seismic waves interact with inelasticity and topography. “We have codes that are based on GPUs, and they work well there.”

Source: DOE ASCR Discovery