Canadian startup Tenstorrent has launched its new Grayskull high-performance AI processor. According to the company, Grayskull provides a substantial performance and power-efficiency advantage over all existing inference solutions, and enables machine learning platforms that offer multiple levels of inference performance compared to all other inference solutions, in a cost-effective manner.

Founded by seasoned leaders and engineers behind successful, innovative and complex solutions at leading semiconductor companies, Tenstorrent has taken an approach that dynamically eliminates unnecessary computation, breaking the direct link between model size growth and compute and memory bandwidth requirements. Conditional computation lets both inference and training adapt to the exact input presented, for example by scaling NLP model computations to the exact length of the input text, or by dynamically pruning portions of the model based on input characteristics. Grayskull delivers significant baseline performance improvements on today’s most widely used machine learning models, such as BERT and ResNet-50, while opening up orders-of-magnitude gains through conditional execution, both on current models and on future ones optimized for this approach.
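To make the idea of input-dependent computation concrete, the sketch below counts multiply-accumulates (MACs) for the attention score product (Q times K transposed) when every sequence in a batch is padded to a fixed length versus when each sequence is processed at its true length, plus a toy gated-pruning case. It is a hypothetical back-of-envelope model, not Tenstorrent's software or hardware behavior; the function names, the gate threshold and the batch of lengths are illustrative assumptions, and only the hidden size of 768 reflects BERT-Base.

```python
# Hypothetical cost model for conditional computation; not a Tenstorrent API.

def attention_score_macs(seq_len: int, d_model: int) -> int:
    """MACs for Q @ K^T on one sequence: (seq_len x d) times (d x seq_len)."""
    return seq_len * seq_len * d_model


def padded_macs(lengths, max_len: int, d_model: int) -> int:
    # Static execution: every sequence is padded to max_len before compute.
    return sum(attention_score_macs(max_len, d_model) for _ in lengths)


def exact_macs(lengths, d_model: int) -> int:
    # Conditional execution: each sequence costs only its real length.
    return sum(attention_score_macs(n, d_model) for n in lengths)


def pruned_layer_macs(gate_scores, threshold: float, macs_per_block: int) -> int:
    # Dynamic pruning (assumed scheme): a block runs only if its
    # input-dependent gate score exceeds the threshold.
    return sum(macs_per_block for g in gate_scores if g > threshold)


if __name__ == "__main__":
    lengths = [12, 40, 128, 64]  # illustrative token counts
    d_model = 768                # BERT-Base hidden size
    dense = padded_macs(lengths, max_len=128, d_model=d_model)
    sparse = exact_macs(lengths, d_model)
    print(f"padded: {dense} MACs, exact-length: {sparse} MACs")
    print(f"work saved: {dense / sparse:.2f}x")
```

Because attention cost grows with the square of sequence length, short inputs padded to the maximum length waste most of their work; skipping that padding is one simple instance of the "adjust to the exact length of the text" behavior described above.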

For artificial intelligence to reach the next level, machines need to go beyond pattern recognition and into cause-and-effect learning. Such machine learning (ML) models require computing infrastructure that allows them to keep growing by orders of magnitude for years to come. ML computers can achieve this goal in two ways: by weakening the dependence between model size and raw compute power, through features like conditional execution and dynamic sparsity handling, and by enabling compute to scale to previously unmatched levels. Rapid changes in ML models further require flexibility and programmability without compromise. Tenstorrent addresses all of these needs with a fully programmable architecture that supports fine-grained conditional execution and an unprecedented ability to scale through tight integration of computation and networking.
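Dynamic sparsity handling can be illustrated with a block-sparse matrix multiply that checks each tile of the activation matrix at run time and issues no work for tiles that are entirely zero. This is a minimal pure-Python sketch under assumed parameters (a 2x2 tile size and the helper names are hypothetical); it shows the general technique, not how Grayskull's hardware or compiler actually detects or skips sparse data.

```python
# Hypothetical block-sparse matmul: skip all-zero tiles of `a` at run time.

def tile_is_zero(a, r0, c0, tile):
    """True if the tile of `a` starting at (r0, c0) contains only zeros."""
    rows = range(r0, min(r0 + tile, len(a)))
    cols = range(c0, min(c0 + tile, len(a[0])))
    return all(a[r][c] == 0 for r in rows for c in cols)


def block_sparse_matmul(a, b, tile=2):
    """Compute a @ b, skipping zero tiles of `a`; returns (result, tiles_skipped)."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0] * m for _ in range(n)]
    skipped = 0
    for r0 in range(0, n, tile):
        for c0 in range(0, k, tile):
            if tile_is_zero(a, r0, c0, tile):
                skipped += 1  # data-dependent decision: no MACs for this tile
                continue
            for r in range(r0, min(r0 + tile, n)):
                for kk in range(c0, min(c0 + tile, k)):
                    av = a[r][kk]
                    for c in range(m):
                        out[r][c] += av * b[kk][c]
    return out, skipped


def dense_matmul(a, b):
    """Reference dense product for checking the sparse version."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


if __name__ == "__main__":
    a = [[1, 2, 0, 0], [3, 4, 0, 0], [0, 0, 5, 6], [0, 0, 7, 8]]
    b = [[1, 0, 2, 0], [0, 1, 0, 2], [1, 1, 0, 0], [0, 0, 1, 1]]
    out, skipped = block_sparse_matmul(a, b)
    print(out, "tiles skipped:", skipped)
```

The result matches the dense product exactly; the savings come only from work that would have multiplied by zero, which is why the benefit depends on the data rather than on the model's nominal size.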

“The past several years in parallel computer architecture were all about increasing TOPS, TOPS per watt and TOPS per cost, and the ability to utilize provisioned TOPS well. As machine learning model complexity continues to explode, and the ability to improve TOPS-oriented metrics rapidly diminishes, the future of the growing computational demand naturally leads to stepping away from brute force computation and enabling solutions with more scale than ever before,” said Ljubisa Bajic, founder and CEO of Tenstorrent. “Tenstorrent was created with this future in mind. Today, we are introducing Grayskull, Tenstorrent’s first AI processor that is sampling to our lead partners, and will be ready for production in the fall of 2020.”

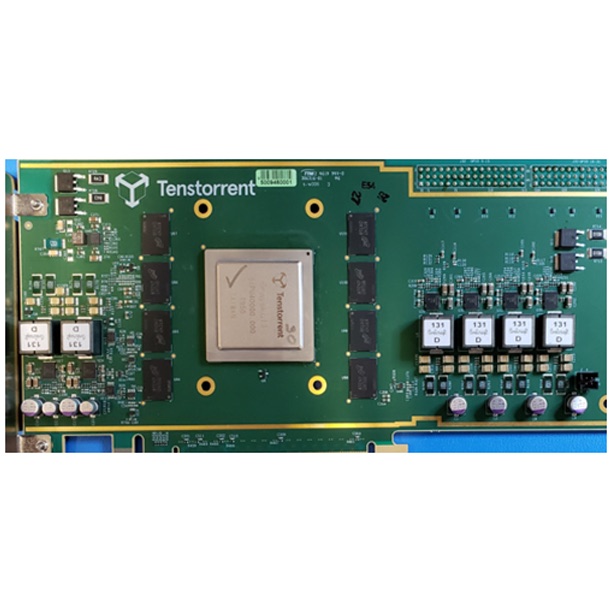

The Tenstorrent architecture features an array of proprietary Tensix cores, each comprising a high-utilization packet processor, a powerful, programmable SIMD and dense math computational block, and five efficient and flexible single-issue RISC cores. The array of Tensix cores is stitched together with a custom double 2D torus network-on-chip (NoC), which provides unprecedented multicast flexibility while minimizing the software burden of scheduling coarse-grained data transfers.
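One way to see why a double torus and multicast matter is to count hops on a simple model. The sketch below assumes the two tori are unidirectional rings running in opposite directions (one toward +x/+y, one toward -x/-y) over a hypothetical 12 x 10 grid chosen only because it yields 120 cores; the grid shape, routing rule and function names are illustrative assumptions, not Grayskull's published floorplan or router behavior.

```python
# Hypothetical hop-count model of a double 2D torus NoC.
GRID_W, GRID_H = 12, 10  # assumed layout giving 120 cores, not a real floorplan


def hops_noc0(src, dst, w=GRID_W, h=GRID_H):
    # Assumed NoC 0: unidirectional torus routing toward +x, then +y.
    return (dst[0] - src[0]) % w + (dst[1] - src[1]) % h


def hops_noc1(src, dst, w=GRID_W, h=GRID_H):
    # Assumed NoC 1: unidirectional torus routing toward -x, then -y.
    return (src[0] - dst[0]) % w + (src[1] - dst[1]) % h


def best_route(src, dst):
    """Pick whichever of the two tori reaches dst in fewer hops."""
    h0, h1 = hops_noc0(src, dst), hops_noc1(src, dst)
    return ("noc0", h0) if h0 <= h1 else ("noc1", h1)


def row_unicast_link_traversals(w=GRID_W):
    # One separate packet from core 0 to each other core in its row ring.
    return sum(range(1, w))


def row_multicast_link_traversals(w=GRID_W):
    # One packet travels the ring once, dropping a copy at every core.
    return w - 1


if __name__ == "__main__":
    print(best_route((0, 0), (11, 0)))  # neighbor "behind" src: NoC 1 is shorter
    print(best_route((0, 0), (3, 2)))   # destination "ahead": NoC 0 is shorter
    print("row unicast:", row_unicast_link_traversals(),
          "row multicast:", row_multicast_link_traversals())
```

In this model, a second torus running the other way caps the worst-case detour of a one-way ring, and multicast turns the roughly quadratic link usage of repeated unicasts into a single pass, which is the kind of traffic a compiler would otherwise have to schedule by hand.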

Grayskull integrates 120 Tensix cores with 120MB of local SRAM, enabling faster execution of AI workloads. The processor also provides eight channels of LPDDR4, supporting up to 16GB of external DRAM, and 16 lanes of PCIe Gen 4. At the chip thermal design power set-point required for a 75W bus-powered PCIe card, Grayskull achieves 368 TOPS and, with conditional execution, up to 23,345 sentences/second on BERT-Base for the SQuAD 1.1 data set, which the company says is 26 times the performance of today’s leading solution.
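The quoted specifications support a few quick derived figures. The sketch below only divides the numbers stated above; it assumes SRAM is split evenly across cores and DRAM evenly across channels, uses the 75 W card budget (not measured chip power) for the per-watt figure, and assumes the 26X claim refers to the same BERT-Base throughput metric, so the results are rough illustrations rather than published specifications.

```python
# Back-of-envelope figures derived only from the numbers quoted above.
TENSIX_CORES = 120
SRAM_MB = 120
LPDDR4_CHANNELS = 8
DRAM_GB = 16
PEAK_TOPS = 368
CARD_POWER_W = 75               # card budget; chip power is at or below this
BERT_SENTENCES_PER_S = 23_345
CLAIMED_SPEEDUP = 26

# Assumes an even split of on-chip SRAM across Tensix cores.
sram_per_core_mb = SRAM_MB / TENSIX_CORES
# Assumes DRAM capacity is spread evenly over the LPDDR4 channels.
dram_per_channel_gb = DRAM_GB / LPDDR4_CHANNELS
# Card-level efficiency: peak TOPS divided by the 75 W card budget.
tops_per_card_watt = PEAK_TOPS / CARD_POWER_W
# Throughput the "leading solution" would need if 26X applies to this metric.
implied_baseline = BERT_SENTENCES_PER_S / CLAIMED_SPEEDUP

if __name__ == "__main__":
    print(f"SRAM per core:     {sram_per_core_mb:.1f} MB")
    print(f"DRAM per channel:  {dram_per_channel_gb:.1f} GB")
    print(f"TOPS per card watt: {tops_per_card_watt:.2f}")
    print(f"Implied baseline:  {implied_baseline:.0f} sentences/s")
```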

Grayskull, which is focused on the inference market, is already available in sample quantities. Target markets include data centers, public and private cloud servers, on-premises servers, edge servers, automotive and many others.