Not long ago, building a converged environment spanning two domains, High Performance Computing (HPC) and Artificial Intelligence (AI), meant spending a lot of money on proprietary systems and software in the hope that they would scale as business demands changed.

In this insideHPC technology guide, “insideHPC Guide to QCT Platform-on-Demand Designed for Converged Workloads,” we’ll see that by relying on open source software and the latest high-performance, low-cost system architectures, it is possible to build scalable hybrid on-premises solutions that satisfy the needs of converged HPC/AI workloads while remaining robust and easy to manage.

High Performance Computing with QCT POD

Users and administrators migrating from traditional HPC systems will find that QCT POD presents HPC developers with a familiar environment that they can use easily and efficiently, both on-premises and remotely through web-based tools. It provides the popular compilers, including GCC, Intel® Parallel Studio XE, and the NVIDIA® HPC-SDK™ (for Fortran, C, and C++), and the math libraries BLAS, BLACS, and LAPACK, all optimized for high performance. It also includes Environment Modules for easy runtime setup and job schedulers such as Slurm for advanced workload management.

The QCT POD also supports Kubernetes and Singularity, permitting users to run HPC jobs the modern way: in containers managed and monitored with browser-based tools.
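
As a rough illustration of what running an HPC program in a container involves, the sketch below assembles a `singularity exec` command line in Python. The image name `wrf.sif`, the bind path, and the helper function are hypothetical and are not taken from the QCT guide; only the general `singularity exec [--bind src:dest] <image> <command>` form is assumed.

```python
import shlex


def singularity_exec_command(image, program, args=(), binds=()):
    """Build the argument list for running a program inside a Singularity image.

    `image`, `program`, and `binds` are illustrative placeholders; the real
    values depend on how a given site packages its workloads.
    """
    argv = ["singularity", "exec"]
    for bind in binds:
        # Each bind maps a host directory into the container as "host:container".
        argv += ["--bind", bind]
    argv.append(image)
    argv.append(program)
    argv.extend(args)
    return argv


# Hypothetical example: run a WRF executable from a container image.
cmd = singularity_exec_command(
    "wrf.sif", "./wrf.exe", binds=["/scratch/run01:/run"]
)
print(shlex.join(cmd))
```

Returning a list rather than a single string keeps the command safe to pass to `subprocess.run` without shell quoting problems.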

HPC Example: WRF Model

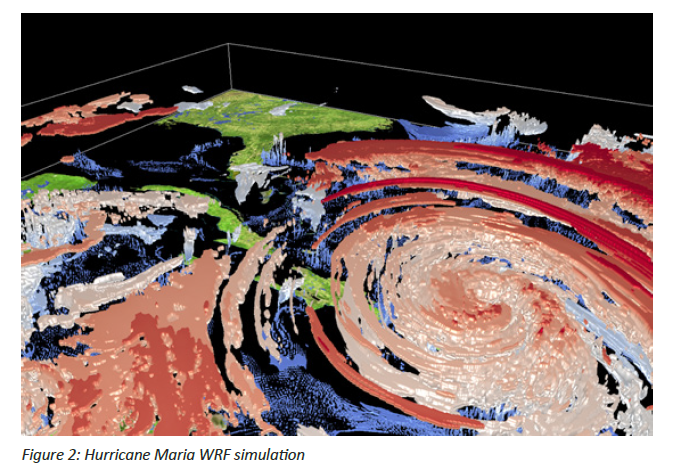

To see how the QCT POD simplifies and expedites deployment of a popular HPC workload, let’s look at what it takes to get the Weather Research and Forecasting (WRF) model up and running.

WRF is open source and written mostly in Fortran and C. As such, developers can use their preferred high-performance compilers, including the NVIDIA HPC SDK, to build WRF and the libraries it requires.

Because WRF is open source, developers can adapt other open source codes and insert them into the WRF core to enhance performance. But this can cause real difficulties by introducing complicated dependency and compatibility issues with code that comes from other environments and uses different compilers. Resolving these issues can produce a patchwork of special runtime environments that becomes ever more difficult to manage.

By design, QCT provisions the POD with pre-compiled, optimized runtime libraries for core workloads, and gives developers recipes for rebuilding those libraries as needed. Users can employ QCT’s Environment Modules to load the specific library versions and the corresponding workload settings they need with just a few quick commands. This lets them build their HPC applications quickly and start running benchmark tests as soon as they get access to the QCT HPC POD.
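
To make the "few quick commands" concrete, here is a minimal sketch that renders a Slurm batch script which loads modules and then launches a benchmark run. The module names and versions, the job name, and the `render_batch_script` helper are hypothetical placeholders, not the modules QCT actually ships; the `module purge`, `module load`, `#SBATCH`, and `srun` forms are the standard Environment Modules and Slurm ones.

```python
def render_batch_script(job_name, modules, command,
                        nodes=1, tasks_per_node=1, walltime="01:00:00"):
    """Return the text of a Slurm batch script that loads modules, then runs a command.

    All argument values are supplied by the caller; nothing here is specific
    to QCT POD.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "",
        # Start from a clean environment so only the listed modules are active.
        "module purge",
    ]
    lines += [f"module load {name}" for name in modules]
    lines += ["", f"srun {command}"]
    return "\n".join(lines) + "\n"


# Hypothetical module names and versions, for illustration only.
script = render_batch_script(
    job_name="wrf-bench",
    modules=["gcc/12.2.0", "openmpi/4.1.5", "netcdf-fortran/4.6.0"],
    command="./wrf.exe",
    nodes=2,
    tasks_per_node=4,
)
print(script)
```

The resulting text could be saved to a file and submitted with `sbatch`; on a real system, `module avail` lists the module names that are actually installed.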

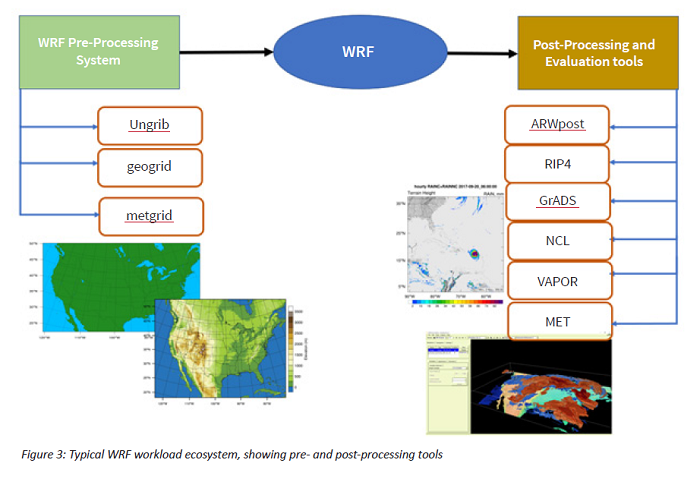

This was very much the case with the WRF model. In particular, WRF simulations use pre- and post-processing tools to prepare the input for the model and to analyze and visualize the simulation output. Figure 3 shows the entire WRF workflow ecosystem.
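
One way to reason about such a workflow is as a dependency graph of stages. The sketch below is an assumption-laden illustration, not a reproduction of Figure 3: it uses the commonly documented WRF preprocessing programs (`geogrid`, `ungrib`, and `metgrid` from the WRF Preprocessing System, followed by `real` and `wrf`) plus a generic `postprocess` placeholder, and orders them with Python's standard `graphlib` module.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages that must finish before it can run.
# "postprocess" is a generic placeholder for analysis and visualization tools.
WRF_STAGES = {
    "geogrid": set(),
    "ungrib": set(),
    "metgrid": {"geogrid", "ungrib"},
    "real": {"metgrid"},
    "wrf": {"real"},
    "postprocess": {"wrf"},
}


def stage_order(stages):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(stages).static_order())


order = stage_order(WRF_STAGES)
print(" -> ".join(order))
```

The two independent stages (`geogrid` and `ungrib`) may appear in either order; the sort only guarantees that each stage comes after everything it depends on.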

Each of the pre- and post-processing tools has its own unique runtime library dependencies that need to be built, tested, and deployed. Migrating the entire WRF ecosystem to a new HPC system and getting it running typically takes weeks to months. By using the pre-configured software provided by QCT, developers can get the WRF ecosystem ready to accept input and generate output for analysis more quickly than on traditional HPC systems.

By providing optimized, pre-compiled runtime libraries with POD, QCT eliminates most of the pain developers encounter when preparing a runtime environment for HPC applications on a new HPC system. Users can get their first test runs going with minimal effort and little wasted time.

Over the next few weeks we’ll explore QCT’s Platform-on-Demand designed for converged workloads:

- Introduction, What converged HPC/AI workloads look like

- The converged Platform-on-Demand solution from QCT

- High Performance Computing with QCT POD

- Deep Learning with QCT POD, Summary, About QCT

Download the complete insideHPC Guide to QCT Platform-on-Demand Designed for Converged Workloads courtesy of QCT.