In this insideHPC Guide, “Things to Know When Assessing, Piloting, and Deploying GPUs,”our friends over at WEKA suggest that when organizations decide to move existing applications or new applications to a GPU-influenced system there are many items to consider, such as assessing the new environment’s required components, implementing a pilot program to learn about the system’s future performance, and considering eventual scaling to production levels.

In this insideHPC Guide, “Things to Know When Assessing, Piloting, and Deploying GPUs,”our friends over at WEKA suggest that when organizations decide to move existing applications or new applications to a GPU-influenced system there are many items to consider, such as assessing the new environment’s required components, implementing a pilot program to learn about the system’s future performance, and considering eventual scaling to production levels.

Simply starting to assemble hardware systems and layering software solutions on top of them without a deep understanding of the desired outcomes or potential bottlenecks may lead to disappointing results. Planning and experimentation are essential parts of implementing a GPU-based system, a process that is ongoing and requires continual evaluation and tuning.

Introduction

Traditionally, graphical processing units, or GPUs, were used in processing 3D content/data in gaming. Today, GPUs have become a key technology for applications in manufacturing, life sciences, and financial modeling. GPUs speed up simulations due to their fast parallel processing capabilities and are now being used extensively in Artificial Intelligence (AI) and Machine Learning (ML) applications. Creating a large-scale environment that utilizes GPUs takes planning, piloting, implementing at scale, and, finally, evaluation.

For an effective production environment using GPUs, organizations need a defined process that contains the following activities:

- Assessment

- Pilot Program

- Scaling implementation for anticipated workloads

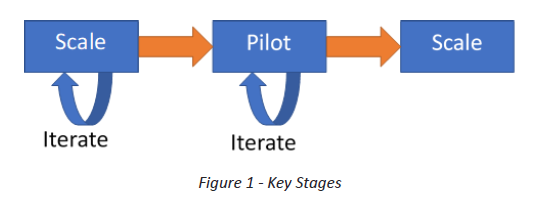

Within the assessment and pilot activities, project leaders should expect to complete multiple iterations to achieve a clear understanding or tuning of the components. Figure 1 represents the process and shows that iterating on these steps is key to a successful implementation at scale.

Assessment

A key to creating a large-scale system that responds to user demands starts with understanding the challenges that a company or organization is trying to solve. Depending on the industry and algorithms used, a number of areas need to be understood when planning and deciding if a GPU-based installation is right for the workloads.

- What is the expected result from a new system design? Customers need to understand what the business needs and wants. Better decisions? More accurate voice recognition? Assistance with guiding software simulations? Before designing a working system, the goals must be understood and be attainable before starting a new project.

- What are the fundamental algorithms that will be used and the nature of the software? If the software chosen is provided by a third-party, and the software has been optimized to use GPUs, then the project and implementation can proceed more quickly. However, suppose the implementation will use new algorithms that require software development. In that case, the implementation team needs a deep understanding of the software requirements to determine whether or not GPUs will shorten the application’s time.

- How much data will be used? Even if using GPUs might lower an application’s running time, GPUs may not be the correct solution if the quantity of data is low. When determining the amount of data that will be used when setting up a new software system to analyze data, looking back at the amount of data collected in the past day, week, month, or year is extremely useful. Just as important is looking at what types of data are helpful for the task at hand. Just because 1 TB of data per day has been collected does not mean that a new system must analyze all of this data. Specific tools may be needed to separate the data being used compared to the data being captured. More data does not equate to better data.

- A key to any system is understanding the type of data. Where it is coming from, and what insights might be contained in the data. The metadata for any system needs to be examined to understand various properties of the data. Implementing software that can open up the metadata is not just a one- time effort—comparing the metadata over a period of time, whether hours or weeks, will give an insight into not only data growth but trends in data types, complexity, and relevance to the study at hand.

- Understanding the type of the data to be used is critical to developing an optimized system. For example, when analyzing images or videos, one would conclude that GPUs would be the best choice, while trying to make sense of IoT information may be better served with CPUs. A lot of this will depend on a combination of the amount of data that is anticipated to be of value and, of course, the type of data.

- Besides the CPU and GPU choices, other infrastructure components need to be understood at the time of planning. Network bandwidth and storage types are integral parts of a smooth-running system that must be mapped out early to identify which of these pieces must be upgraded or purchased new.

- The eventual sizing of the infrastructure, CPUs, accelerators, networking, and storage capacity should support the data volumes, the SLAs’ latencies, and the budget needed. A sizing exercise starts at the result and then works backward to the required hardware and framework. All aspects should be understood, including network latencies and bandwidths and the storage system’s ability to deliver data quickly to where it is needed. An example of incorrect sizing would be connecting a GPU with 12GB/s when inferencing but using remote/local storage that can do 2-6GB/s only.

- When assessing performance, evaluate pipeline bottlenecks and not just performance bottlenecks. For example, how do the GPU servers share the data? Do they copy it to local drives? This procedure takes time and is considered a pipeline bottleneck, not a strict performance bottleneck.

- When planning for performance, keep in mind that the required performance on Day 1 is not the same requirement for Day 2, Day 30, Day 100, and so on. Set several performance requirements (e.g., more GPUs or more data scientists) because the requirements at each stage will be different. Soliciting expert input helps the implementation team to understand the current situation and the future state in no uncertain terms. When planning for a new or upgraded system to analyze or learn from existing processes, getting the help of specialists who have experience in the domain field should be standard practice. An objective look at the amount of data in the system, the possible algorithms, and various options will ultimately lead to a better implementation at scale.

Over the next few weeks we’ll explore Weka’s new insideHPC Guide:

- Introduction, Assessment

- Pilot Program

- Scaling and Implementation, Related Considerations – Storage, Summary

Download the complete Things to Know When Assessing, Piloting, and Deploying GPUs courtesy of Weka.