This article by Kimberly Mann Bruch, senior writer at the San Diego Supercomputer Center, examines the role of HPC systems at SDSC and at the Pittsburgh Supercomputing Center in the development of artificial neural networks by MIT researchers for computational chemistry.

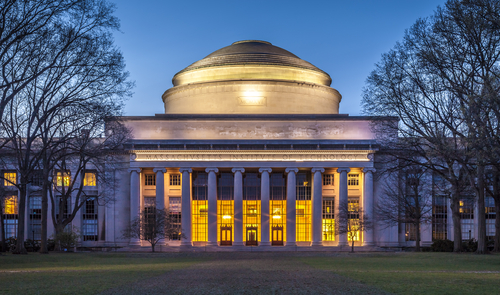

Even though computational chemistry represents a challenging arena for machine learning, a team of researchers from the Massachusetts Institute of Technology (MIT) may have made it easier. Using Comet at the San Diego Supercomputer Center at UC San Diego and Bridges at the Pittsburgh Supercomputing Center, they succeeded in developing an artificial intelligence (AI) approach to detect electron correlation – the interaction between a system’s electrons – which is vital but expensive to calculate in quantum chemistry.

AI-based methods, however, show promise in making electron correlation detection much more tractable while improving the throughput of such computations, that is, the number of materials that can be analyzed. With Comet and Bridges, Professor Heather Kulik and her MIT colleagues developed several unique artificial neural network models, which are published in the Journal of Chemical Theory and Computation and the Journal of Physical Chemistry Letters. These simulations could help advance the development of an array of new materials through predictive modeling.

“In these two papers, we first developed supervised models to predict high-quality, high-cost diagnostics of strong correlation at low computational cost,” said Kulik, a computational chemist and chemical engineering professor at MIT. “We overcame the fact that diagnostics seldom agree to build a consensus-based classifier model, so we used various low- and predicted high-cost as inputs to the virtual adversarial training of an artificial neural network model in what we believe to be the first semi-supervised learning model applied to computational chemistry.”
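The consensus idea Kulik describes can be pictured with a minimal Python sketch. This is an illustration only, not the team's MultirefPredict code: the diagnostic names, cutoff values, and agreement threshold below are hypothetical. Each diagnostic casts a vote on whether a molecule is strongly correlated; molecules on which the diagnostics largely agree receive a label, and the rest stay unlabeled, which is the kind of data a semi-supervised model can still learn from.

```python
from typing import Dict, Optional

# Hypothetical diagnostic names and cutoffs, chosen only for illustration.
CUTOFFS: Dict[str, float] = {"diag_a": 0.5, "diag_b": 0.1, "diag_c": 0.3}


def consensus_label(
    values: Dict[str, float],
    cutoffs: Dict[str, float] = CUTOFFS,
    min_agreement: float = 0.8,
) -> Optional[int]:
    """Return 1 (strongly correlated), 0 (not), or None (no consensus)."""
    # Each diagnostic votes 1 if its value exceeds its cutoff, else 0.
    votes = [1 if values[name] > cutoffs[name] else 0 for name in cutoffs]
    fraction_positive = sum(votes) / len(votes)

    if fraction_positive >= min_agreement:
        return 1
    if fraction_positive <= 1 - min_agreement:
        return 0
    # Diagnostics disagree: leave the molecule unlabeled.
    return None


if __name__ == "__main__":
    molecule = {"diag_a": 0.9, "diag_b": 0.05, "diag_c": 0.6}
    print(consensus_label(molecule))
```

In this sketch, unlabeled molecules are not discarded; they would be passed, together with the labeled ones, to whatever semi-supervised training step follows.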

The simulations showed how certain strong correlations could be present in some, but not other, molecules typically explored during high-throughput screening of materials. This allowed the researchers to identify when more affordable computational models would be predictive.

Using machine learning, the models were able to make the predictions for strong correlation in the materials at a much lower computational cost than conventional methods, potentially accelerating the search for materials in a range of applications, such as finding drug-like compounds for treating diseases or new materials for improving batteries.

“This type of machine learning model is uniquely suited to this multi-stage approach because it is robust and stands up to noisy/erroneous inputs,” further explained Fang Liu, an NSF Molecular Sciences Software Institute fellow who was a co-author on both papers. “We used a great deal of theoretical chemistry codes to conduct our studies and that would not have been possible without Comet and Bridges.”

The team’s workflow, MultirefPredict, interfaced with at least three electronic structure codes and used both central processing units (CPUs) and graphics processing units (GPUs) on Comet and Bridges.

Kulik and her team were allocated time on the supercomputers via the National Science Foundation’s (NSF) Extreme Science and Engineering Discovery Environment (XSEDE). “Due to our complex requirements, having resources where we could set up workflows to run in an interoperable manner with different codes was very helpful for us,” said Kulik, who also teaches a course on XSEDE resources. “Using those supercomputers firsthand allowed me to think about ways I can teach students who may just be learning computational chemistry to complement their experimental research for ways that they can use not only now but in the future.”

This research was primarily supported by the U.S. Department of Energy (DE-SC0018096). Access to Comet and Bridges was provided by XSEDE (TG-CHE140073).

About SDSC

The San Diego Supercomputer Center (SDSC) is a leader and pioneer in high-performance and data-intensive computing, providing cyberinfrastructure resources, services, and expertise to the national research community, academia, and industry. Located on the UC San Diego campus, SDSC supports hundreds of multidisciplinary programs spanning a wide variety of domains, from astrophysics and earth sciences to disease research and drug discovery. In late 2020 SDSC launched its newest National Science Foundation-funded supercomputer, Expanse. With more than twice the performance of Comet, Expanse supports SDSC’s theme of ‘Computing without Boundaries’ with a data-centric architecture, public cloud integration and state-of-the-art graphics processing units (GPUs) for incorporating experimental facilities and edge computing.

About PSC

The Pittsburgh Supercomputing Center (PSC) is a joint computational research center of Carnegie Mellon University and the University of Pittsburgh. Established in 1986, PSC is supported by several federal agencies, the Commonwealth of Pennsylvania and private industry and is a leading partner in XSEDE (Extreme Science and Engineering Discovery Environment), the National Science Foundation cyberinfrastructure program. PSC provides university, government and industrial researchers with access to several of the most powerful systems for high-performance computing, communications and data storage available to scientists and engineers nationwide for unclassified research. PSC advances the state of the art in high-performance computing, communications and data analytics and offers a flexible environment for solving the largest and most challenging problems in computational science.