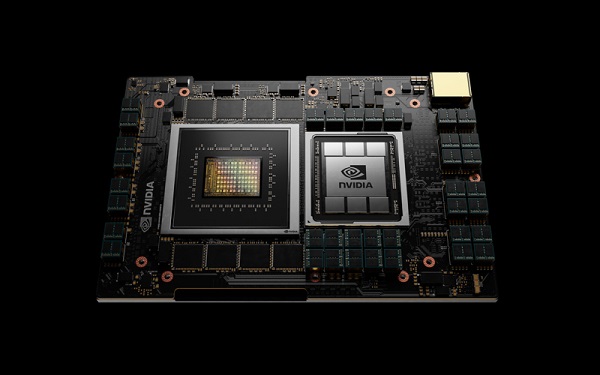

Nvidia’s “Grace” Arm-based CPU

Nvidia announced a slew of new products today on the first day of its annual GTC conference, including a first-time foray into data center CPU that has pushed Intel stock prices down and Nvidia shares up.

Nvidia’s new “Grace” CPU, the company’s first CPU for the data center, is an Arm-based chip that the company said is designed for what the company called “Giant AI” and HPC workloads. Its launch comes on the heels of high end CPUs announced in recent weeks by AMD and by Intel. As of this writing, Intel stock is down nearly 4 percent on the news while Nvidia stock has enjoyed almost a 4 percent rise.

While Nvidia’s move into CPUs may be a surprise, it isn’t surprising that the company’s CPU technology is Arm-based. In September, Nvidia announced a $40 billion deal to acquire UK-based Arm Holdings from Softbank, which would expand Nvidia’s position in the chip sector from its stronghold in GPUs by giving it ownership of Arm CPU intellectual property. The acquisition currently is going through extensive regulatory approvals in the U.S., China and Europe, a process that will involve objections from Google, Microsoft and Qualcomm.

The result of more than 10,000 engineering years of work, according to Nvidia, Grace is a specialized processor targeting workloads such as training natural language processing models with more than 1 trillion parameters. “When tightly coupled with Nvidia GPUs, a Grace CPU-based system will deliver 10x faster performance than today’s Nvidia DGX-based systems, which run on x86 CPUs. While the vast majority of data centers are expected to be served by existing CPUs, Grace — named for Grace Hopper, the U.S. computer-programming pioneer — will serve a niche segment of computing,” the company said.

Grace will be supported by the Nvidia HPC software development kit and by CUDA and CUDA-X libraries. Availability is expected in the beginning of 2023.

The Swiss National Supercomputing Centre (CSCS) and Los Alamos National Laboratory both plan to bring Grace-powered Hewlett Packard Enterprise supercomputers online in 2023.

“With an innovative balance of memory bandwidth and capacity, this next-generation system will shape our institution’s computing strategy,” said Thom Mason, director of the Los Alamos National Laboratory. “Thanks to NVIDIA’s new Grace CPU, we’ll be able to deliver advanced scientific research using high fidelity 3D simulations and analytics with datasets that are larger than previously possible.”

Nvidia BlueFiueld-3

The company also launched BlueField-3, which Nvidia said is the first 400Gb/second DPU data processing unit to power software-defined networking, storage and cybersecurity for data centers.

Nvidia said one BlueField-3 DPU delivers the equivalent data center services of up to 300 CPU cores, freeing up CPU cycles to run business-critical applications.

“Modern hyperscale clouds are driving a fundamental new architecture for data centers,” said Jensen Huang, founder and CEO of Nvidia. “A new type of processor, designed to process data center infrastructure software, is needed to offload and accelerate the tremendous compute load of virtualization, networking, storage, security and other cloud-native AI services.”

Nvidia said the DPU features 10x the accelerated compute power of the previous generation, with 16x Arm A78 cores and 4x acceleration for cryptography. It supports fifth-generation PCIe and has time-synchronized data center acceleration. BlueField-3 also acts as the monitoring, or telemetry, agent for NVIDIA Morpheus, an AI-enabled, cloud-native cybersecurity platform, also announced today.

BlueField-3 leverages advantage of Nvidia DOCA, which the company calls a data-center-on-a-chip architecture, an open software platform for building software-defined, hardware-accelerated networking, storage, security and management applications running on BlueField DPUs. It includes orchestration tools to provision and monitor thousands of DPUs; as well as libraries, APIs and applications, such as deep packet inspection and load balancing.

Nvidia DGX Superpod

Nvidia said server manufacturers Dell Technologies, Inspur, Lenovo and Supermicro are integrating BlueField DPUs into their systems and that cloud services providers are using BlueField DPUs, including Baidu, JD.com and UCloud. The BlueField ecosystem is also being supported by hybrid cloud platform partners Canonical, Red Hat and VMware; cybersecurity companies such as Fortinet, Guardicore and storage providers DDN , NetApp and WekaIO; and edge platform providers Cloudflare, F5 and Juniper Networks.

BlueField-3 is expected to sample in the first quarter of 2022, according to the company.

Nvidia also unveiled what is calls the first cloud-native, multi-tenant AI supercomputer, the DGX SuperPOD, with BlueField-2 DPUs. In conjunction with this announced, the company also has launched Nvidia Base Command, enabling multiple users and IT teams to access and operate DGX SuperPOD infrastructures. Base Command is designed to coordinate AI training and operations on DGX SuperPOD infrastructures to support teams of data scientists and developers in multiple locations.

The new DGX model has four Nvidia A100 80GB GPUs for a 3x speed-up compared to the previous DGX. The company said up to 28 data scientists can share a DGX Station A100 simultaneously.

It will be available in Q2, as will Base Command. Subscriptions for DGX Station A100 start at a list price of $9,000 per month.

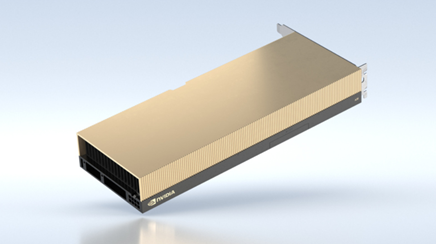

Nvidia A30 Tensor Core GPU

On the GPU front, the company announced the Nvidia A30 Tensor Core GPU, described as a “versatile mainstream compute GPU for AI inference and mainstream enterprise workloads.”

Nvidia A30 GPU

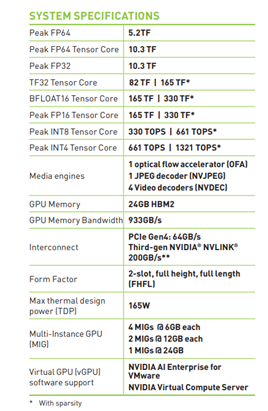

Powered by Nvidia Ampere architecture Tensor Core technology, it supports a range of math precisions and is built for AI inference at scale. “The same compute resource can rapidly re-train AI models with TF32, as well as accelerate high-performance computing (HPC) applications using FP64 Tensor Cores. Multi-Instance GPU (MIG) and FP64 Tensor Cores combine with fast 933 gigabytes per second (GB/s) of memory bandwidth in a low 165W power envelope, all running on a PCIe card optimal for mainstream servers,” the company said. “Whether using MIG to partition an A30 GPU into smaller instances or Nvidia NVLink to connect multiple GPUs to speed larger workloads, A30 can readily handle diverse-sized acceleration needs, from the smallest job to the biggest multi-node workload.”

For throughput, A30 delivers 165 TFLOPS for deep learning, which the company said is 20X faster for training and more than 5X faster inference compared to Nvidia T4 Tensor Core GPU. For HPC, A30 delivers 10.3 TFLOPS, nearly 30 percent more than NVIDIA V100 Tensor Core GPU. NVLink in A30 delivers 2X throughput compared to the previous generation. Two A30 PCIe GPUs can be connected via an NVLink Bridge to deliver 330 TFLOPs for deep learning, Nvidia said. And with up to 24GB of high bandwidth memory (HBM2), A30 delivers 933GB/s of GPU memory bandwidth, for AI and HPC workloads in mainstream servers.