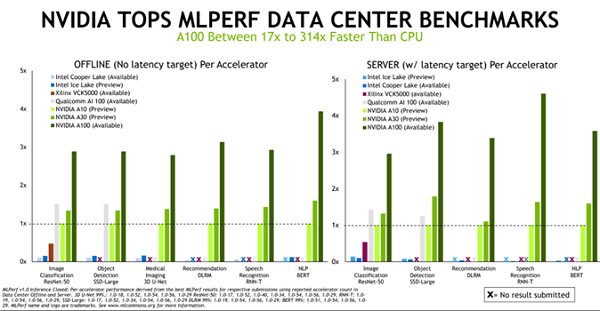

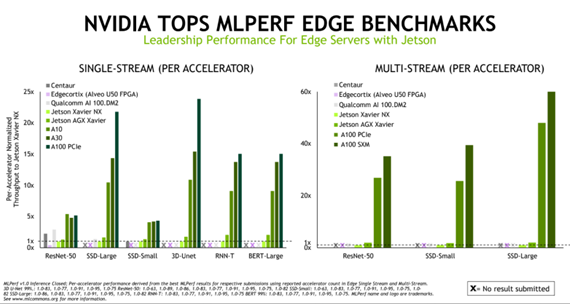

What has become Nvidia’s regular romp through MLPerf AI benchmarks continued with today’s release of the latest performance measurements across a range of inference workloads, including computer vision, medical imaging, recommender systems, speech recognition and natural language processing.

The latest benchmark round received submissions from 17 organizations and includes 1,994 performance and 862 power efficiency results for ML inference systems. The full results are published on the MLCommons website.

Nvidia said it was the only company to submit results for every test in the data center and edge categories, delivering top performance results across all MLPerf workloads.

“Based upon a diverse set of usages and scenarios, the MLPerf 1.0 Inference benchmark is a proving ground where AI Inference solutions can be measured and compared,” said Dave Salvator, senior manager, product marketing in Nvidia’s Accelerated Computing Group. “In version 1.0, Nvidia has once again delivered outstanding performance across all seven workloads, both for the data center and edge suites…. Our TensorRT inference SDK played a pivotal role in making these great results happen. Nearly all Nvidia submissions were done using INT8 precision, which delivers outstanding performance while meeting or exceeding MLPerf’s accuracy requirements.”

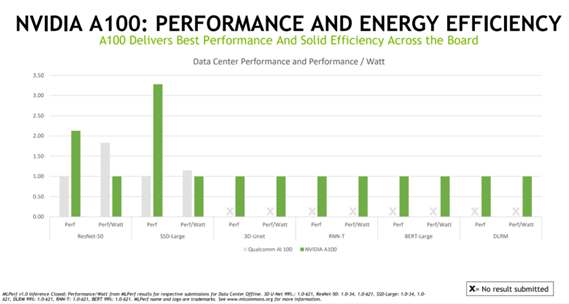

“A new feature of MLPerf Inference 1.0 is measuring power and energy efficiency,” Salvator said. “Nvidia made submissions for all Data Center scenarios, and nearly all of the Edge scenarios, a testament to our platform’s versatility, but also its great performance and energy efficiency.”

Below is Nvidia’s review of the benchmark results: