The U.S. National Energy Research Scientific Computing Center today unveiled the Perlmutter HPC system, a beast of a machine powered by 6,159 Nvidia A100 GPUs and delivering 4 exaflops of mixed precision performance.

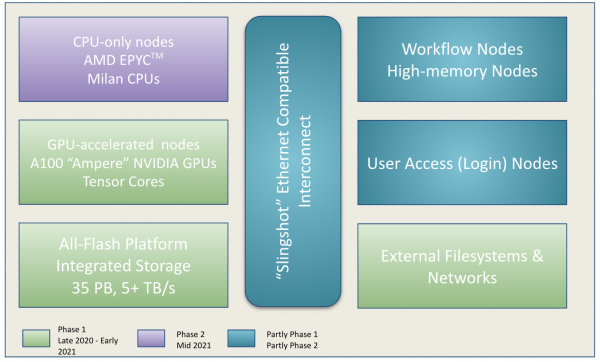

Perlmutter is a heterogeneous system, with both GPU-accelerated and CPU-only nodes, built on the HPE Cray Shasta platform and its Slingshot interconnect. The system is being installed in two phases. Today’s unveiling marks Phase 1, which includes the system’s GPU-accelerated nodes and scratch file system; Phase 2 will add the CPU-only nodes later in 2021.

“That makes Perlmutter the fastest system on the planet on the 16- and 32-bit mixed-precision math AI uses,” said Dion Harris, senior product marketing manager at Nvidia, in a blog post released today. “And that performance doesn’t even include a second phase coming later this year to the system based at Lawrence Berkeley National Lab.”

For comparison, the current top-ranked system on the Top500 list of the world’s most powerful supercomputers, which ranks machines by 64-bit (double-precision) performance on the HPL benchmark, is Fugaku, jointly developed by Japan’s RIKEN scientific institute and Fujitsu.

Perlmutter’s A100 GPUs feature Nvidia Tensor Core technology and are cooled by direct liquid cooling. Perlmutter is also NERSC’s first supercomputer with an all-flash scratch filesystem. According to NERSC, the 35-petabyte Lustre filesystem will move data at more than 5 terabytes per second, making it the fastest storage system of its kind.
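As a rough illustration of what those storage figures imply, the sketch below estimates how long it would take to stream the entire scratch filesystem once at the quoted bandwidth. It assumes decimal units (1 PB = 1,000 TB) and that 5 TB/s is sustained for the whole transfer, which real workloads rarely achieve; because NERSC quotes “more than” 5 TB/s, the result is an upper bound under that assumption.

```python
# Rough estimate: time to read the whole scratch filesystem once.
# Assumptions: decimal units (1 PB = 1,000 TB) and the quoted 5 TB/s
# aggregate bandwidth sustained for the entire transfer.

CAPACITY_PB = 35.0         # all-flash Lustre scratch capacity, as quoted
BANDWIDTH_TB_PER_S = 5.0   # quoted as "more than" 5 TB/s, so a lower bound


def full_scan_seconds(capacity_pb: float, bandwidth_tb_per_s: float) -> float:
    """Return the seconds needed to stream capacity_pb at bandwidth_tb_per_s."""
    capacity_tb = capacity_pb * 1_000.0
    return capacity_tb / bandwidth_tb_per_s


seconds = full_scan_seconds(CAPACITY_PB, BANDWIDTH_TB_PER_S)
# Since the real bandwidth exceeds 5 TB/s, this is an upper bound on time.
print(f"{seconds:,.0f} s (about {seconds / 3600:.1f} hours)")
```

At these figures, a full pass over 35 PB takes about 7,000 seconds, or just under two hours.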

Phase 1 of the Perlmutter installation is made up of 12 GPU-accelerated cabinets housing more than 1,500 nodes; Phase 2 will add 12 CPU cabinets with more than 3,000 nodes later this year. Each Phase 1 GPU-accelerated node has four A100 Tensor Core GPUs, based on the Nvidia Ampere GPU architecture, plus a single AMD “Milan” CPU and 256GB of memory. The Phase 1 system also includes Non-Compute Nodes (NCNs), comprising 20 User Access Nodes (NCN-UANs, which serve as login nodes) and service nodes. According to NERSC, some NCN-UANs can be used to deploy containerized user environments, with Kubernetes handling orchestration.
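The GPU and node counts can be cross-checked with simple arithmetic. The sketch below assumes every Phase 1 node carries exactly four GPUs and splits the headline 4-exaflop mixed-precision figure evenly across all 6,159 GPUs; both are simplifications for illustration, not NERSC’s own accounting.

```python
# Cross-check of the Phase 1 figures quoted in the article.
# Simplifying assumptions: every GPU node holds exactly four A100s, and the
# headline 4-exaflop mixed-precision figure is split evenly across all GPUs.

TOTAL_GPUS = 6_159
GPUS_PER_NODE = 4
MIXED_PRECISION_EXAFLOPS = 4.0
TFLOPS_PER_EXAFLOP = 1_000_000  # 1 EFLOPS = 10**6 TFLOPS


def implied_node_count(total_gpus: int, gpus_per_node: int) -> float:
    """Nodes implied by the GPU count (fractional if the counts don't divide evenly)."""
    return total_gpus / gpus_per_node


def per_gpu_tflops(total_exaflops: float, total_gpus: int) -> float:
    """Average mixed-precision throughput per GPU, in TFLOPS."""
    return total_exaflops * TFLOPS_PER_EXAFLOP / total_gpus


nodes = implied_node_count(TOTAL_GPUS, GPUS_PER_NODE)
tflops = per_gpu_tflops(MIXED_PRECISION_EXAFLOPS, TOTAL_GPUS)
print(f"~{nodes:,.0f} nodes, ~{tflops:,.0f} TFLOPS per GPU")
```

The implied count of about 1,540 nodes lines up with the “more than 1,500 nodes” cited for Phase 1, and the even split works out to roughly 650 TFLOPS of mixed-precision throughput per GPU.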

Each Phase 2 CPU node will have two AMD Milan CPUs and 512GB of memory. The Phase 2 system also adds 20 more login nodes and four large memory nodes.
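Combining the per-node memory figures from both phases gives a rough lower bound on aggregate host memory. The sketch treats the quoted “more than 1,500” and “more than 3,000” node counts as minimums, and it leaves out GPU memory and the four large memory nodes, whose capacities are not given here.

```python
# Lower-bound estimate of aggregate host memory across both phases.
# Assumptions: the quoted "more than" node counts are used as minimums, and
# GPU memory plus the four large memory nodes are excluded (not quoted).

PHASES = {
    # phase: (minimum node count, GB of memory per node)
    "Phase 1 (GPU nodes)": (1_500, 256),
    "Phase 2 (CPU nodes)": (3_000, 512),
}


def aggregate_tb(nodes: int, gb_per_node: int) -> float:
    """Total memory in TB (decimal units: 1 TB = 1,000 GB)."""
    return nodes * gb_per_node / 1_000


total_tb = 0.0
for phase, (nodes, gb_per_node) in PHASES.items():
    phase_tb = aggregate_tb(nodes, gb_per_node)
    total_tb += phase_tb
    print(f"{phase}: at least {phase_tb:,.0f} TB")

print(f"Combined: at least {total_tb:,.0f} TB (~{total_tb / 1_000:.1f} PB)")
```

On these assumptions, the two phases together carry at least about 1.9 PB of host memory, with Phase 2’s CPU nodes accounting for roughly four-fifths of it.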

The programming environment will feature the Nvidia HPC SDK (Software Development Kit) alongside the Cray Compiling Environment (CCE), GNU and LLVM compilers, supporting parallel programming models such as MPI, OpenMP, CUDA and OpenACC for C, C++ and Fortran codes.

Installed at NERSC’s facility in Shyh Wang Hall at Lawrence Berkeley National Laboratory, the system is named for Saul Perlmutter, an astrophysicist at Berkeley Lab who shared the 2011 Nobel Prize in Physics for the discovery that the rate at which the universe expands is accelerating.

Perlmutter’s Phase 1 cabinets, shown without their doors, reveal the blue and red lines of the direct liquid cooling system.

More than two dozen applications are being prepared for Perlmutter, aiming to advance research in astrophysics, climate science and other fields. In one project, the supercomputer will help assemble what is expected to be the largest 3D map of the visible universe to date by processing data from the Dark Energy Spectroscopic Instrument (DESI), a kind of cosmic camera that can capture as many as 5,000 galaxies in a single exposure. The goal is to shed light on dark energy, the mysterious physics behind the accelerating expansion of the universe, which Perlmutter helped discover.

“Researchers need the speed of Perlmutter’s GPUs to capture dozens of exposures from one night to know where to point DESI the next night,” said Rollin Thomas, a big data architect in NERSC’s Data and Analytics Services group. “Preparing a year’s worth of the data for publication would take weeks or months on prior systems, but Perlmutter should help them accomplish the task in as little as a few days.”

Other work on Perlmutter is expected to focus on materials science, looking at atomic interactions that could point the way to better batteries and biofuels.

“In the past it was impossible to do fully atomistic simulations of big systems like battery interfaces, but now scientists plan to use Perlmutter to do just that,” said Brandon Cook, an applications performance specialist at NERSC who’s helping researchers launch such projects.