The new edition of the Top500 list of the world’s most powerful supercomputers saw the Japanese Fugaku system solidify its number one status in a list that the Top500 organizers said shows a somewhat static and flattening performance growth curve. In fact, only one new system attained the top 10 (here’s the complete list).

Fugaku performance is now at 442 petaflops on the High Performance Linpack (HPL), a modest increase from the 416 petaflops the system achieved with its debut a year ago. In addition, where Fugaku really shines is its mixed precision HPL-AI benchmark performance, which grew to 2 exaflops, a leap from its 1.4 exaflops mark recorded a year ago. These are the first benchmark measurements above 1 exaflop for any precision on any type of hardware, according to Top500.

Another development reflects the market growth of cloud HPC: Microsoft Azure and Amazon EC2 instances placed high on the new list. No. 24 Pioneer-EU and No. 27 Pioneer-WUS2 both rely on Azure, and at No. 41 is an Amazon EC2 Instance Cluster.

On the geopolitical front, Top500 list organizers said this list saw fewer systems in China than expected, with 186 supercomputers, a significant drop from 212 machines on the previous list edition. “There hasn’t been much definitive proof of why this is happening, but it certainly is something to note,” the organizers said.

Still, China has more systems on the list than any other country. The USA checked in with 123 systems, an increase over the 113 American machines on the last list. Despite having fewer machines, the U.S. systems outstripped the Chinese ones in aggregate performance: 856.8 Pflop/s against 445.3 Pflop/s for China’s machines.
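One rough way to read those totals is as an average per listed system. The short Python sketch below uses only the system counts and aggregate figures quoted above; the variable and function names are illustrative. A simple mean is a blunt instrument here, since a handful of very large machines account for much of each country's total.

```python
# Figures quoted in the article: number of listed systems and
# aggregate HPL performance (Pflop/s) per country.
SYSTEMS = {"USA": 123, "China": 186}
AGGREGATE_PFLOPS = {"USA": 856.8, "China": 445.3}


def average_pflops(country: str) -> float:
    """Mean HPL performance per listed system, in Pflop/s."""
    return AGGREGATE_PFLOPS[country] / SYSTEMS[country]


for name in SYSTEMS:
    print(f"{name}: {average_pflops(name):.2f} Pflop/s per system on average")
```

On these numbers the average U.S. entry is close to three times the size of the average Chinese entry.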

China’s relatively modest standing on the new list reinforced rumors circulating in HPC circles that the country may not be forthcoming about its true supercomputing prowess.

Fugaku supercomputer at Riken

“There’s no new big systems for China,” said Jack Dongarra, distinguished professor of computer science at the University of Tennessee and a creator of the Linpack benchmark more than 40 years ago. “There was some anticipation that maybe China would have an exascale machine this time… We see very few new machines from China. Which is a little surprising. Maybe there’s some kind of political thing going on there… That would be my guess, that they are holding back. There are rumors that they have at least one exascale machine. And again, those are rumors but then they didn’t announce it. So you know, you could only assume that something like that’s happening.”

Jack Dongarra, University of Tennessee

As for the somewhat static nature of the new list, Dongarra agreed that it could be the calm before something of an exascale-driven storm.

“Yes, and it’s long overdue,” Dongarra said. “But I think it’s about to emerge, I would say in the next year. I think the rumor is in the U.S. it (exascale) would appear at the end of the year in the November list. So in November, we would expect to see the U.S. exascale machine… The next wave is about to hit. It’ll be exciting next time. Hold your breath.”

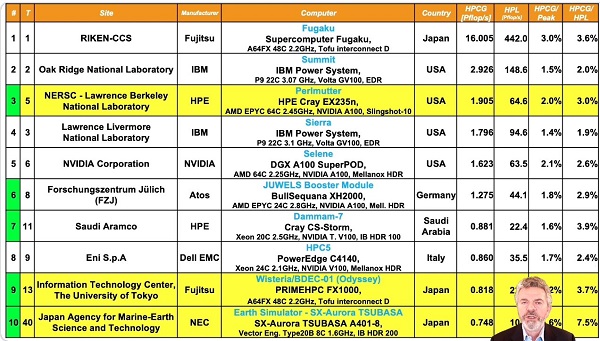

Here’s a rundown of current top 10:

- Fugaku, a Fujitsu system installed at the RIKEN Center for Computational Science (R-CCS) in Kobe, Japan, grew its Arm A64FX capacity from 7,299,072 cores to 7,630,848 cores, resulting in its world-record 442 petaflops result on HPL. This puts it roughly three times ahead of the no. 2 system on the list.

- Summit, an IBM-built system installed in 2018 at Oak Ridge National Laboratory, remains the fastest system in the US with a performance of 148.8 petaflops. Summit has 4,356 nodes, each one housing two 22-core Power9 CPUs and six NVIDIA Tesla V100 GPUs. It had been on top of the Top500 until last June.

- Sierra, another IBM system, installed at the Lawrence Livermore National Laboratory in California, is ranked third with an HPL mark of 94.6 petaflops. Its architecture is very similar to that of Summit, with each of its 4,320 nodes equipped with two Power9 CPUs and four NVIDIA Tesla V100 GPUs.

- Sunway TaihuLight, a system developed by China’s National Research Center of Parallel Computer Engineering & Technology (NRCPC) and installed at the National Supercomputing Center in Wuxi, is listed at number four. It is powered exclusively by Sunway SW26010 processors and achieves 93 petaflops on HPL.

- Perlmutter, at No. 5 and housed at the National Energy Research Scientific Computing Center (NERSC) at the Lawrence Berkeley National Laboratory, is new to the top 10. It is based on the HPE Cray “Shasta” platform and is a heterogeneous system with AMD EPYC based nodes and 1,536 NVIDIA A100 GPU accelerated nodes. Perlmutter achieved 64.6 Pflop/s.

- At number six is Selene, an NVIDIA DGX A100 SuperPOD installed in-house at NVIDIA Corp. It was listed as number seven last June but has doubled in size, allowing it to move up the list by two positions. The system is based on AMD EPYC processors with NVIDIA’s new A100 GPUs for acceleration. Selene achieved 63.4 petaflops on HPL as a result of the upgrade.

- Tianhe-2A (Milky Way-2A), a system developed by China’s National University of Defense Technology (NUDT) and deployed at the National Supercomputer Center in Guangzhou, is ranked 7th. It is powered by Intel Xeon CPUs and NUDT’s Matrix-2000 DSP accelerators and achieves 61.4 petaflops on HPL.

- The JUWELS Booster Module is listed at number eight. The Atos-built BullSequana machine was recently installed at the Forschungszentrum Jülich (FZJ) in Germany. It is part of a modular system architecture, and a second, Xeon-based JUWELS Module is listed separately on the TOP500 at position 44. These modules are integrated using the ParTec Modulo Cluster Software Suite. The Booster Module uses AMD EPYC processors with NVIDIA A100 GPUs for acceleration, similar to the number six Selene system. Running by itself, the JUWELS Booster Module was able to achieve 44.1 HPL petaflops, which makes it the most powerful system in Europe.

- HPC5, a Dell PowerEdge system installed by the Italian company Eni S.p.A., is ranked 9th. It achieves a performance of 35.5 petaflops using Intel Xeon Gold CPUs and NVIDIA Tesla V100 GPUs. It is the most powerful system in the list used for commercial purposes at a customer site.

- Frontera, a Dell C6420 system that was installed at the Texas Advanced Computing Center of the University of Texas last year, is now listed at number ten. It achieves 23.5 petaflops using 448,448 of its Intel Platinum Xeon cores.
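For a sense of how wide the gaps are, the HPL figures quoted in the rundown can be compared directly. The Python sketch below only restates those numbers; the names `TOP10_PFLOPS` and `ratio_to` are illustrative and not part of any Top500 tooling.

```python
# HPL results (petaflops) as quoted in the rundown above.
TOP10_PFLOPS = {
    "Fugaku": 442.0,
    "Summit": 148.8,
    "Sierra": 94.6,
    "Sunway TaihuLight": 93.0,
    "Perlmutter": 64.6,
    "Selene": 63.4,
    "Tianhe-2A": 61.4,
    "JUWELS Booster Module": 44.1,
    "HPC5": 35.5,
    "Frontera": 23.5,
}


def ratio_to(leader: str, other: str) -> float:
    """How many times faster `leader` is than `other` on HPL."""
    return TOP10_PFLOPS[leader] / TOP10_PFLOPS[other]


# Sort by HPL result, fastest first, to recover the ranking.
ranked = sorted(TOP10_PFLOPS, key=TOP10_PFLOPS.get, reverse=True)
for position, name in enumerate(ranked, start=1):
    print(f"{position:2d}. {name}: {TOP10_PFLOPS[name]} Pflop/s")

print(f"Fugaku vs. Summit: {ratio_to('Fugaku', 'Summit'):.2f}x")
```

Sorting by the quoted figures reproduces the order of the bullets, and the Fugaku-to-Summit ratio comes out just under three.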

Green500

List organizers said that “although there was a trend of steady progress in the Green500, nothing has indicated a big step toward newer technologies.”

At the No. 1 spot for the Green500 was MN-3 from Preferred Networks in Japan. Knocked from the top of the last list by NVIDIA DGX SuperPOD in the US, MN-3 is back to reclaim its crown. This system relies on the MN-Core chip, an accelerator optimized for matrix arithmetic, as well as a Xeon Platinum 8260M processor. MN-3 achieved a 29.70 gigaflops/watt power-efficiency and has a TOP500 ranking of 337.

HiPerGator AI of the University of Florida in the USA is now No. 2 on the Green500 with a 29.52 gigaflops/watt power-efficiency. This NVIDIA machine boasts 138,880 cores, far more than any other machine in the Top5 of the Green500. It utilizes an AMD processor, specifically the AMD EPYC 7742. With an overall performance that outpaces most of the other competition on the Green500, it’s no surprise this machine holds the 22nd spot on the TOP500 list.

The Wilkes-3 system out of the University of Cambridge in the U.K. has achieved the No. 3 spot. A Dell EMC machine, this supercomputer had an efficiency of 28.14 gigaflops/watt. Like HiPerGator AI, this system relies on an AMD EPYC processor – the AMD EPYC 7763. This system is ranked 101 on the TOP500 list.

The MeluXina machine of LuxProvide holds the No. 4 spot on the Green500. The only machine on the list from Luxembourg, it uses an AMD EPYC processor and its efficiency was clocked at 26.96 gigaflops/watt. It’s ranked 37 on the TOP500.

Rounding out the Top5 of the Green500 is NVIDIA DGX SuperPOD. Created by NVIDIA in the U.S. and utilizing an AMD EPYC processor, it is ranked 216 on the TOP500. The system achieved 26.20 gigaflops/watt of efficiency.

Outside of the top five, a significant development is the Perlmutter system. “This supercomputer was the only new machine in the Top10 in the Top500 rankings; it also claims the No. 6 spot on the Green500,” the list organizers said. “With a performance of 64.6 Pflop/s, this supercomputer’s power efficiency of 25.55 gigaflops/watt is quite impressive.”
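Taken together, the two Perlmutter figures imply an approximate power draw during the benchmark run. The Python sketch below works that out under one stated assumption: that the Green500 efficiency figure is simply HPL performance divided by the power measured for the same run. The function name is illustrative.

```python
def implied_power_megawatts(hpl_pflops: float, gflops_per_watt: float) -> float:
    """Estimate power draw from HPL performance and power efficiency.

    Assumes efficiency = HPL performance / power for the same run.
    """
    gflops = hpl_pflops * 1e6          # 1 Pflop/s = 1,000,000 Gflop/s
    watts = gflops / gflops_per_watt   # (Gflop/s) / (Gflop/s per W) = W
    return watts / 1e6                 # W -> MW


# Perlmutter figures quoted above: 64.6 Pflop/s at 25.55 gigaflops/watt.
print(f"{implied_power_megawatts(64.6, 25.55):.2f} MW")
```

On those inputs the estimate lands at roughly two and a half megawatts.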

HPCG

The TOP500 list has incorporated the High-Performance Conjugate Gradient (HPCG) Benchmark results, which provide an alternative metric for assessing supercomputer performance and are meant to complement the HPL measurement. Fugaku, the winner of the TOP500, stayed consistent with last year’s results with 16.0 HPCG-petaflops. Summit also matched its result from last year at 2.93 HPCG-petaflops, while Perlmutter, a new system, benchmarked at 1.91 HPCG-petaflops.

HPL-AI

The HPL-AI benchmark seeks to highlight the convergence of HPC and artificial intelligence (AI) workloads based on machine learning and deep learning by solving a system of linear equations using novel, mixed-precision algorithms that exploit modern hardware.
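The general idea behind a mixed-precision solve can be shown with classic iterative refinement: do the expensive solve in low precision, then correct the answer using residuals computed in full precision. The Python sketch below is a toy illustration of that idea only, not the HPL-AI implementation. It emulates FP32 by rounding through the standard `struct` module, repeats the elimination on every pass instead of reusing factors as a real solver would, and all function names and the 3x3 test system are made up for the example.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def solve_fp32(a, b):
    """Gaussian elimination with partial pivoting, rounded to FP32 throughout."""
    n = len(b)
    # Augmented matrix [A | b], with every entry rounded to FP32.
    m = [[to_f32(v) for v in row] + [to_f32(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = to_f32(m[r][col] / m[col][col])
            for c in range(col, n + 1):
                m[r][c] = to_f32(m[r][c] - to_f32(factor * m[col][c]))
    # Back substitution, also in emulated FP32.
    x = [0.0] * n
    for r in reversed(range(n)):
        s = m[r][n]
        for c in range(r + 1, n):
            s = to_f32(s - to_f32(m[r][c] * x[c]))
        x[r] = to_f32(s / m[r][r])
    return x


def residual(a, x, b):
    """r = b - A x, computed in full FP64 precision."""
    return [bi - sum(aij * xj for aij, xj in zip(row, x)) for row, bi in zip(a, b)]


def refine(a, b, iterations=5):
    """Low-precision solve followed by FP64 residual corrections."""
    x = solve_fp32(a, b)
    for _ in range(iterations):
        r = residual(a, x, b)      # high-precision residual
        d = solve_fp32(a, r)       # cheap low-precision correction
        x = [xi + di for xi, di in zip(x, d)]
    return x


# Small, well-conditioned system chosen only for illustration.
a = [[4.1, 1.3, 2.2], [1.7, 3.9, 0.4], [2.3, 0.6, 5.2]]
b = [1.0, 2.0, 3.0]
x_low = solve_fp32(a, b)
x_ref = refine(a, b)
print("FP32-only residual:", max(abs(v) for v in residual(a, x_low, b)))
print("refined residual:  ", max(abs(v) for v in residual(a, x_ref, b)))
```

The point of the design is that most of the arithmetic happens in the cheaper format, which is where accelerators are fastest, while the final answer is still accurate to roughly FP64 levels for a well-conditioned problem.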

As with the Top500, RIKEN’s Fugaku system is leading the pack here. As noted above, this machine is considered by some to be the first “exascale” supercomputer. Fugaku demonstrated this new level of performance on the new HPL-AI benchmark with 2 exaflops.